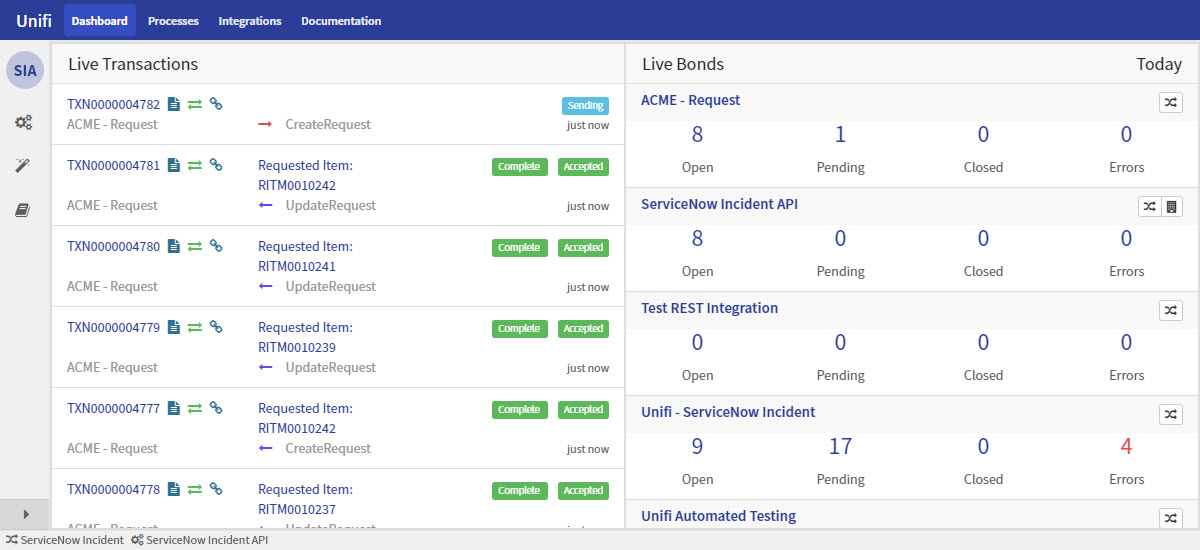

Unifi is an integration platform built specifically to support complex ticket exchange integrations to and from your ServiceNow instance. From no-code to pro-code, Unifi gives you the flexibility, insight, and control to create exceptional integration experiences for you, your partners and your customers.

Unifi is a ServiceNow application that is delivered and managed through the ServiceNow Store. You can find out more information and request a demo through our website.

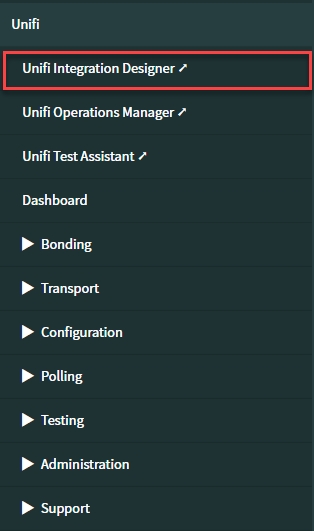

Unifi Integration Designer is the primary and recommended interface used for creating and managing integrations in Unifi.

Designer can be accessed by clicking Integration Designer ➚ from the navigator menu.

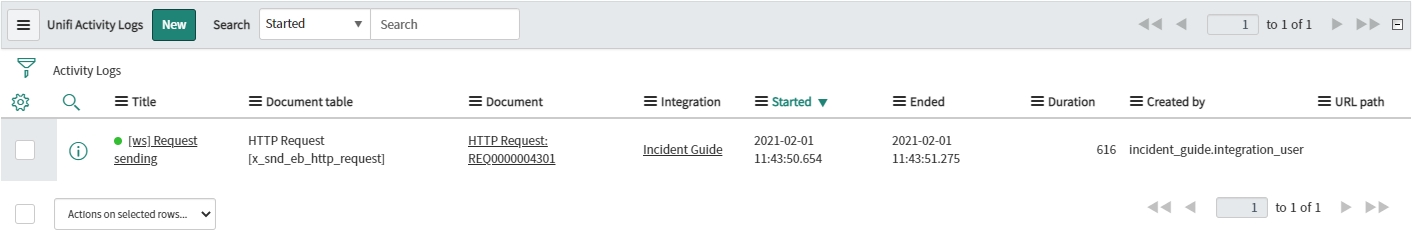

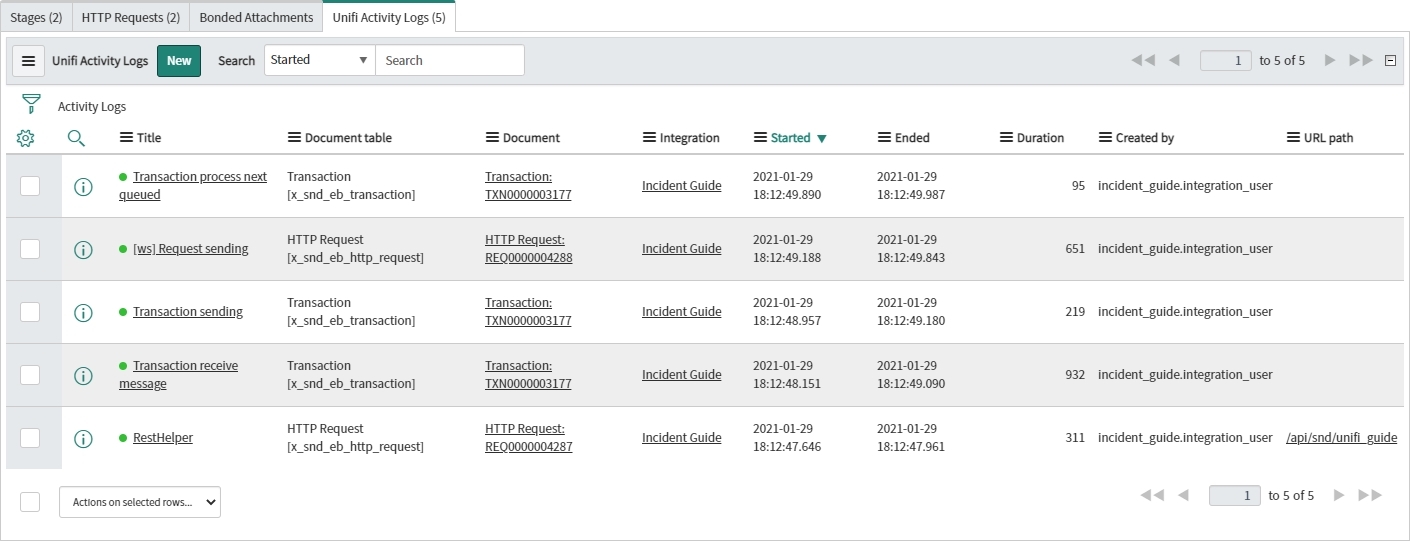

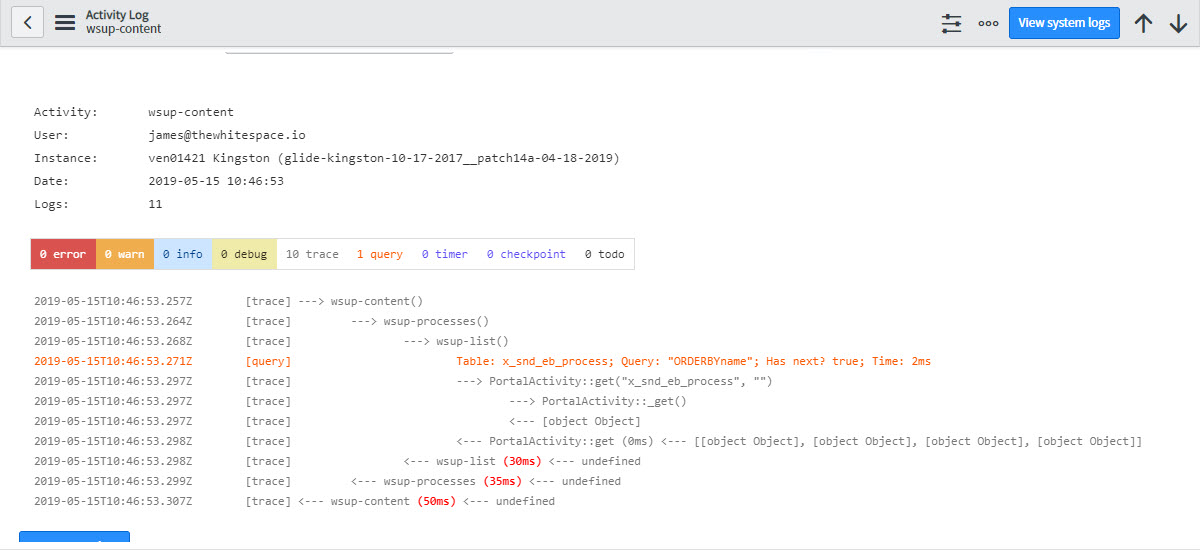

The Activity Logs module is a quick link to the Unifi Activity Logs and shows all entries from the current day.

Not to be confused with the System Logs, the Activity Logs module displays all the entries in the Unifi Activity Log table from the current day. This reduces the clutter in the System Logs table by bringing all the Unifi log entries together in one place, providing contextual links to the records.

Clicking into the records, you will quickly discover that the level of detail and clarity provided in the Activity Logs makes them even more valuable. They are the place to look when debugging.

Below is an excerpt from one of the log entries in the Activity Logs:

The System Logs module is a quick link to the ServiceNow system logs and shows errors and warnings from the current day.

The link to the ServiceNow System Logs shows errors and warnings from the current day. It is the place to look if something catastrophic happens outside of Unifi, or if something isn't captured in the Activity Logs (effectively providing an additional backup).

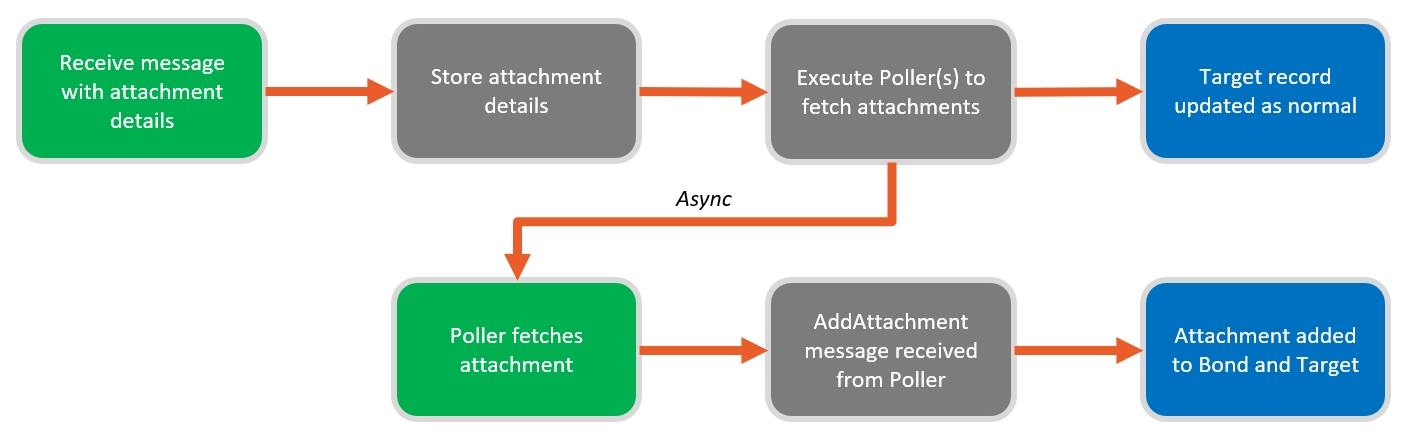

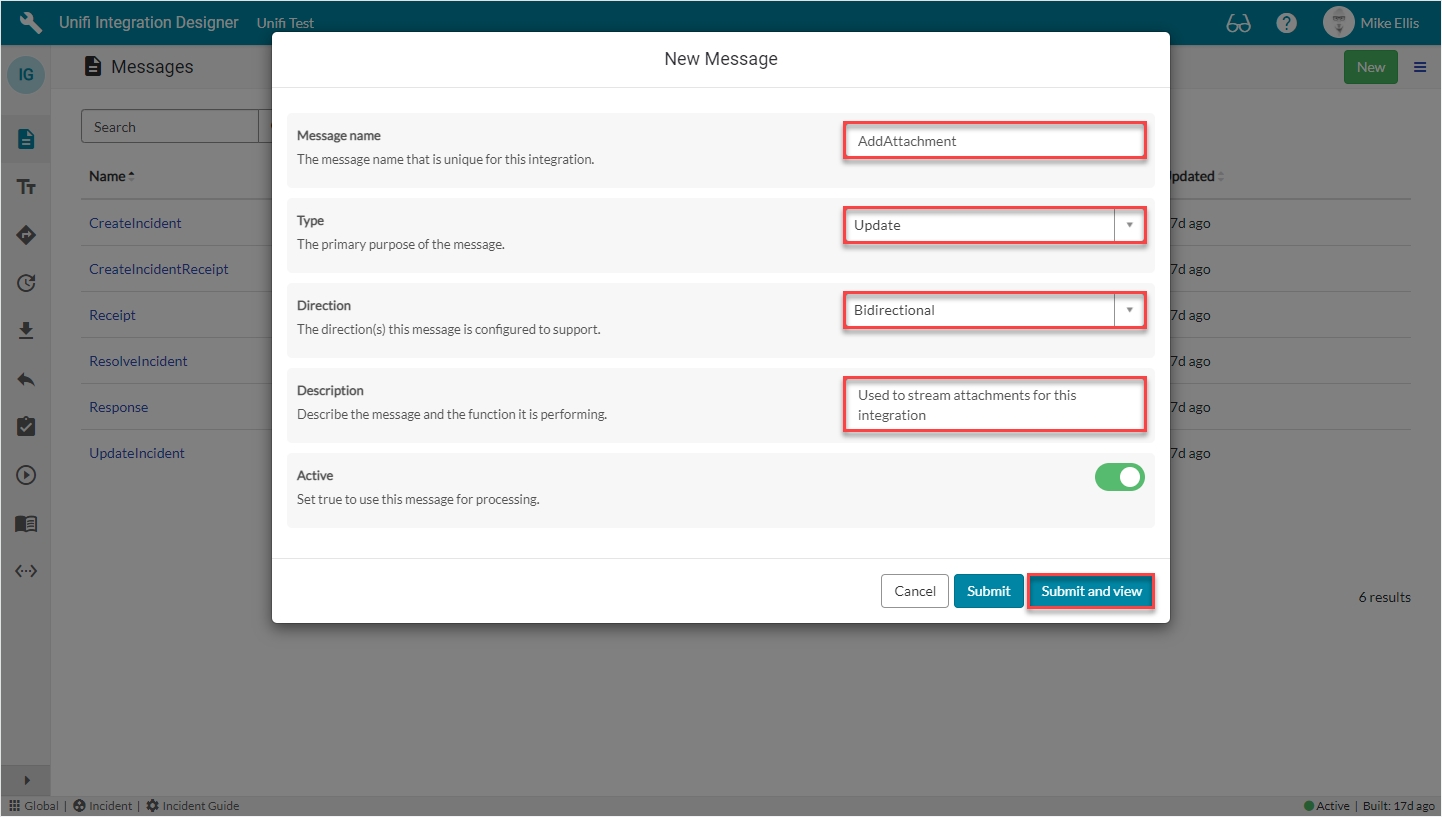

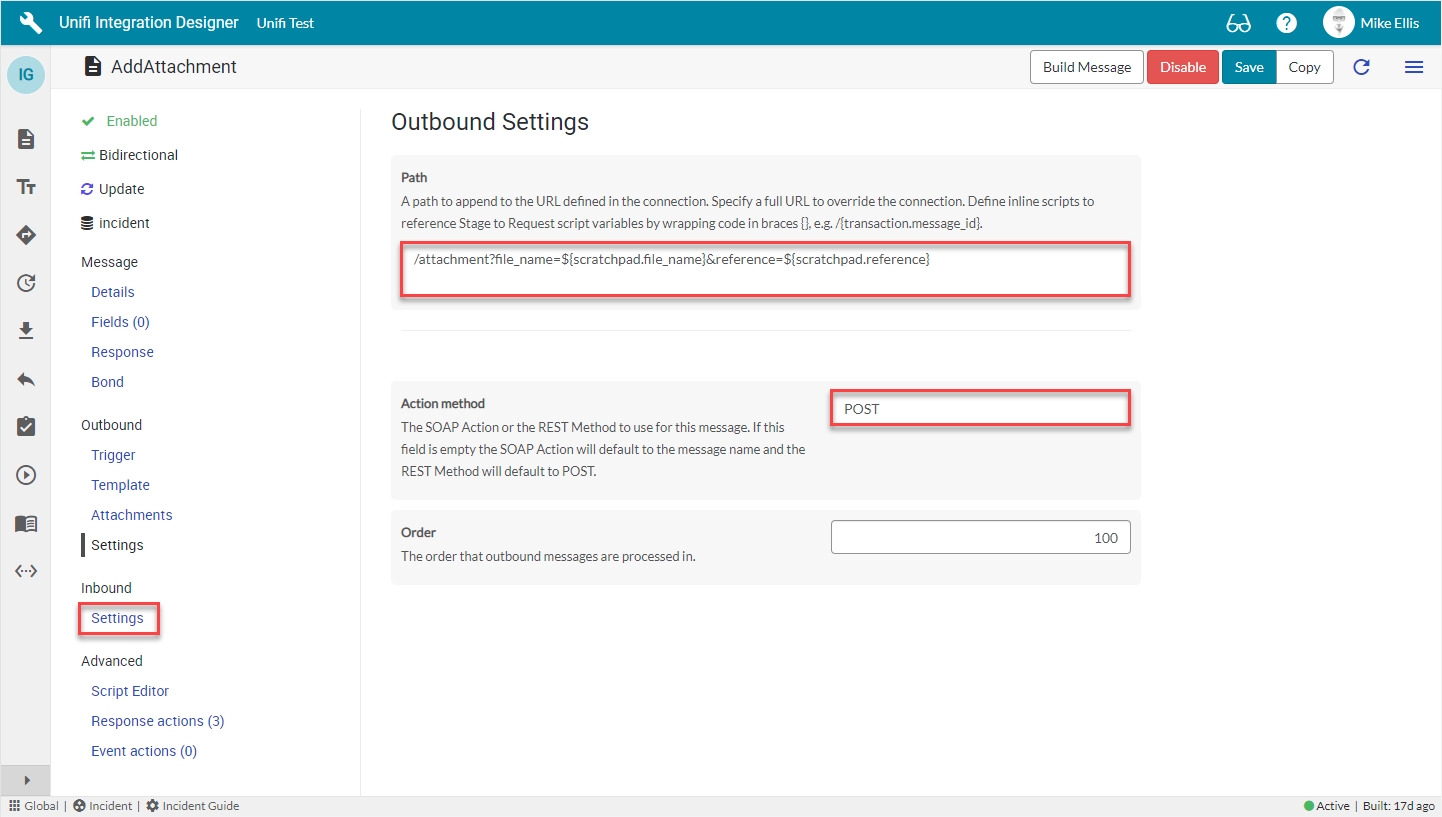

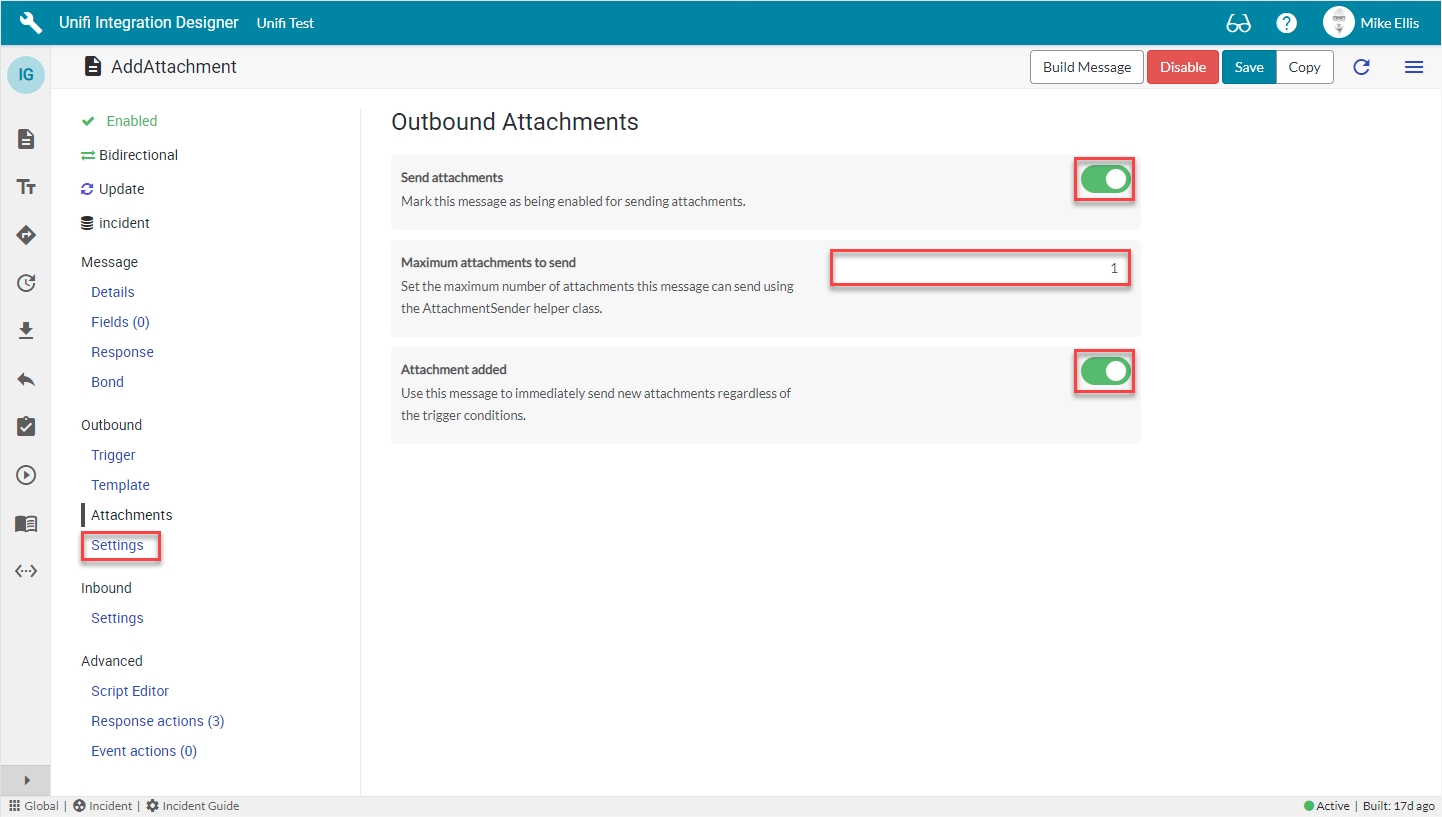

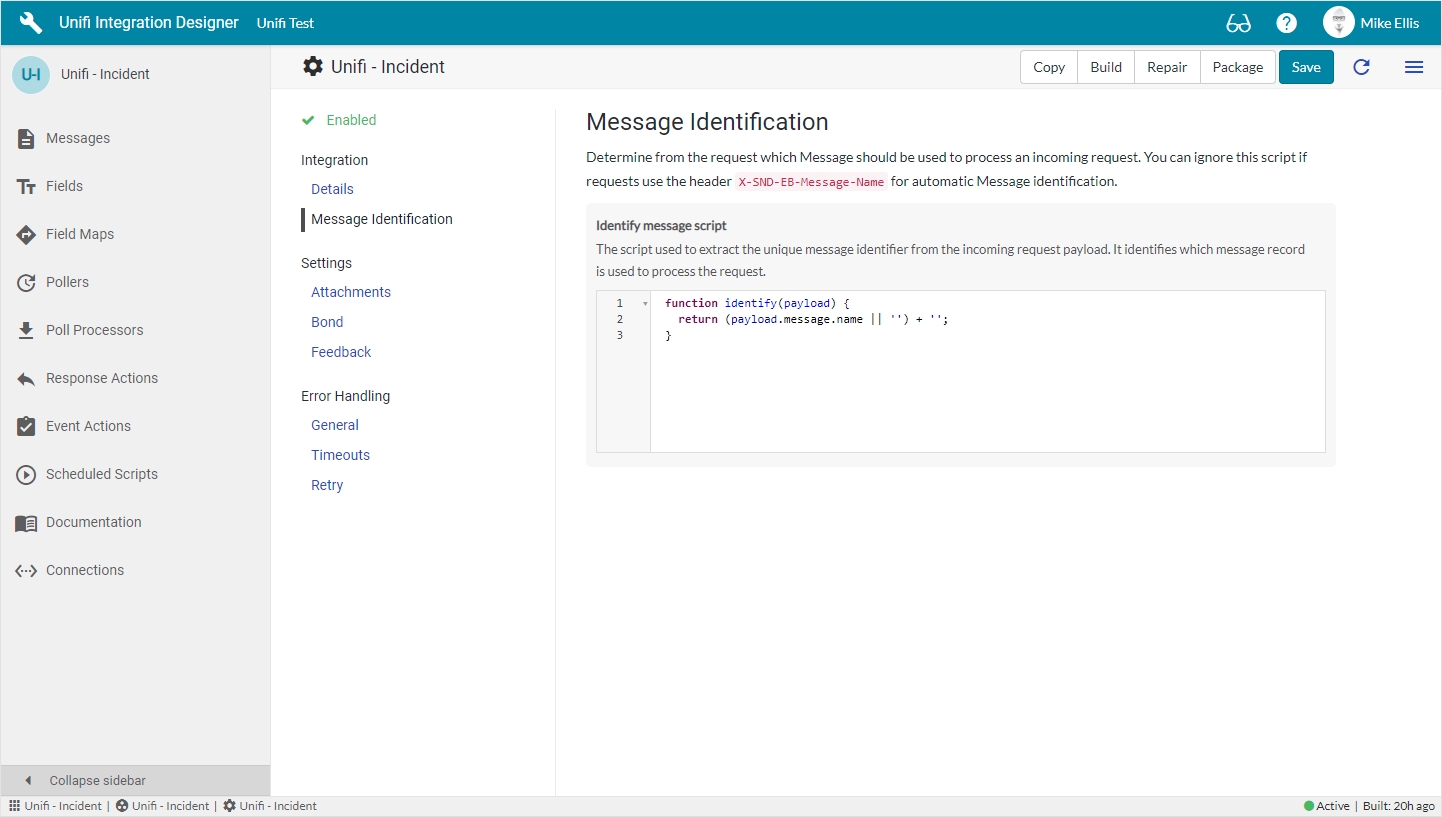

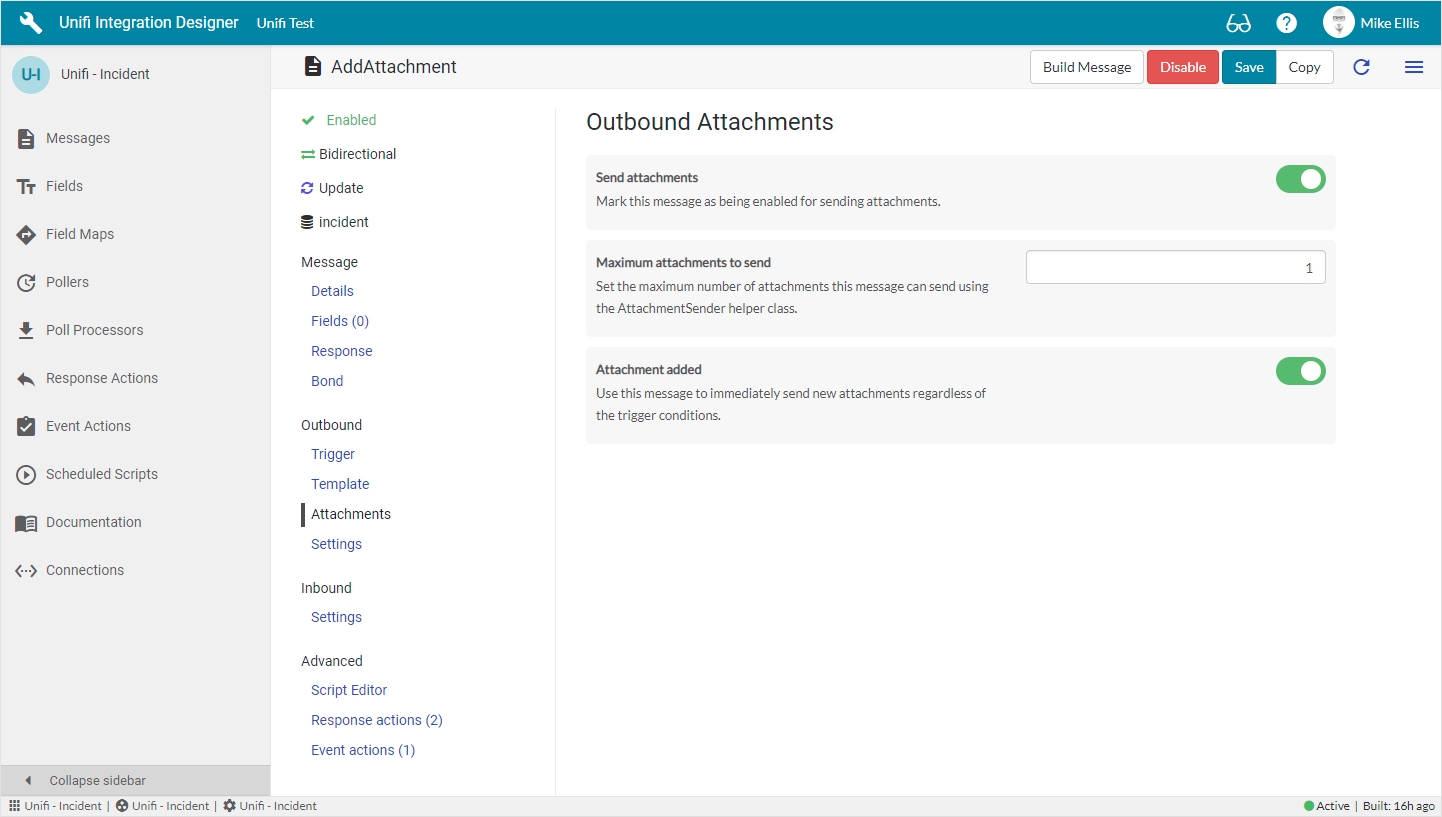

Follow this guide to learn how to configure a dedicated Message in Unifi to handle streamed attachments.

Attachments can be sent either as a stream or embedded in the payload as Base64.

In this guide, you will configure a dedicated Message as part of the Incident Guide Integration (created when following the Bidirectional Asynchronous Incident Guide). It is assumed that the Process, Web Service, Integration & Connection are already in place (if not, please ensure that those elements are in place before continuing).

The components to configure are as follows:

Message

Poll Processors are used to set up, execute and process Poll Requests.

The Poll Processor is a configuration record which contains the logic that will be applied when polling a remote system for data. There are three main scripts which are used to set up, execute and process the Poll Requests.

The Setup Script is the first script to run (it runs at the point in time the Poll Request is created). It is used to build the environment for the poll and define what it will do (for example, create or set up the URL that will be called). It also supplies a params object as one of the arguments, which will be passed through to the subsequent scripts (and on to further Pollers, if required).

The Request Script is used to reach into the remote system and execute the request. This is usually done by making a REST call to the URL defined in the Setup Script.

The Response Script is used to process the information returned from the remote system. This could include converting the data from its proprietary format to a more usable format, sending the data to Unifi, or even kicking off a new poll.
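To make the hand-off between the three scripts concrete, here is a minimal plain-JavaScript sketch (runnable in Node.js, not in ServiceNow) of a params object flowing from a Setup step through a Request step to a Response step. The function names, the `params` fields, the remote URL and the stubbed `fakeFetchJson` transport are all hypothetical illustrations, not Unifi's Poll Processor API.

```javascript
// Hypothetical sketch of the Setup -> Request -> Response hand-off in a
// Poll Processor. None of these function names belong to the Unifi API.

// Setup Script: define what the poll will do and record it on params.
function setupScript(params) {
  params.url = 'https://remote.example.com/api/tickets?updated_since=' +
    encodeURIComponent(params.lastPolled);
  return params;
}

// Request Script: reach into the remote system using the URL from setup.
function requestScript(params, fetchJson) {
  params.responseBody = fetchJson(params.url);
  return params;
}

// Response Script: convert the remote format into a more usable one.
function responseScript(params) {
  params.records = params.responseBody.items.map(function (item) {
    return { reference: item.id, state: item.status };
  });
  return params;
}

// Run the three stages in order, passing the same params object through.
function runPoll(initialParams, fetchJson) {
  var params = setupScript(Object.assign({}, initialParams));
  params = requestScript(params, fetchJson);
  return responseScript(params);
}

// Stubbed transport so the sketch runs without a network connection.
function fakeFetchJson(url) {
  return {
    items: [
      { id: 'EXT-1', status: 'open' },
      { id: 'EXT-2', status: 'closed' }
    ]
  };
}

var result = runPoll({ lastPolled: '2024-01-01T00:00:00Z' }, fakeFetchJson);
console.log(result.url);
console.log(result.records.length); // 2
```

Keeping all state on a single params object is what lets each stage stay small and lets later stages (or a follow-on poll) pick up whatever earlier stages decided.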

Scheduled Scripts are automatically created by the system to perform tasks.

The Scheduled Scripts module displays the scripts which are automatically created by the system in order to perform tasks. These scripts generate log entries in the Activity Logs each time they run.

Some integrations will not have a Message that closes the Bond. In these situations, it is preferable to close any open Bonds manually from a business rule on the Target table, e.g. when an Incident is closed. The following business rule script will close all the Bonds for a given record:

```javascript
(function executeRule(current, previous /*null when async*/) {

    // Find every Bond for this record that is not already closed
    var bond = new GlideRecord('x_snd_eb_bond');
    bond.addQuery('document', '=', current.sys_id);
    bond.addQuery('state', '!=', 'Closed');
    bond.query();

    // Close each open Bond
    while (bond.next()) {
        bond.state = 'Closed';
        bond.update();
    }

})(current, previous);
```

A Poller makes a scheduled request to a remote system.

In cases where it is not possible for a remote system to send us the data, we can make a scheduled request for it using Pollers. All Pollers belong to an integration. Although a Poller belongs to only one integration, an integration can have multiple Pollers.

A Poller is a configuration record which defines the frequency of polling and which logic to use (the logic itself is defined in the Poll Processor). Each time it is run, it creates a corresponding Poll Request record.

Depending on the use case, the use of a Poller to collect data from a remote system poses some development challenges which need to be considered. Namely, there is an additional responsibility and workload placed on the host system to store and check some returned data in order to evaluate what has changed.

For example, in order to decide whether the state has changed, what comments have been added, or even which system made the updates (we don't want to pull back data we have changed ourselves), checks have to be built into the scripts. This is aided by holding a copy of the relevant returned data using Data Stores (see the relevant page in the Administration section).
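As an illustration of the kind of check described above, the sketch below compares freshly polled data with a previously stored copy and reports only what has changed, ignoring comments written by the local integration. It is plain JavaScript under assumed data shapes (`state`, `comments`, `id`, `author`) and a hypothetical integration user name; in Unifi the stored copy would be held in a data store rather than a local variable.

```javascript
// Hypothetical data shape: { state: string, comments: [{ id, author, text }] }.
// Compares a stored copy with freshly polled data and reports what changed.
function diffPolledRecord(stored, polled, ownUser) {
  var previous = stored || { state: null, comments: [] };
  var seenIds = {};
  previous.comments.forEach(function (c) { seenIds[c.id] = true; });

  var newComments = polled.comments.filter(function (c) {
    // Ignore comments already processed and comments written by this integration.
    return !seenIds[c.id] && c.author !== ownUser;
  });

  return {
    stateChanged: previous.state !== polled.state,
    newState: polled.state,
    newComments: newComments
  };
}

var stored = { state: 'open', comments: [{ id: 1, author: 'remote.agent', text: 'Investigating' }] };
var polled = {
  state: 'resolved',
  comments: [
    { id: 1, author: 'remote.agent', text: 'Investigating' },
    { id: 2, author: 'unifi.integration', text: 'Update from ServiceNow' },
    { id: 3, author: 'remote.agent', text: 'Fixed' }
  ]
};

var changes = diffPolledRecord(stored, polled, 'unifi.integration');
console.log(changes.stateChanged, changes.newComments.map(function (c) { return c.id; })); // true [ 3 ]
```

After the changes have been processed, the polled data would replace the stored copy so the next poll compares against it.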

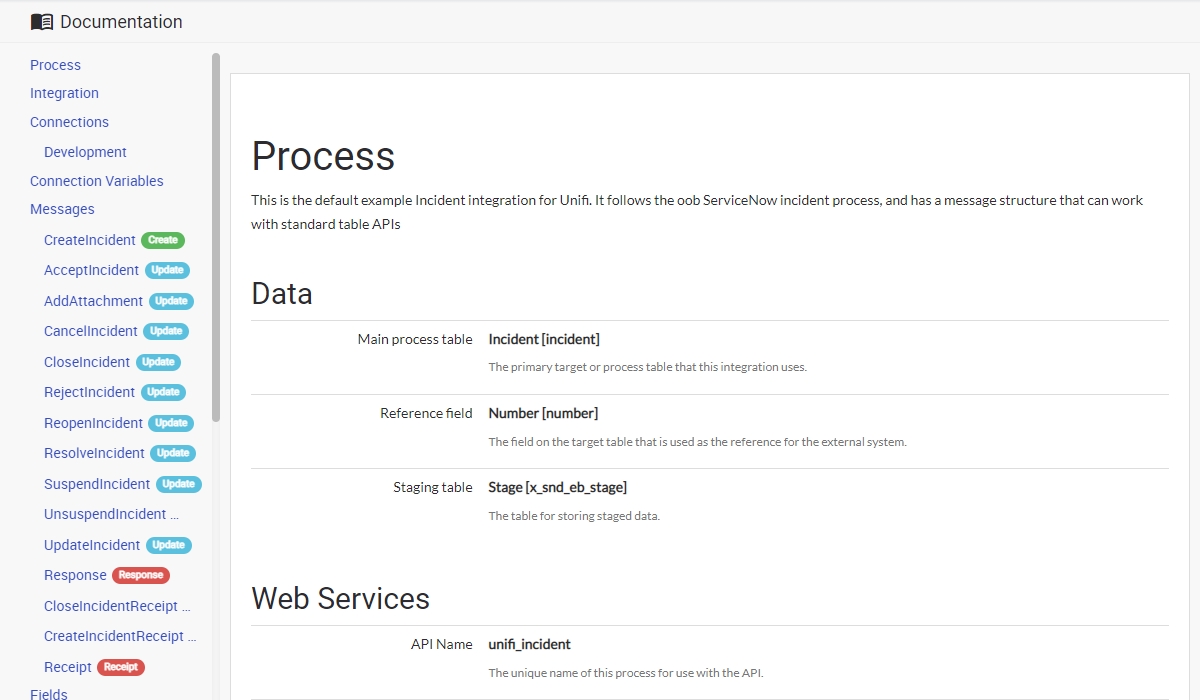

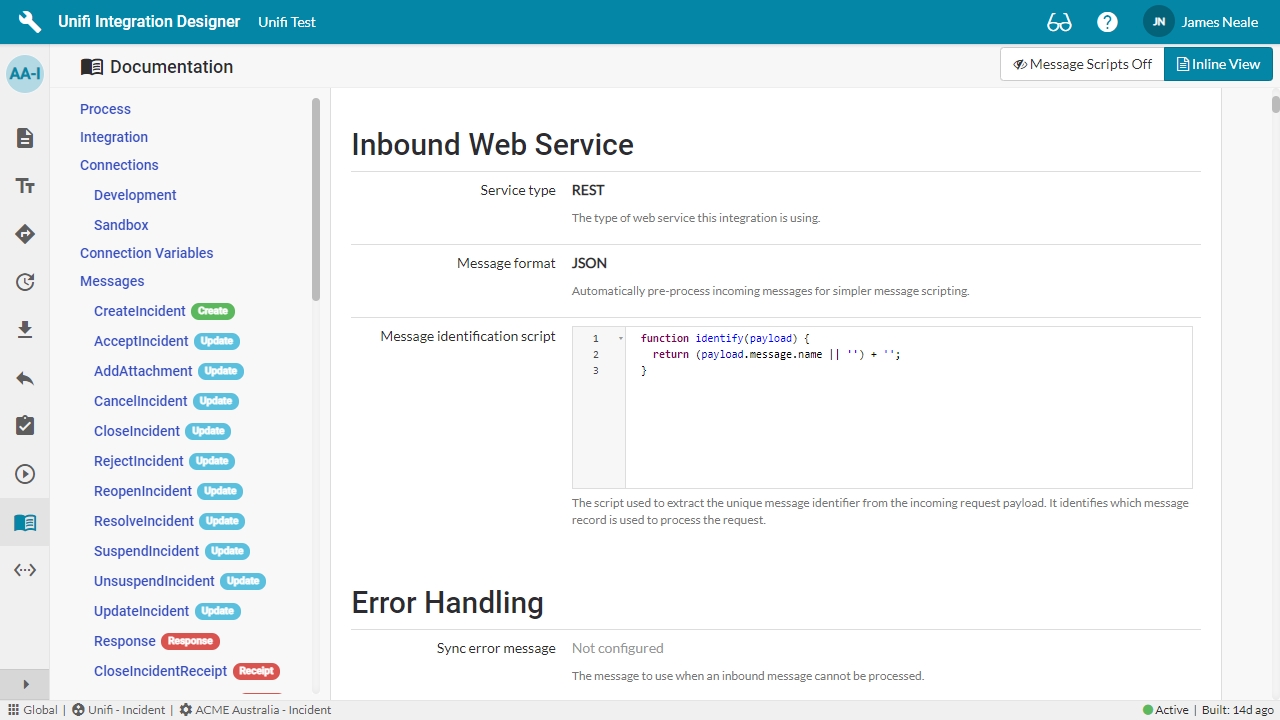

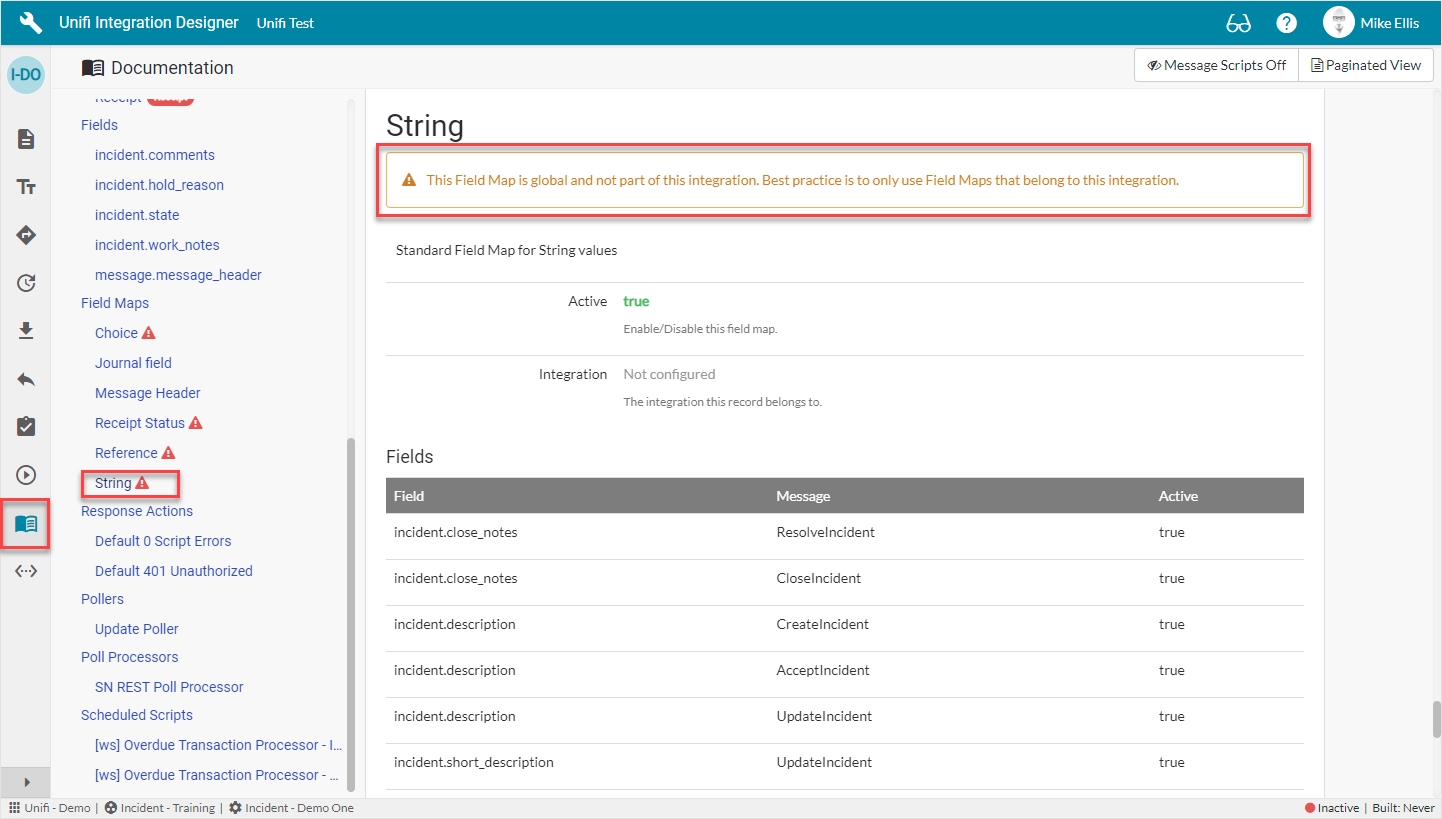

Documenting your integration build is made simple and easy with Unifi's auto-documentation features. From any integration, select the Documentation button to see exactly what has been configured in an easy to read format.

By default, the documentation is viewable as a list of separate pages for easy navigation.

To view the documentation as a single document that can be printed or exported, click the Paginated View button to change it to Inline View. Depending on the size of your integration, this can take some time to generate.

Click the Message Scripts button to toggle having Message Scripts included with each Message definition. Enabling this option with the Inline View can significantly increase the time to generate the documentation.

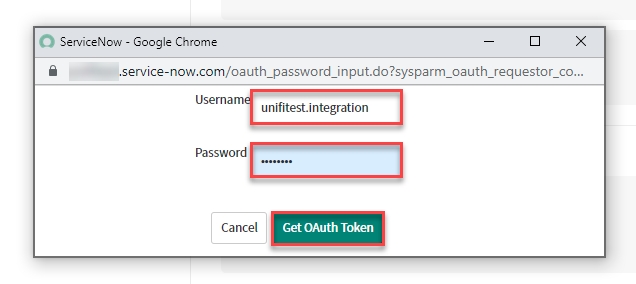

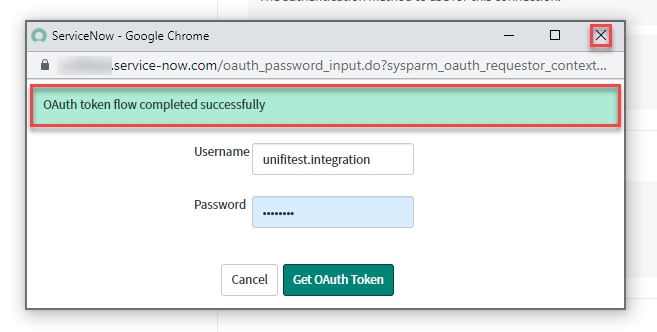

Follow this guide to learn how to setup an OAuth Connection in Unifi.

This document will guide you through the process of configuring an OAuth Connection for your Unifi Integration (ServiceNow to ServiceNow). This will involve making configuration changes in both the identity provider and identity consumer instances. As such, this guide will examine the changes for each instance separately on the subsequent pages.

In this guide, you will configure an additional OAuth Connection to another ServiceNow instance as part of the Incident Guide Integration (created when following the Bidirectional Asynchronous Incident Guide). The external instance will act as the Identity Provider whilst the original instance will act as the Identity Consumer.

It is assumed that the Integration has been configured, packaged and moved to the external instance (see for details). Therefore, the Process, Web Service & Integration are already in place (if not, please ensure that at least those elements are in place before continuing).

Release date: 5 September, 2024

Release date: 15 April, 2024

If you are using Advanced Datasets with query scripts, please be sure to read our.

Follow these steps to install or upgrade Unifi.

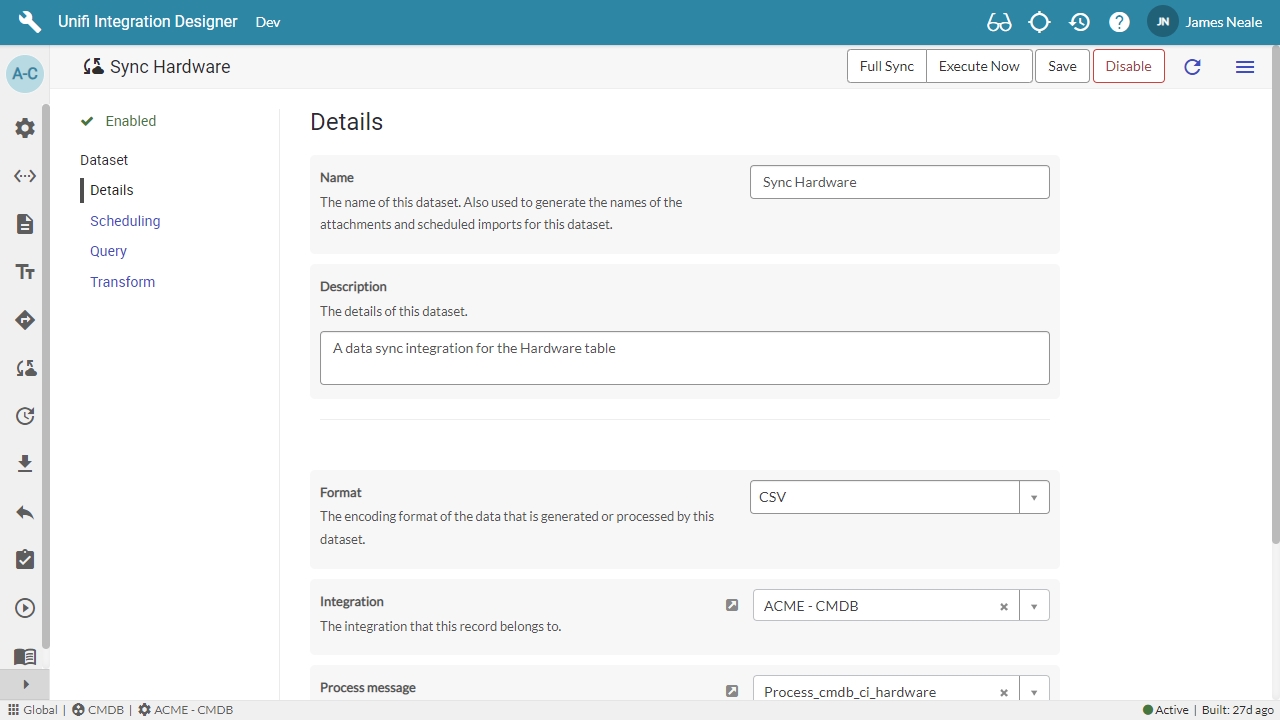

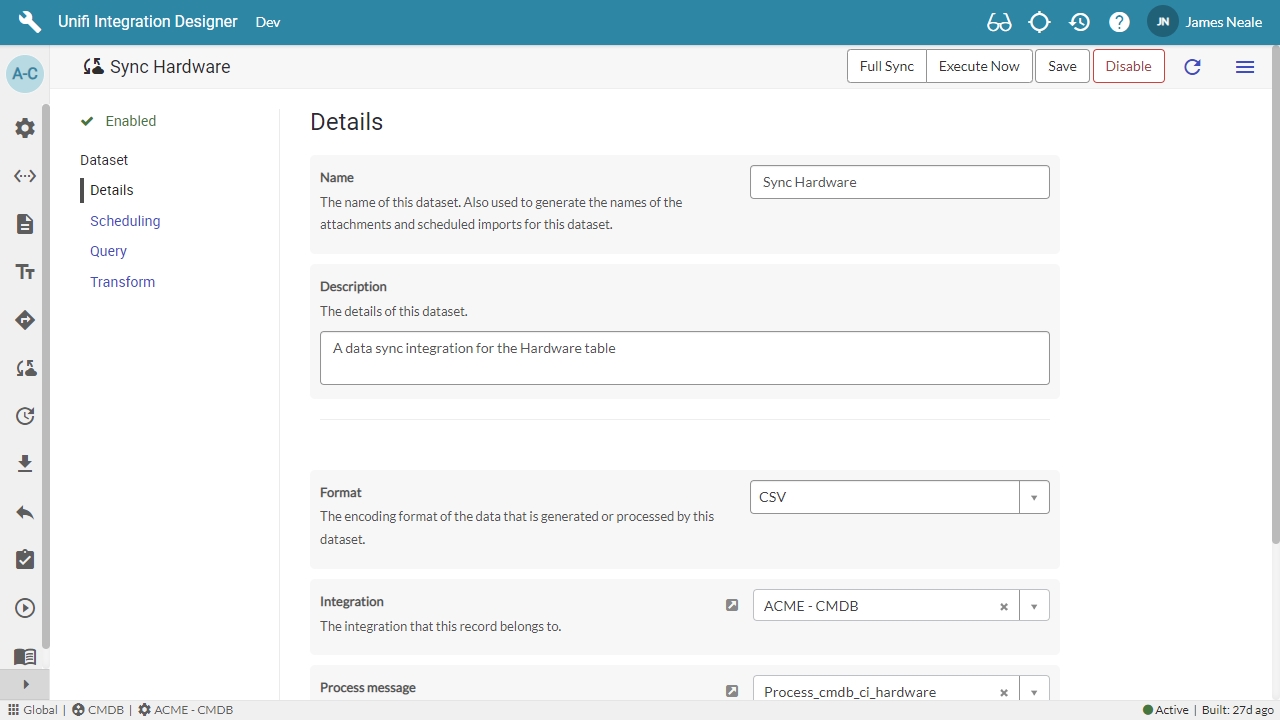

With the introduction of Datasets, it is now possible for large amounts of user, hardware, network and other supporting data to be sent to and received from remote systems. While this has been possible for some time using Pollers, they are not designed specifically for handling large amounts of data in the way that Datasets are.

Datasets provide easy configuration that is supported by platform intelligence and automation, making it incredibly easy to set up a robust and efficient mechanism for handling large sets of data.

Unifi will automatically generate the Scripted REST Resource to cater for inbound streaming. If you are using a Resource created on a release prior to v3.0, it will need to be updated manually.

This guide is supplementary to the Bidirectional Asynchronous Incident Guide and assumes the Process (& subsequent Scripted REST Resources) are already in place (See the page of that guide for details).

We will now examine the automatically generated Attachment Resource. If you are manually updating an existing Resource to cater for inbound streaming, ensure that it looks like this.

Heartbeat messages can be used to help identify if the integration is up or down. Both inbound and outbound heartbeat messages are supported.

Create a new Message and select type Heartbeat. Although no specific configuration is required, you can configure Heartbeat messages as you would any other message. The only difference is that Heartbeat transactions are bonded to the active connection.

You should only have one active Heartbeat message per integration. Activating an outbound Heartbeat message will create a scheduled job which sends the message according to the frequency set on the Integration.

No response message is required for Heartbeat messages unless you would like to customise how responses are sent and received.

Each Poll Request stores the details and outcomes of a scheduled poll.

The Poll Request is an operational record which stores the details and outcomes of a scheduled poll. It represents a single instance of a Poller being run (a Poll Request record is created each time a Poller is run).

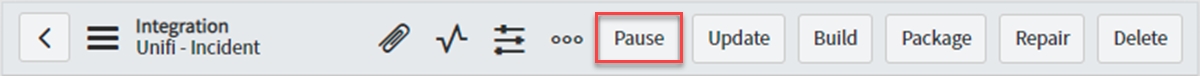

You can now Pause & Resume an Integration. This will cause Transactions to queue and then be processed in the order they were queued.

Pause is a UI Action on the Integration. Clicking it will pause the Integration, causing all subsequently created Transactions to queue in the order they were created.

Pause is different to deactivating the Connection. Deactivating the Connection would stop all processing completely, whereas Pause simply prevents the Messages from being sent until the Integration is resumed.

To pause an Integration, click the Pause UI Action in the header of the Integration record in native ServiceNow.
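The queueing behaviour of Pause and Resume can be modelled with a simple first-in, first-out queue. The sketch below is a hypothetical plain-JavaScript illustration (the `createIntegration` factory and `sendFn` callback are invented names), not how Unifi implements Pause; it only shows why Transactions created while paused are sent in creation order once the Integration is resumed.

```javascript
// Hypothetical model of Pause/Resume: while paused, new transactions queue;
// on resume they are sent in the order they were created.
function createIntegration(sendFn) {
  var paused = false;
  var queue = [];
  return {
    pause: function () { paused = true; },
    resume: function () {
      paused = false;
      // Drain the queue oldest-first.
      while (queue.length > 0) {
        sendFn(queue.shift());
      }
    },
    submit: function (transaction) {
      if (paused) {
        queue.push(transaction);
      } else {
        sendFn(transaction);
      }
    },
    queued: function () { return queue.length; }
  };
}

var sent = [];
var integration = createIntegration(function (t) { sent.push(t); });
integration.submit('T1');
integration.pause();
integration.submit('T2');
integration.submit('T3');
integration.resume();
console.log(sent); // [ 'T1', 'T2', 'T3' ]
```

Deactivating the Connection, by contrast, would correspond to dropping the `sendFn` path altogether rather than deferring it.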

Several domain-related updates: inbound receipts now use the correct domain from the transaction instead of the user's domain.

Dataset cleanup will now remove old inbound attachments from the Dataset whenever the related Bonded Attachments are cleaned.

Snapshots are now controlled by a new system property “Enable Snapshot” which is disabled by default. The property must be enabled before user testing for automated integration tests to be generated from the bonds.

The debugging property “Core Debug Mode” is now deprecated. Instead, full logging is enabled with the “Trace Mode” property.

Fixed inbound JSON datasets.

Two previously deprecated system properties have been re-purposed in this release. If you have a very old installation, please check that they have been updated and revert to the latest version if necessary:

x_snd_eb.snapshot.enable (/sys_properties.do?sys_id=a001502e1bd991106eefea4cbc4bcb8a)

x_snd_eb.designer.auto_diagnostic (/sys_properties.do?sys_id=9901502e1bd991106eefea4cbc4bcbd6)

The 'Core Debug Mode' system property has been deprecated. Enabling Trace Mode is the equivalent of setting 'Core Debug Mode' to "Trace".

New fields on Datasets can now be configured for Coalesce during creation.

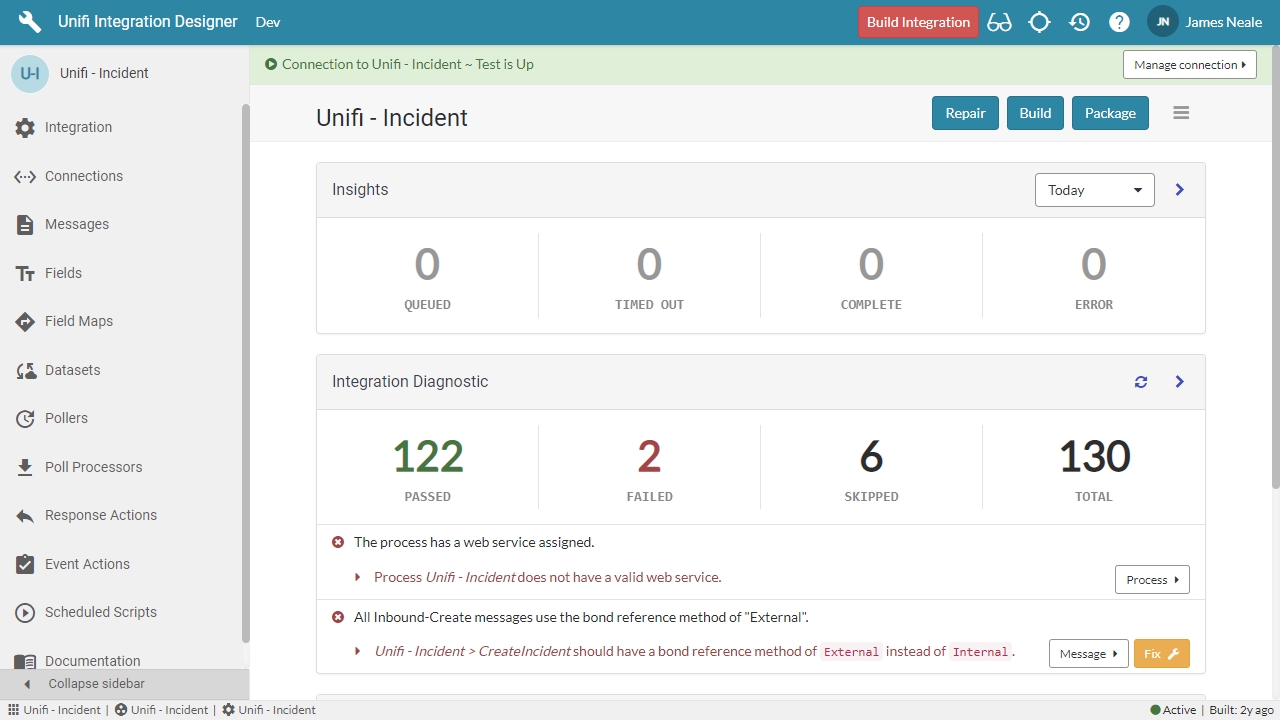

Improved the integration dashboard diagnostic that checks and validates versions of Unifi, hotfix, and global utility.

Dataset clean-up now also removes attachments used to send and receive Dataset data.

A system property has been added to disable integration diagnostics being run automatically when viewing an integration dashboard in Designer.

The Script Include containing integration diagnostics can now be modified.

Updated the Bond cleanup logic to prevent errors generated by one cleanup from stopping the entire cleanup process.

Integration diagnostics now check that the system property glide.businessrule.async_condition_check is set to false.

Limited the number of duplicate bonds being checked during diagnostic to 20. A custom limit can be defined with the system property x_snd_eb.diagnostic.duplicate_bond_limit.

Introduced a new system property to control snapshots. Snapshots are now disabled by default. This property must be enabled before testing to allow Integration Tests to be generated.

Added Process message and Send message columns to the Dataset list view in Designer for easy navigation.

Diagnostics now check for the use of current in message scripts.

Any open submenus are now automatically hidden when collapsing the sidebar in Designer.

Fixing an integration diagnostic issue from the integration dashboard will now automatically update the counters.

Support for inbound JSON processing has been addressed. Please make sure to update to the latest Global Utility for this (including versions 4.0, 4.1, and 4.2).

Improved inbound REST API processing to help identify catastrophic internal errors caused by external scripts and cross scope access issues for scoped applications dependent on Unifi.

All references to gr in the standard Unifi field maps have been removed.

Fixed payload formatter UI Action script so XML parse errors are simply ignored.

Sets the request's domain to that of the transaction when processing a receipt.

Test result chart colours now correctly match the labels.

Updates the HTTP Request domain to be the same as the transaction when processing the inbound receipt.

Removed extra parentheses from automatically generated trigger rules.

The domain of a datastore is always the same as that of the record it is attached to.

Fixed the Active/Inactive field filter on the fields list shown in a message. Selecting Active will show only active fields belonging to the message while selecting Inactive will show both inactive fields and integration-level fields which are not yet included on the message.

Fixed $stage generation in import set transform maps to use only u_ field names.

Trigger business rules, REST services and SOAP services are now easily accessible from the navigator menu.

Async receipts will now send correctly for inbound create requests that fail.

Fixed an issue with inbound request content-type processing which could result in "HttpRequestError: Unable to identify message name from request".

Fixed the Integration copy logic to prevent duplicate Dataset messages from being created.

Added a new Field Map for ServiceNow GlideList fields.

Links to open hotfix and global utility can now be found in the "Check Available Updates" window, available from the main menu in Designer.

Added the ability to search by name for the inbound user on Connections.

New Dataset field map added for choice fields.

New trigger business rules now use a specific table for the ActivityLog description rather than relying on current.getTableName().

Added a new module "Trigger Rules" to the Administration menu group to show Business Rules that are integration triggers.

Added two new modules under the Administration menu group: “REST Services” and “SOAP Services”, each linking to respective web service methods that call Unifi.

Links to Global Utility and Hotfix are now available from the "Check Available Updates" window in Designer.

Updated the way users can check for application, Hotfix and Global Utility versions to make it more useful.

Updated the Replay button on an Http Request so it does not show when the transaction is in the Ignored state.

The data source is no longer packaged with integrations.

Added a new module to show integration templates, and added a new filter to the integrations list to exclude templates from actual integrations.

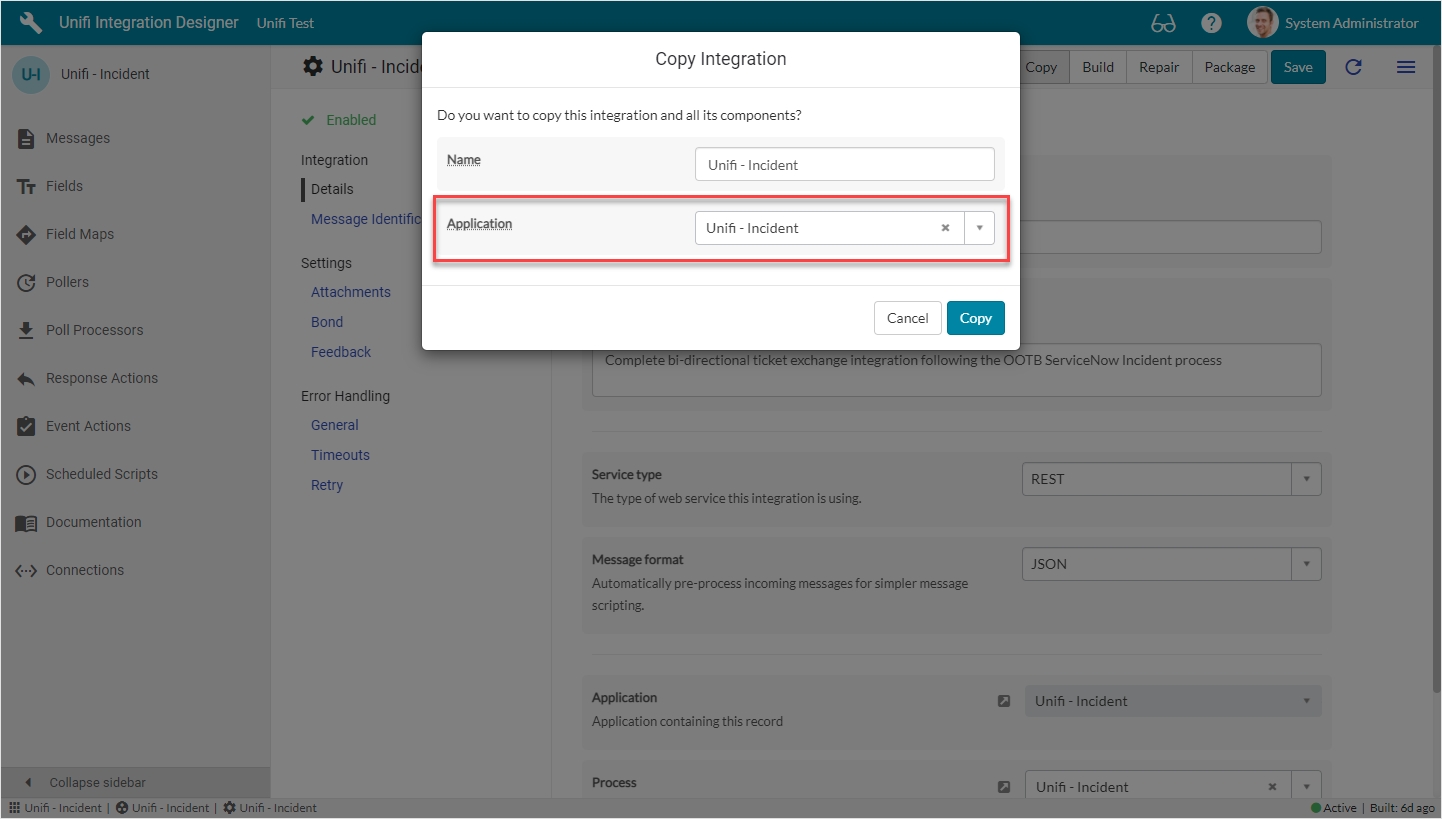

A new Copy button has been added to the Integration Dashboard.

Number field values are now automatically appended to the record title when viewing in Designer.

Improved the sidebar menu. A collapsed sidebar will show submenus on hover. Clicking on a sidebar item will navigate directly to its page.

Updated transaction processing to clear the bond and document values on the transaction when an inbound create message fails during Stage to Target processing (no bond or document records exist when an inbound create fails). This allows async receipts to be sent as expected.

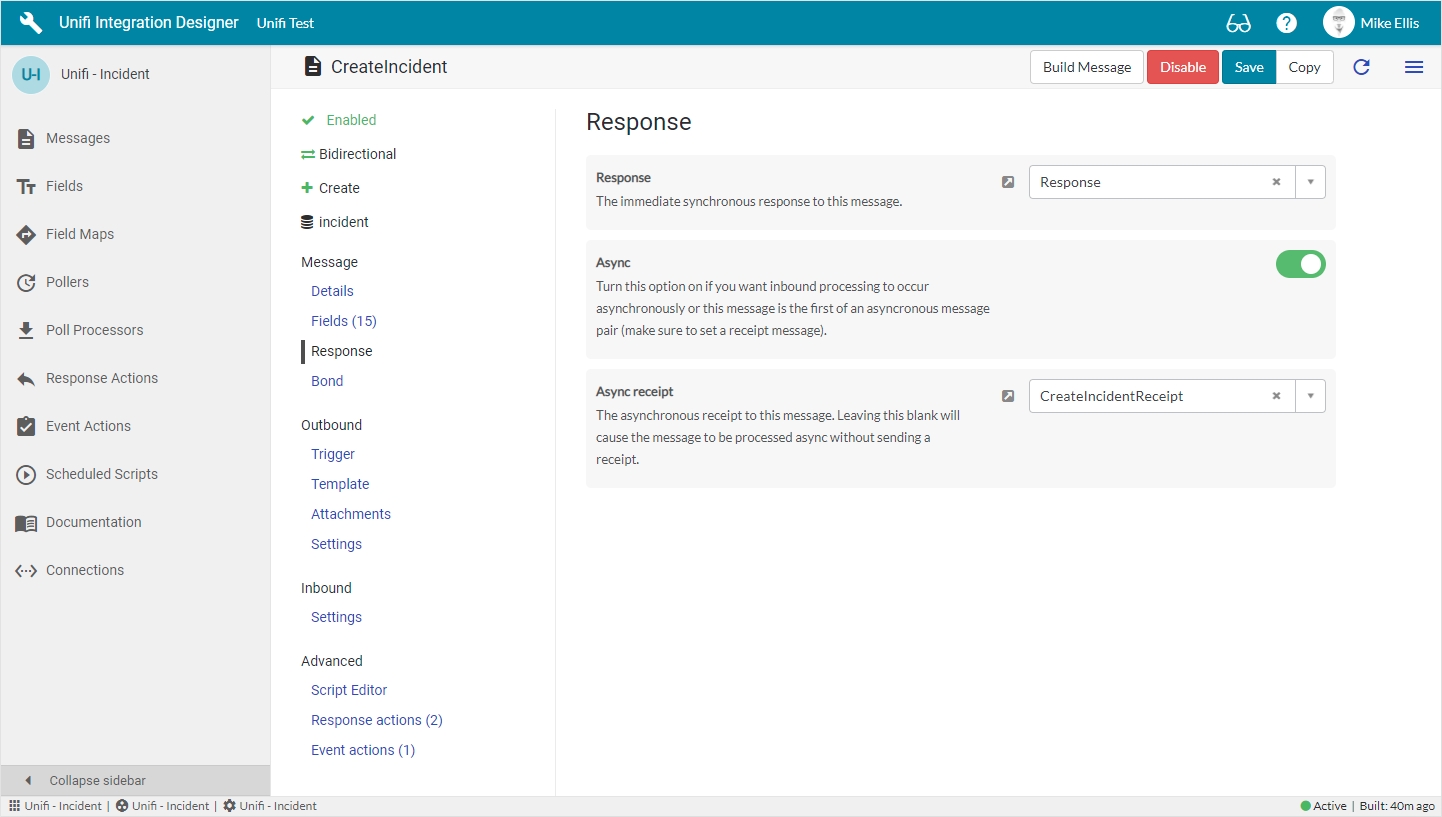

The Async flag is now available on receipt messages. By default (async off), inbound receipt messages will now be processed while the inbound request waits which will help prevent failed concurrent requests due to large amounts of business logic on high-throughput systems. Turning Async on for a receipt will cause the inbound Stage to Target processing to happen asynchronously as in previous versions.

Fixed an issue with the VersionCheck which caused an error to be thrown when no data was found.

Fixed an issue which prevented scripts using the internal Unifi DataSetProcessor to batch process large amounts of data.

Fixed an issue with the Repair integration process which would repair one transaction before exiting.

Links for hotfix and global utility now work correctly

Fixed an issue with Dataset Cleanup script action caused by the ServiceNow event object now being read only which prevented parameter updates from being passed to the next auto-queued event.

Fixed visual styling of embedded fields list in a message form in Designer.

Updated Designer to prevent announcements from breaking the layout.

Updated loading logic to prevent loading modal when another modal is already open.

Updated HTTP Request replay logic to clear errored transaction values and make it clear what happened in the latest request.

Added logic to send attachments via Unifi integrations when they are added to a record via an inbound email.

Fixed an issue with section visibility checks in Designer portal forms.

Fixed an issue with inbound request content type validation that could sometimes prevent messages from being identified.

An empty Read-only Role property will no longer allow access to records.

Fixed an issue with mandatory field form validation that would show an invalid field modal which looked broken.

Added support for processing a response payload for an outbound async message where the transaction state is Awaiting Receipt.

Fixed issue with duplicate Dataset messages being created when copying an integration containing one or more Datasets.

Updated inbound processing logic to help prevent unique key violations when creating the Bond.

Added configuration to fix navigation issues with Datasets when viewing them in Designer.

The ServiceNow Zurich release applies new platform logic to the advanced field on scheduled jobs (sysauto.advanced). As a result, in versions of Unifi prior to 4.4, there is a risk that Datasets not marked as Advanced may be reclassified as Advanced by ServiceNow based on their run type. If this occurs and no Advanced query is present, the Dataset may attempt to perform an unfiltered export.

Unifi 4.4 resolves this conflict by introducing a new internal field: Advanced query (x_snd_eb_advanced).

This field replaces Unifi’s use of the legacy advanced flag and cleanly separates Unifi’s Advanced query logic from the Zurich platform field.

A Fix Script runs immediately after upgrading to 4.4. It:

Finds all existing Datasets where the legacy Advanced (advanced) field is true

Sets the new Advanced query (x_snd_eb_advanced) field to true

Makes no other changes to Dataset logic

This ensures Unifi continues to function as before, without interference from the Zurich platform field.
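The Fix Script's behaviour can be pictured with the following plain-JavaScript sketch. It is not the actual Fix Script (which runs server-side in ServiceNow against the Dataset table); it only models the documented rule on a hypothetical in-memory array whose field names mirror the legacy `advanced` flag and the new `x_snd_eb_advanced` field.

```javascript
// Hypothetical in-memory stand-in for Dataset records.
// Copies the legacy 'advanced' flag to 'x_snd_eb_advanced' and changes nothing else.
function migrateAdvancedFlag(datasets) {
  var updated = 0;
  datasets.forEach(function (ds) {
    // Only Datasets with the legacy flag set are touched.
    if (ds.advanced === true) {
      ds.x_snd_eb_advanced = true;
      updated++;
    }
  });
  return updated;
}

var datasets = [
  { name: 'Users', advanced: true, x_snd_eb_advanced: false },
  { name: 'Groups', advanced: false, x_snd_eb_advanced: false }
];
var updatedCount = migrateAdvancedFlag(datasets);
console.log(updatedCount); // 1
```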

To prevent accidental full exports:

If a Dataset is marked as Advanced (via the new field)

But no Advanced query is defined → Unifi will block execution and report a validation error.

This ensures Dataset behaviour remains predictable and prevents unintended bulk exports.
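The guard described above amounts to a single pre-export check. The sketch below is a hypothetical plain-JavaScript rendering of that rule, not Unifi's code; in particular, `advanced_query` is an illustrative property name standing in for wherever the Dataset's Advanced query is actually stored.

```javascript
// Hypothetical Dataset shape: { name, x_snd_eb_advanced, advanced_query }.
// 'advanced_query' is an illustrative name, not necessarily the real field.
function validateDatasetForExport(dataset) {
  var query = dataset.advanced_query;
  var hasQuery = typeof query === 'string' && query.trim() !== '';
  // Advanced with no Advanced query would mean an unfiltered export: block it.
  if (dataset.x_snd_eb_advanced === true && !hasQuery) {
    return {
      ok: false,
      error: 'Dataset "' + dataset.name + '" is marked as Advanced but has no Advanced query; export blocked.'
    };
  }
  return { ok: true };
}

console.log(validateDatasetForExport({ name: 'Users', x_snd_eb_advanced: true, advanced_query: '' }).ok); // false
console.log(validateDatasetForExport({ name: 'Users', x_snd_eb_advanced: true, advanced_query: 'active=true' }).ok); // true
```

Failing closed (refusing to run) rather than falling back to "export everything" is what keeps the behaviour predictable.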

If you upgrade to Zurich before upgrading Unifi:

ServiceNow may automatically treat certain Datasets as Advanced

This depends on the Dataset run type applied by the platform

Because no Advanced query exists for these Datasets, an unfiltered export may occur

This behaviour does not originate from Unifi; it results from the Zurich platform logic applied to sysauto.advanced.

We strongly recommend upgrading to Unifi 4.4 before upgrading to Zurich. This ensures all Dataset records are safely aligned before Zurich applies its new logic to sysauto.advanced.

If you upgrade to Zurich before upgrading to Unifi 4.4, there is a risk that data will be exported without the intended filters in place.

In either case, as a precaution, please follow these suggested steps to avoid any issues:

Turn off all dataset integrations before upgrading the instance

After the upgrade, ensure that the new "Advanced query" flag is set correctly for each dataset (it will be in the same place that the old "Advanced" flag was)

Re-enable each dataset integration

If you have any further questions or require assistance, please contact ShareLogic Support.

When an outbound heartbeat message is sent and fails, the integration will be paused and its Status marked as "Down". This will force all outbound messages to be queued. When a future outbound heartbeat message is successful, the integration will be resumed and its Status marked as "Up".

The frequency of outbound heartbeat messages is controlled by the Heartbeat Frequency setting on the Integration. By default, this is set to 300 seconds (5 minutes), meaning a heartbeat is sent every 5 minutes to the endpoint specified by the connection and message.

A heartbeat is seen to fail when it does not receive a 2xx status code response, e.g. 200. If you need to support other status codes, you can use Response Actions to intercept and modify the request response.

To help identify heartbeat messages, all outbound heartbeat messages contain the header x-snd-eb-heartbeat with a value of 1.
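The outbound heartbeat rules above can be summarised as a small state transition. The following is a hypothetical plain-JavaScript model, not Unifi's implementation: the `integration` object shape is an assumption, it treats only 2xx codes as success (Response Actions could change that in a real integration), and it simply resumes on success.

```javascript
// A heartbeat succeeds only when the response status code is 2xx.
function isHeartbeatSuccess(statusCode) {
  return statusCode >= 200 && statusCode < 300;
}

// Hypothetical integration shape: { name, status: 'Up' | 'Down', paused: boolean }.
// A failed heartbeat pauses the integration so outbound messages queue;
// a later successful heartbeat resumes it.
function applyHeartbeatResult(integration, statusCode) {
  var up = isHeartbeatSuccess(statusCode);
  return Object.assign({}, integration, {
    status: up ? 'Up' : 'Down',
    paused: !up
  });
}

// Default Heartbeat Frequency: 300 seconds, i.e. one heartbeat every 5 minutes.
var HEARTBEAT_FREQUENCY_SECONDS = 300;
var heartbeatsPerHour = 3600 / HEARTBEAT_FREQUENCY_SECONDS; // 12

var integration = { name: 'Incident Guide', status: 'Up', paused: false };
integration = applyHeartbeatResult(integration, 503);
console.log(integration.status, integration.paused); // Down true
integration = applyHeartbeatResult(integration, 200);
console.log(integration.status, integration.paused); // Up false
```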

You can allow third party systems to send a heartbeat request. A Heartbeat message configured for Inbound processing is required. It can be configured to operate similarly to any other message, although no additional configuration is required.

Inbound heartbeats will run through the full connection process for the integration. By default, a 200 response is returned when authentication and Heartbeat message processing have been successful.

POST https://myinstancename.service-now.com/api/x_snd_eb/unifi/incident

Example to send a Heartbeat message to check if the API is available.

Headers:

| Name | Type | Description |
| --- | --- | --- |
| x-snd-eb-message-name (required) | String | Your heartbeat message name, e.g. Heartbeat |
| x-snd-eb-heartbeat | Integer | 1 |
| Authorization (required) | String | Set up authorization as required by your connection. |
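For a third-party system calling this endpoint, the request might be assembled as in the sketch below (Node.js). It builds but does not send the request. The instance name, message name and credentials are placeholders, and Basic authentication is an assumption: use whatever your Connection actually requires. The endpoint path is the example one shown above.

```javascript
// Builds (but does not send) an inbound Heartbeat request for Unifi.
// Instance name, message name and credentials are placeholders.
function buildHeartbeatRequest(instanceName, messageName, username, password) {
  var url = 'https://' + instanceName + '.service-now.com/api/x_snd_eb/unifi/incident';
  // Assumes the Connection uses Basic authentication; adjust as required.
  var token = Buffer.from(username + ':' + password).toString('base64');
  return {
    url: url,
    options: {
      method: 'POST',
      headers: {
        'x-snd-eb-message-name': messageName,
        'x-snd-eb-heartbeat': '1',
        'Authorization': 'Basic ' + token
      }
    }
  };
}

var req = buildHeartbeatRequest('myinstancename', 'Heartbeat', 'unifi.user', 'secret');
console.log(req.url);
// To send it from Node.js 18+ (global fetch), something like:
// fetch(req.url, req.options).then(function (res) { console.log(res.status); });
```

A 200 status on the response would indicate that authentication and Heartbeat message processing succeeded.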

Unifi is exclusively available through the ServiceNow Store with a limited trial or paid subscription.

To install Unifi on your instance, you will need to ensure it has been given entitlement to the application. You must do this through the ServiceNow Store.

Once you have set up entitlement, you can use the Application Manager in your ServiceNow instance to install and upgrade Unifi. You can find more information on how to Install a ServiceNow Store Application in the ServiceNow Product Documentation.

When upgrading, make sure you also install the latest versions of Global Utility and Hotfix.

Unifi has some powerful features that will only work with access to the global scope. Access is granted through the Unifi Global Utility.

Full details can be found on the Global Utility page.

Unifi has the ability to be patched between releases by using a special Script Include called hotfix.

Full details can be found on the Hotfixes page.

You're good to start building your first integration or import one you already have.

If you’re new to Unifi then you might like to follow one of our integration guides. You can access the Integration Guides from the menu.

Global system settings for Unifi.

The Properties module is where you will find the global system settings for the Unifi application.

Control all integrations using this master switch.

Allow users with this role to read bond records for informational purposes. Intended for giving access using related bond lists on the process tables. It does not expose the application menu. For greater control, use the x_snd_eb.user role.

Choose the required logging level for the application: 'debug' writes all logs to the database, 'info' writes informational, warning and error logs to the database.

Debug mode is extremely resource intensive. Only choose 'debug' for troubleshooting when session debugging is not adequate.

Use for general integration debugging to capture debug messages in the console in addition to info, warn and error.

Enable input/output trace logs for functions that have been wrapped for tracing.

Requires Debug mode to be enabled.

Enable input/output trace logs for core application methods. This produces extremely verbose logs from all core scripts which will help ShareLogic Ltd if you ever run into trouble with the app.

Not required for general integration debugging.

The choices are:

Off: No debug mode is active. Performance is not affected.

Silent: Enhanced exception handling and error logs. Performance is slightly affected.

Trace: Functional profiling in addition to enhanced exception handling and error logs. Performance is affected. Requires Trace mode to be enabled.

Turns on Activity Logs for Service Portal interfaces.

How many days should Activity Logs be kept before being removed?

Configure which columns are always shown on lists in the Unifi Integration Designer portal. Multiple columns should be separated by a comma.

How many days should orphaned transactions be kept before they are removed?

What is the maximum number of orphaned transactions to remove at any one time?

How many hours should successful heartbeat transactions be kept for before they are removed?

How many days should failed heartbeat transactions be kept for before they are removed?

Enables logs and payloads generated by Unifi transaction processing to be attached to transactional records.

Control whether to allow files to be created in the Unifi scope on Unifi tables (prefix x_snd_eb_)

Each Dataset Request stores the details and outcomes of a dataset import or export.

The Dataset Request is an operational record which stores the details and outcomes of an incoming dataset import or a scheduled dataset export.

A Dataset export can create many Dataset Requests depending on the dataset query and batch size.

A Dataset import is created when an incoming Dataset message containing an attachment with data is received.
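To see why one export can produce several Dataset Requests, the sketch below splits the records matched by a query into fixed-size batches, one request per batch. This is a hypothetical simplification in plain JavaScript: Unifi's actual limits may involve more than a single batch size, and the record identifiers are invented.

```javascript
// Hypothetical illustration: one Dataset Request per batch of matched records.
function planExportBatches(recordIds, batchSize) {
  if (!Number.isInteger(batchSize) || batchSize <= 0) {
    throw new Error('batchSize must be a positive integer');
  }
  var batches = [];
  for (var i = 0; i < recordIds.length; i += batchSize) {
    batches.push(recordIds.slice(i, i + batchSize));
  }
  return batches;
}

var ids = [];
for (var n = 1; n <= 2500; n++) {
  ids.push('record-' + n);
}
var batches = planExportBatches(ids, 1000);
console.log(batches.length); // 3
console.log(batches.map(function (b) { return b.length; })); // [ 1000, 1000, 500 ]
```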

This is particularly handy in a uni-directional integration where polling the remote system is necessary. You can store data such as:

The last time a request was made

Identifiers that have been seen before

Watermarks

All Unifi model objects have data stores built in. Calling these methods will read and write data stores on the record that the object represents. Note: not all objects available in Unifi scripts are Unifi model objects.

Use getData and setData to work with simple string-like data.

You can also work with objects just as easily by using getDataObject and setDataObject. These functions automatically take care of encoding the object for database storage and decoding it for JavaScript usage again.

Data stores can be used with any record in ServiceNow using the Unifi dataStore API. We recommend prefixing dataStore with the Unifi scope x_snd_eb to prevent cross-scope issues, e.g. x_snd_eb.dataStore.get(document, "DomainID").

Get a string value from a data store. The default_value parameter will be returned if no data store exists with the specified key.

Retrieve a data value and parse it as JSON to return an object. The default_object parameter will be returned if no data store exists with the specified key.

Remove a single data store.

Remove all data stores for a document.

Store a key/value pair against a document. An optional description can be provided for future reference.

Encode an object as JSON and store the value against a document. An optional description can be provided for future reference.
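To show what the object variants add over the string variants, here is a small in-memory analogue in plain JavaScript. It is not the x_snd_eb.dataStore implementation; it only models the behaviour described above (string values, JSON encoding for objects, a default returned when a key is missing, removing one key or all keys for a document) so it can run outside ServiceNow. Documents are identified by a hypothetical sys_id string.

```javascript
// In-memory analogue of a per-document key/value data store (hypothetical).
function createDataStore() {
  var stores = {}; // { docId: { key: { value, description } } }

  function bucket(docId) {
    if (!stores[docId]) stores[docId] = {};
    return stores[docId];
  }

  return {
    set: function (docId, key, value, description) {
      bucket(docId)[key] = { value: String(value), description: description || '' };
    },
    get: function (docId, key, defaultValue) {
      var entry = stores[docId] && stores[docId][key];
      return entry ? entry.value : defaultValue;
    },
    // Objects are stored as JSON text and parsed again on the way out.
    setFromObject: function (docId, key, obj, description) {
      this.set(docId, key, JSON.stringify(obj), description);
    },
    getAsObject: function (docId, key, defaultObject) {
      var raw = this.get(docId, key);
      return raw === undefined ? defaultObject : JSON.parse(raw);
    },
    // With a key: remove one entry. Without a key: remove all for the document.
    remove: function (docId, key) {
      if (key === undefined) {
        delete stores[docId];
        return;
      }
      if (stores[docId]) delete stores[docId][key];
    }
  };
}

var store = createDataStore();
var doc = 'a1b2c3'; // hypothetical record sys_id
store.set(doc, 'MyStr', 'My value', 'This is my value');
store.setFromObject(doc, 'MyObj', { foo: 'bar' }, 'Hello world!');
console.log(store.get(doc, 'MyStr'));             // My value
console.log(store.getAsObject(doc, 'MyObj').foo); // bar
store.remove(doc);
console.log(store.get(doc, 'MyStr', 'none'));     // none
```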

The following code is extracted from the automatically generated Attachment Scripted REST Resource:

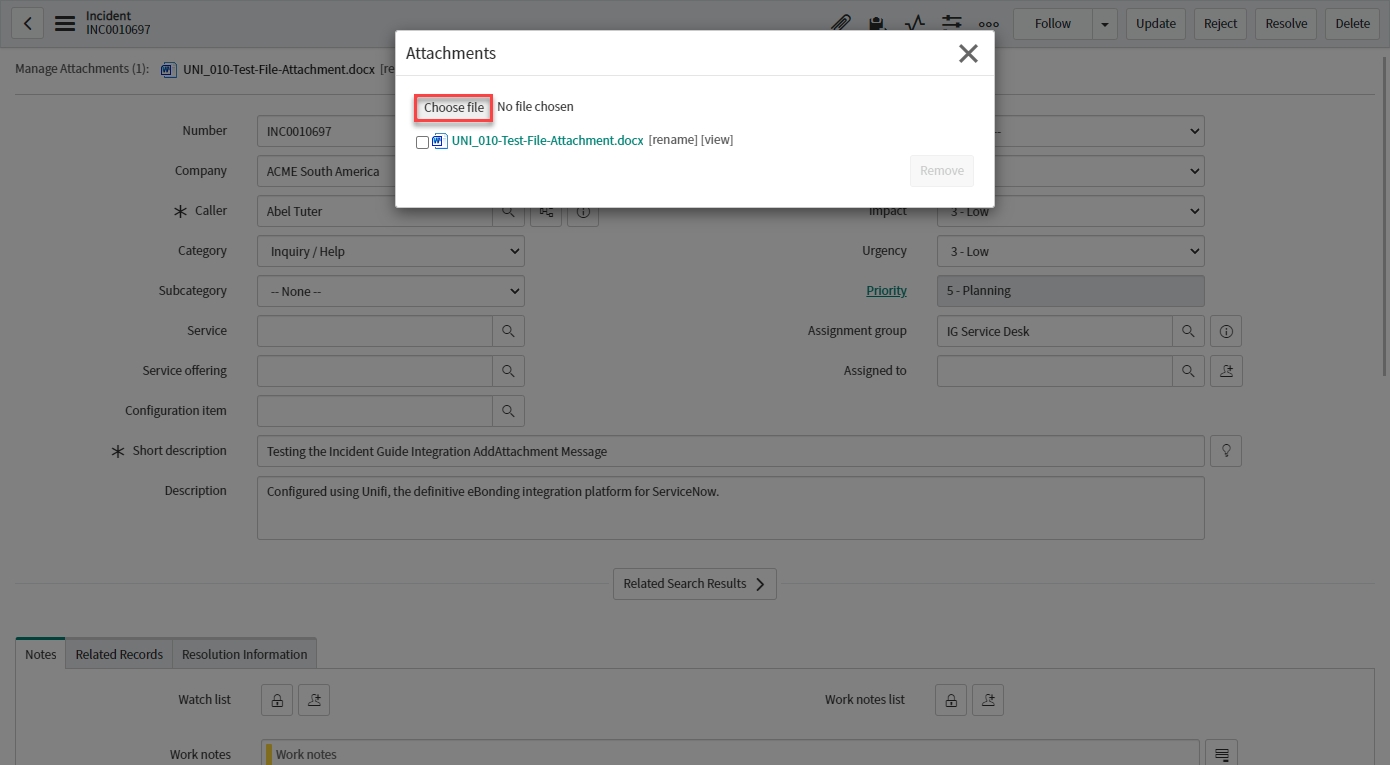

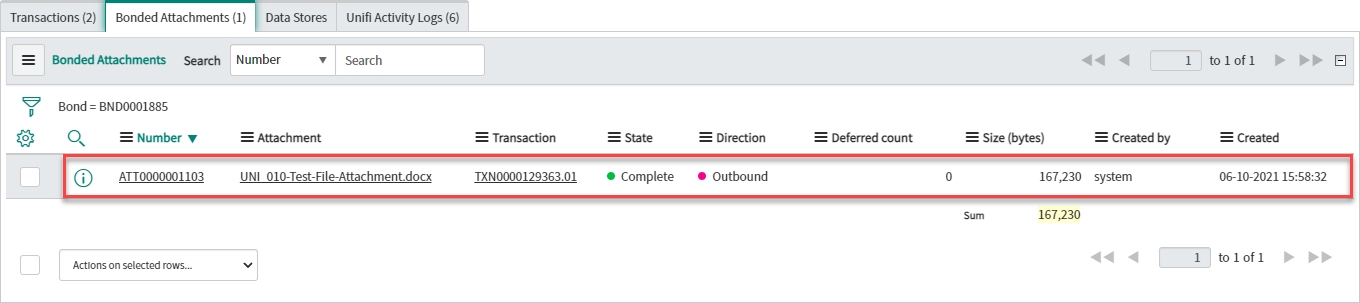

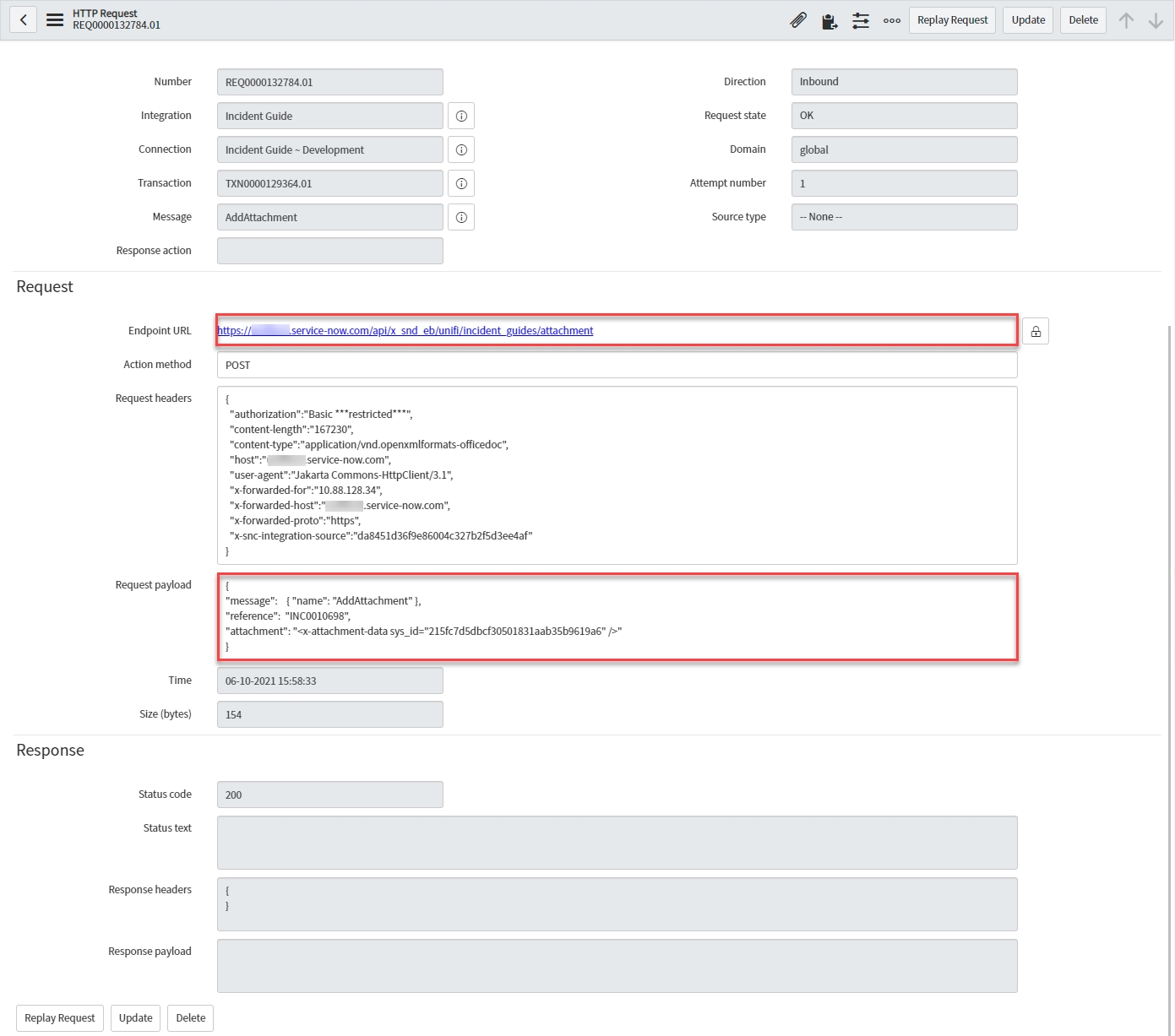

With the Message & Scripted REST Resource in place, you are now ready to Test the AddAttachment Message.
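On the sending side, a streamed attachment for this resource carries the raw file bytes as the request body, the MIME type in the Content-Type header, and file_name and reference as query parameters, since those are what the resource reads. The Node.js sketch below builds (but does not send) such a request. The resource URL, reference value and Authorization value are hypothetical placeholders; take the real path from your own Scripted REST Resource record.

```javascript
// Builds (but does not send) a streamed attachment request for the
// Attachment Scripted REST Resource. resourceUrl is a placeholder.
function buildStreamedAttachmentRequest(resourceUrl, fileName, reference, mimeType, fileBytes, authHeader) {
  var url = new URL(resourceUrl);
  // The resource reads these two query parameters; both are required.
  url.searchParams.set('file_name', fileName);
  url.searchParams.set('reference', reference);
  return {
    url: url.toString(),
    options: {
      method: 'POST',
      headers: {
        // The resource uses Content-Type as the attachment's MIME type.
        'Content-Type': mimeType,
        'Authorization': authHeader
      },
      body: fileBytes // raw file content, not Base64
    }
  };
}

var attachmentRequest = buildStreamedAttachmentRequest(
  'https://myinstancename.service-now.com/api/x_snd_eb/incident_guides/attachment', // hypothetical path
  'error log.txt',
  'INC0010001', // hypothetical reference value
  'text/plain',
  Buffer.from('Example file content'),
  'Basic <credentials>'
);
console.log(attachmentRequest.url);
```

If file_name, reference or Content-Type is missing, the resource shown below returns early without creating the attachment.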

```javascript
// Store the current log count (data stores hold string-like values)
var log_count = bond.getData('log_count');
bond.setData('log_count', String(parseInt(log_count || '0', 10) + 1));
```

```javascript
// Store the logs that have been seen in an array
var logs_seen = bond.getDataObject('logs_seen') || []; // e.g. [1375, 1399, 1748]
logs_seen.push(2140);
bond.setDataObject('logs_seen', logs_seen);
```

```javascript
// Get a string value
x_snd_eb.dataStore.get(current, "MyStr");
// Get a value parsed from JSON as an object
x_snd_eb.dataStore.getAsObject(current, "MyObj");
// Remove a single data store
x_snd_eb.dataStore.remove(current, "MyStr");
// Remove all data stores for the document
x_snd_eb.dataStore.remove(current);
// Store a string value with an optional description
x_snd_eb.dataStore.set(current, "MyStr", "My value", "This is my value");
// Store an object as JSON with an optional description
x_snd_eb.dataStore.setFromObject(current, "MyObj", {foo: "bar"}, "Hello world!");
```

```javascript
(function process(/*RESTAPIRequest*/ request, /*RESTAPIResponse*/ response) {

    var helper = new x_snd_eb.RestHelper('incident_guides'); // the API name of the Unifi Process

    processAttachment(helper.getRequest().getRecord());

    helper.processRequest(request, response, 'POST'); // the HTTP method of the resource

    /**
     * This scripted REST API allows an attachment to be sent into Unifi in a
     * similar way to the OOB api/now/attachment REST API, but it processes it
     * in Unifi.
     */
    function processAttachment(target_record) {

        var file_name = request.queryParams.file_name;
        var reference = request.queryParams.reference;
        var mime_type = request.getHeader('Content-Type');

        // All three values are required to create the attachment
        if (!file_name || !reference || !mime_type) return;

        var ga = new GlideSysAttachment();

        // save the body to an attachment on the request
        var attachment_id = ga.writeContentStream(target_record, file_name, mime_type, request.body.dataStream);

        // return custom payload for the body to process
        helper._getRequestBody = function _getRequestBody() {
            return '{\n' +
                '"message": { "name": "AddAttachment" },\n' +
                '"reference": "' + reference + '",\n' +
                '"attachment": "<x-attachment-data sys_id="' + attachment_id + '" />"\n' +
                '}';
        };
    }

})(request, response);
```

Datasets require the latest Unifi Global Utility to build the necessary configuration correctly.

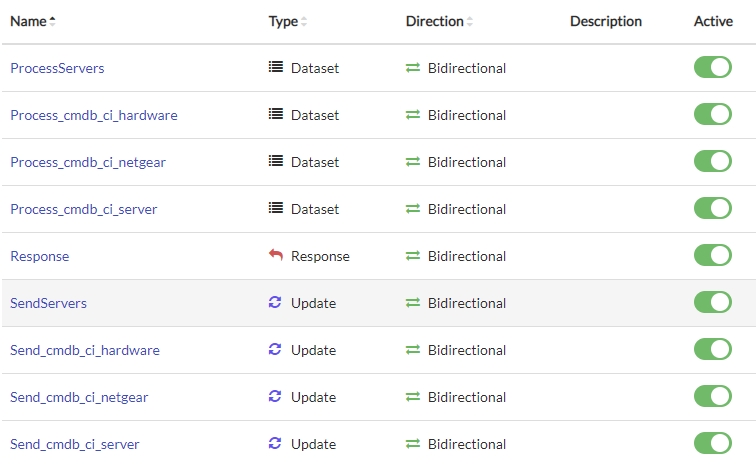

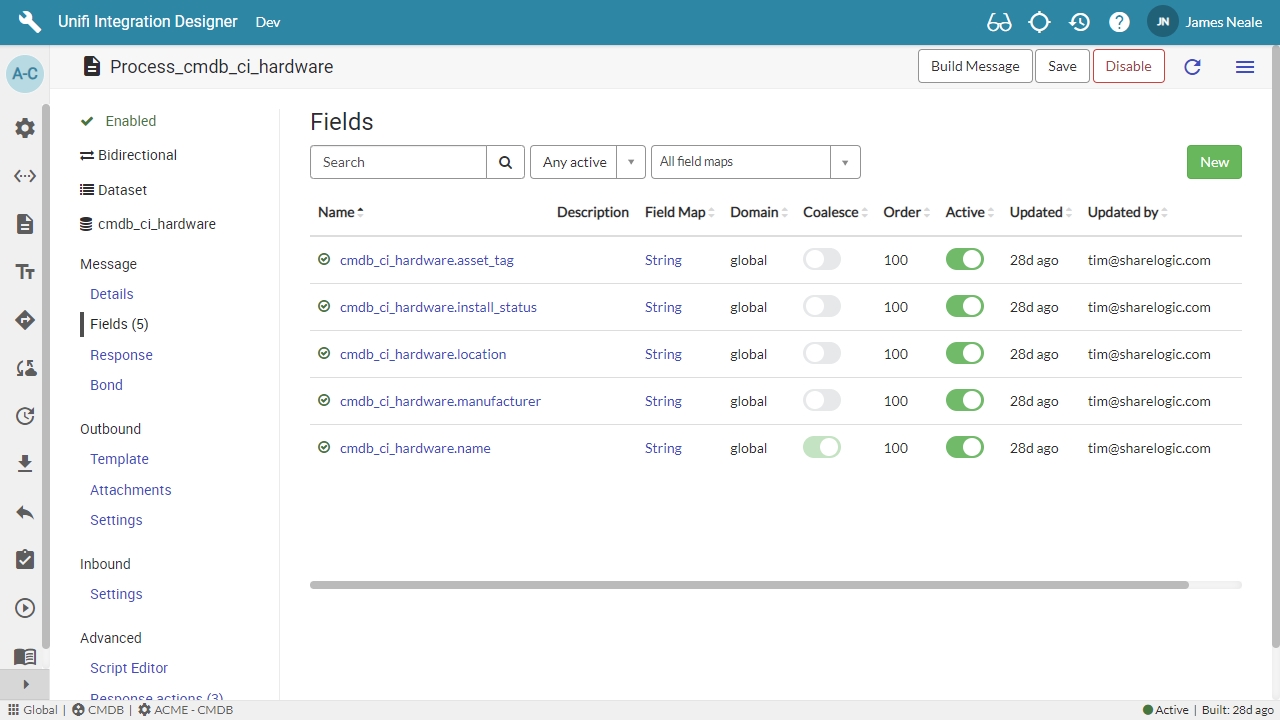

Each Dataset will create the following configuration:

The Send message is used for sending the data in an attachment to the other system. The name is automatically generated using the prefix "Send" and the name of the table.

The Process message is used for processing the record data both inbound and outbound. The name is automatically generated using the prefix "Process" and the name of the table.

The Import set table is used for staging inbound data. The name is automatically generated using the prefix "dataset" with the sys_id of the dataset.

The Scheduled import record is automatically created for processing import data. It is executed when an inbound Dataset Request has created the import set rows and is ready to be processed.

The Transform map and associated coalesce fields are used for processing the import set.

Data is automatically collected, transformed, packaged and sent to another system on a schedule. Each export creates one or more Dataset Requests depending on the number of records and a series of limits configurable on the Dataset.
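As a rough illustration of how one export could be split into several Dataset Requests, the sketch below chunks a list of records into batches. The limit names `maxRecordsPerRequest` and `maxRequests` are hypothetical, chosen for illustration only; check the Dataset form for the actual limits Unifi exposes.

```javascript
// Hypothetical sketch: split the records selected for one export into batches,
// one batch per Dataset Request. maxRecordsPerRequest and maxRequests are
// illustrative names, not actual Unifi Dataset fields.
function planDatasetRequests(records, maxRecordsPerRequest, maxRequests) {
  if (!(maxRecordsPerRequest >= 1)) {
    throw new RangeError("maxRecordsPerRequest must be at least 1");
  }
  const batches = [];
  for (let i = 0; i < records.length; i += maxRecordsPerRequest) {
    // In this sketch, records beyond the request cap are left for a later export.
    if (maxRequests && batches.length >= maxRequests) break;
    batches.push(records.slice(i, i + maxRecordsPerRequest));
  }
  return batches;
}

// Usage: 5 records with a limit of 2 per request produce 3 requests.
const batches = planDatasetRequests(["r1", "r2", "r3", "r4", "r5"], 2);
console.log(batches.length); // 3
```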

Unifi automatically creates a Process message which can be used with outbound Fields and Field maps (via the Source to Stage and Stage to Request Message Scripts) to extract the data from the specified records.

The extracted data is written to an attachment which is then sent using the Send message which is also automatically created by Unifi.

When an inbound Send message (as specified on the Dataset) with a data attachment is received, the attachment will be processed with each record being inserted into an import set table directly related to the Dataset. The import set table is automatically created and maintained during the Integration Build process.

Unifi uses ServiceNow Import Sets since they offer a well understood mechanism for importing data with performance benefits. The mapping is automatically managed by Unifi and handled through a transform script which uses inbound Fields and Field maps (via the Stage to Target Message Script) to transform the data and write it to the target records.

The process message related to the Dataset is used for inbound and outbound mapping and transformation.

Simply create the fields that you want to export/import and configure them in the same way you would for any other Unifi message. Note: the field maps will likely need to be specific to the dataset fields.

Use the Coalesce field (specific to Dataset messages) to indicate which field should be used for identifying existing records to update during inbound processing.
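The coalesce behaviour can be modelled in plain JavaScript: an incoming row whose coalesce value matches an existing target record updates that record, and any other row is inserted. This is a simplified sketch of the general idea, assuming a single coalesce field; in practice the ServiceNow transform map performs the matching, and the function and field names here are hypothetical.

```javascript
// Hypothetical sketch of coalesce-based import: rows whose coalesce value
// matches an existing target record update it; all other rows are inserted.
function applyImport(targets, rows, coalesceField) {
  const byKey = new Map();
  targets.forEach(function (t) { byKey.set(t[coalesceField], t); });

  const result = { inserted: 0, updated: 0 };
  rows.forEach(function (row) {
    const existing = byKey.get(row[coalesceField]);
    if (existing) {
      Object.assign(existing, row); // update the matched record in place
      result.updated++;
    } else {
      const created = Object.assign({}, row);
      targets.push(created);
      byKey.set(created[coalesceField], created);
      result.inserted++;
    }
  });
  return result;
}

const targets = [{ number: "INC001", state: "New" }];
const summary = applyImport(targets, [
  { number: "INC001", state: "Resolved" },
  { number: "INC002", state: "New" }
], "number");
console.log(summary.inserted, summary.updated); // 1 1
```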

An Info Message will be displayed, stating the Integration is paused.

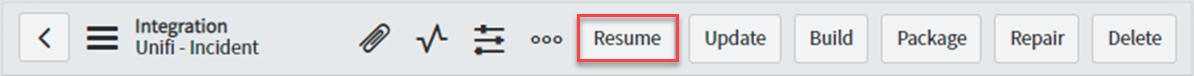

Once an Integration has been paused, the Resume UI Action is available on the Integration. Clicking it will restart the queue, processing the queued Transactions in order.

To resume an Integration, click the Resume UI Action in the header of the Integration record in native ServiceNow.

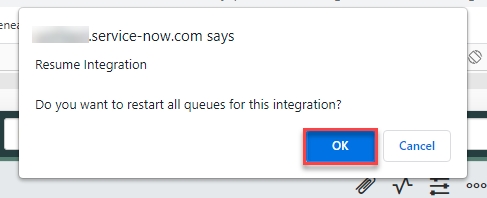

A modal is displayed asking to confirm Resume Integration. Click OK.

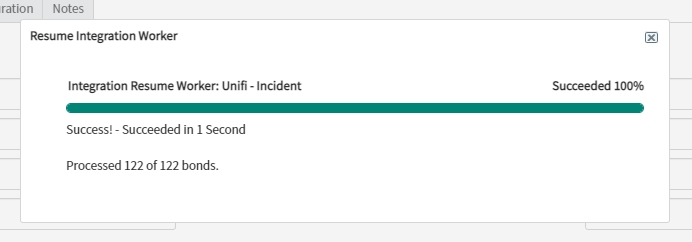

The Resume Integration Worker modal is displayed, showing progress.

This feature is not intended for dealing with errors (use 'Ignore' in that case). The primary use case for Pause and Resume is planned outages, for example when the other system is undergoing planned maintenance.
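The pause and resume behaviour can be pictured as a simple in-memory queue: while paused, new Transactions accumulate; on resume, they are processed in the order they were queued. This is a hypothetical model for illustration, not Unifi's implementation.

```javascript
// Hypothetical in-memory model of an Integration's outbound queue.
class IntegrationQueue {
  constructor() {
    this.paused = false;
    this.queue = [];
    this.sent = [];
  }

  enqueue(transaction) {
    this.queue.push(transaction);
    this.process();
  }

  pause() {
    this.paused = true;
  }

  resume() {
    this.paused = false;
    this.process(); // drain anything queued while paused, in order
  }

  process() {
    while (!this.paused && this.queue.length > 0) {
      this.sent.push(this.queue.shift());
    }
  }
}

const q = new IntegrationQueue();
q.enqueue("TX001");
q.pause();           // e.g. the other system has planned maintenance
q.enqueue("TX002");
q.enqueue("TX003");
console.log(q.sent.length); // 1
q.resume();
console.log(q.sent.join(",")); // TX001,TX002,TX003
```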

Here you will find details of what's changed in this release, including new features & improvements, deprecated features and general fixes.

Welcome to the release notes for Unifi - Version 2.2. Please have a read through to see new features and fixes that have been added.

Please note that, as with every release, there may be some changes that are not entirely compatible with your existing integrations. While we do everything we can to make sure you won’t have to fix your integrations when upgrading, we strongly encourage all our customers to perform full end-to-end tests of their integrations before upgrading Unifi in Production.

We also highly recommend aligning your Unifi upgrade with your ServiceNow upgrade. This means you only need to test your integrations one time rather than once for the ServiceNow upgrade and once for the Unifi upgrade.

We really appreciate feedback on what we’re doing - whether it’s right or wrong! We take feedback very seriously, so if you feel you’d give us anything less than a 5 star rating, we’d love to hear from you so we can find out what we need to do to improve!

If you would rate us 5 stars, and haven’t left a review on the ServiceNow Store yet, we’d be grateful if you would head over there to leave us your feedback. It only takes a few minutes and really does help us a lot. Go on, you know you want to!

We’ve tested and verified that Unifi continues to work as expected on the latest Paris release of ServiceNow.

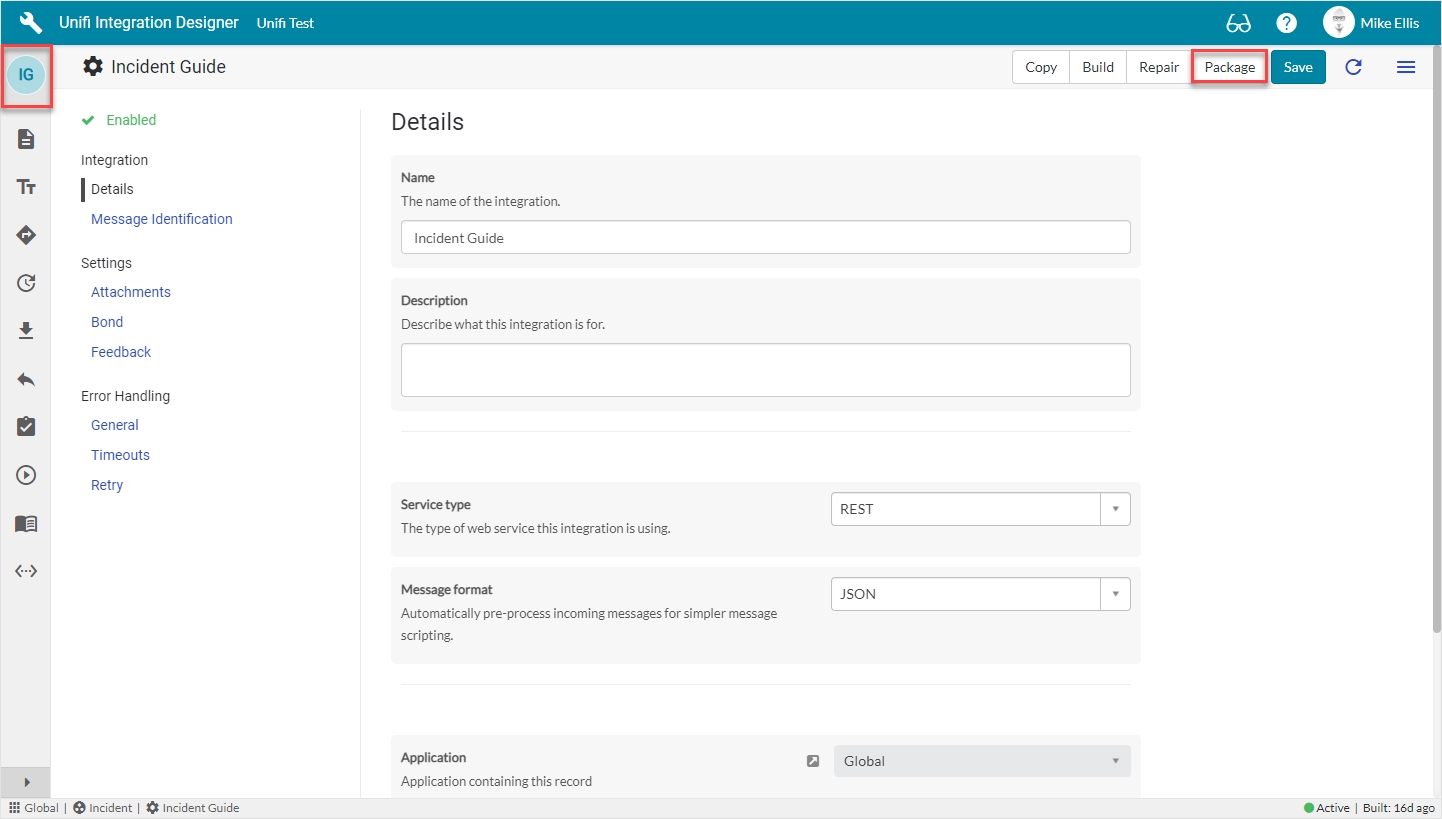

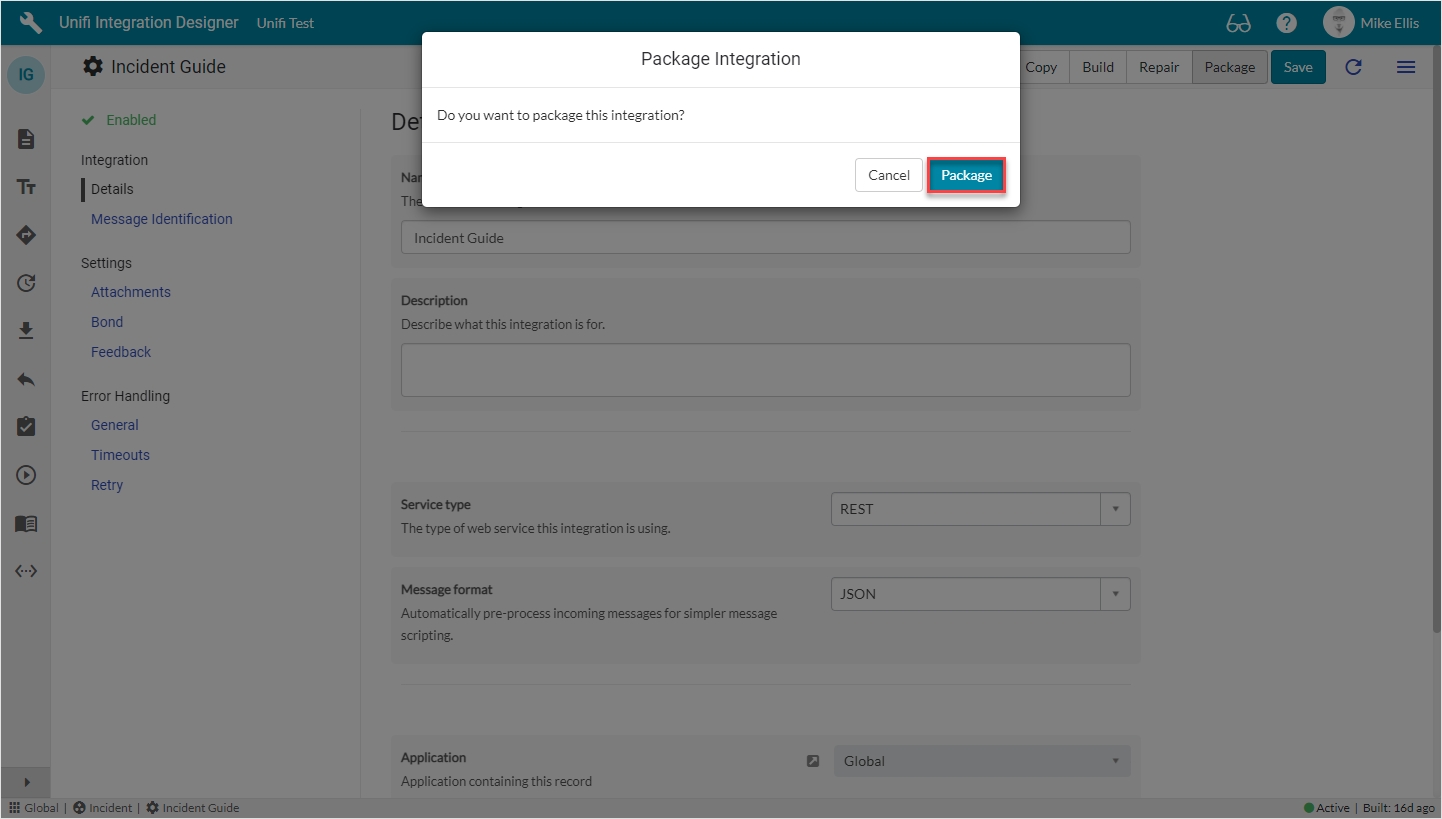

We’ve introduced a brand new feature that makes exporting and migrating integrations seriously easy, 1-click easy in fact. You can now click the “Package” button on an integration in the Portal and the system will gather all the related files that make that integration work and put them in a brand new update set and download it to your desktop. This feature does require the latest version of the Unifi Global Utility.

An API name is automatically generated when creating a new Process. [UN-192]

Integration Diagnostic now checks for global utility installation and version. [UN-369]

Millisecond time fields have been added to transactional records. [UN-541]

Integration Diagnostic now checks for hotfix version. [UN-682]

The display value for Connections now includes the Integration. [UN-347]

Bond reference method is no longer available for Response and Receipt type Messages. [UN-603]

Integration no longer controls number of attachments per message as this is done by the message itself. [UN-651]

Added the connection variable page to Portal. [UN-656]

Copying a Connection Variable now works. [UN-657]

Fixed icon alignment on Messages list in UID. [UN-755]

Fixed typo with Event Action. [UN-659]

Unifi can be patched between releases by using a special Script Include called hotfix. If you find a bug in Unifi we may issue an update to hotfix so you can get the features you need without having to upgrade.

When upgrading Unifi, you can revert to the latest version of hotfix included in the upgrade. We reset hotfix with each release when the fixes become part of the main application.

We occasionally release a hotfix when minor issues are found. Simply replace the script in the hotfix Script Include with the one shown below and you will instantly have access to the fixes.

These hotfixes will be shipped as real fixes with the next version of Unifi, so make sure you have the correct hotfix for your version.

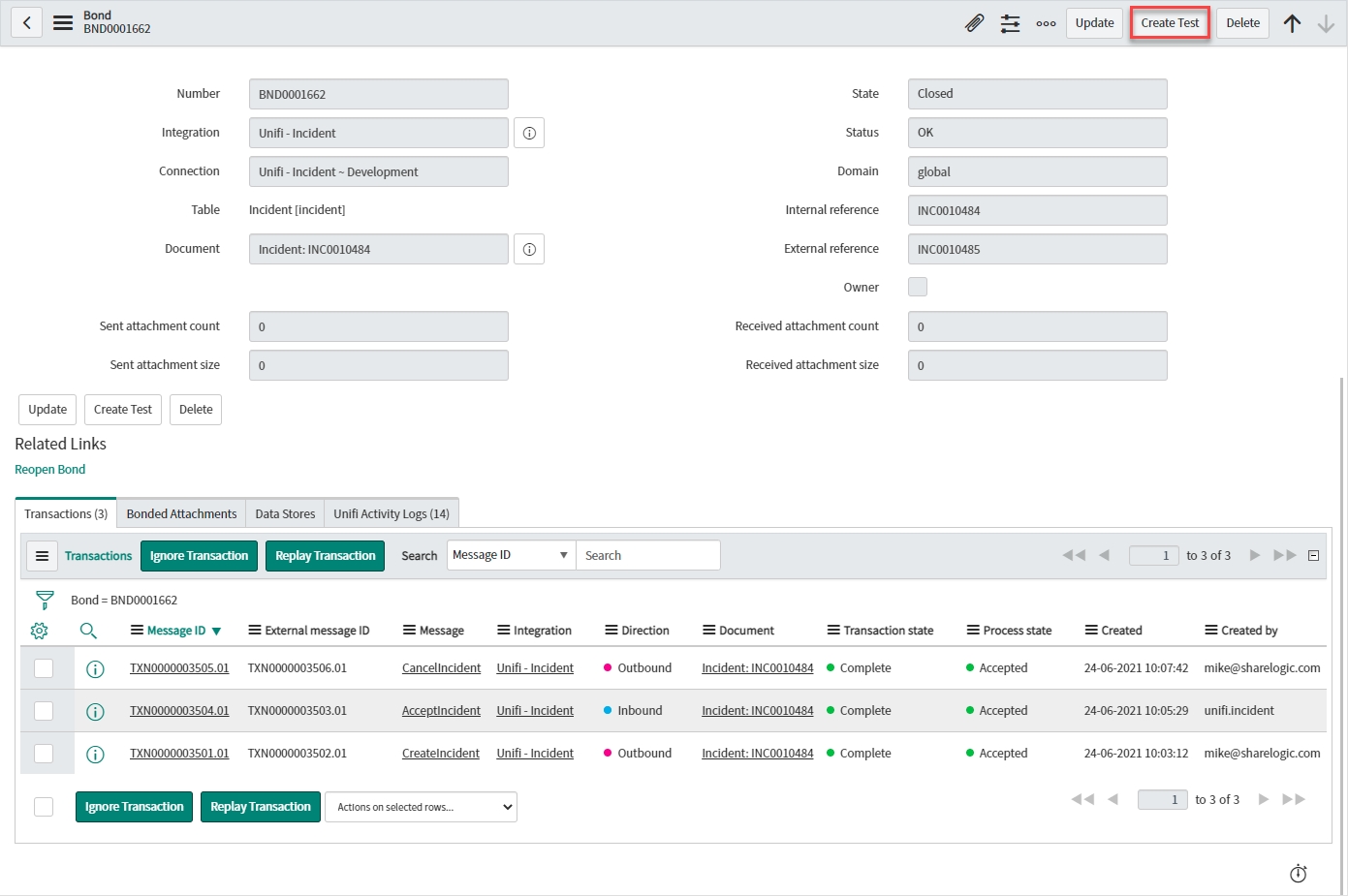

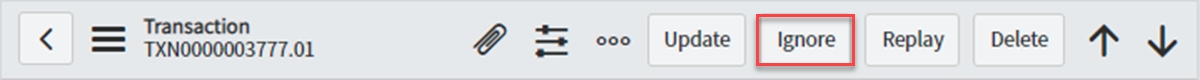

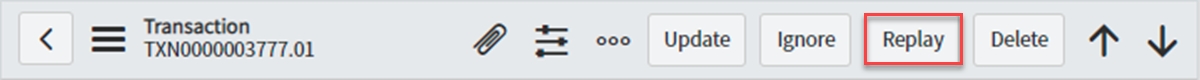

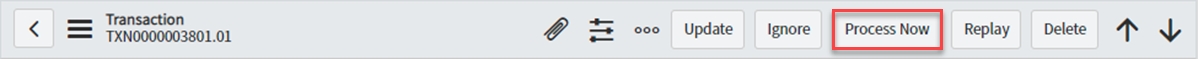

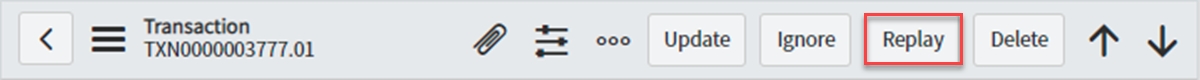

You can easily replay Requests and Transactions directly from the records themselves. This is an invaluable tool for debugging and error handling.

Unlike retries (which are automated), replays are a user initiated action. You can replay both Requests and Transactions directly from their respective records by simply clicking the UI Action.

You can replay a single HTTP Request (whether inbound or outbound). Having the ability to focus in on and replay the specific Request which has errored allows you to identify and correct errors more accurately, quickly and easily.

To replay a Request, click the Replay Request UI Action in the header of the Request record.

A Transaction comprises a Request/Receipt pair of HTTP Requests and represents an instance of a Message being sent/received. You can replay the whole Transaction again (not just a single Request). Replaying a Transaction works differently from replaying a Request, and the two are used at different stages of the debugging process, though both are equally simple to perform.

You can replay a Transaction either from the record itself, or the list view:

Click the Replay UI Action in the header of the Transaction record

Click the Replay Transaction UI Action from the list view.

Firstly, having the ability to replay errored Requests/Transactions can save a significant amount of time and effort when debugging and error handling. For example, typically after spotting an error, you would have to step into the config & make a change, step out to the bonded record and send data again, step into the logs to check what happened and continue around until rectified. Compare that to being on the errored HTTP Request, making a change to the data in that request, replaying it and getting immediate feedback (seeing the response codes) all from within the same record.

Not only that, Unifi automatically replays as the originating user*, so you don't have to impersonate anyone to replay. This saves further time and effort and lets you debug with confidence: because the replay runs as the original sender (not the user replaying), functionality that relies on the identity of the user runs correctly.

When you replay a Request, you replay that specific instance of that data (as-is) at that time. When you replay a Transaction, Unifi takes the Stage data and reprocesses it before sending - building the payload/HTTP Request again.

You would normally replay a Request during development & testing, when debugging (making changes during investigation).

You would normally replay a Transaction once you have completed your investigation and made configuration changes, as reprocessing the data would take those changes into account when building a new payload/HTTP Request. Operationally, you are perhaps more likely to replay a Transaction in cases where, for example, the other system was unavailable and the attachment was not sent - so you want to reprocess and attempt to resend the attachment.
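The difference between the two kinds of replay can be shown with a small model: a Request replay resends the stored payload exactly as it was, while a Transaction replay rebuilds the payload from the Stage data using the current mapping, so configuration changes are picked up. The functions and field names below are hypothetical and only illustrate the distinction described above.

```javascript
// Hypothetical model: a Request replay resends the stored payload as-is,
// while a Transaction replay rebuilds the payload from the Stage data.
function replayRequest(request) {
  return request.payload; // same data, same format as the original Request
}

function replayTransaction(stage, buildPayload) {
  return buildPayload(stage); // reprocess the Stage with the current mapping
}

const stage = { short_description: "Printer jammed" };
let mapping = function (s) { return JSON.stringify({ summary: s.short_description }); };
const originalRequest = { payload: mapping(stage) };

// A mapping fix is made after the original Request was sent.
mapping = function (s) { return JSON.stringify({ title: s.short_description }); };

console.log(replayRequest(originalRequest));    // {"summary":"Printer jammed"}
console.log(replayTransaction(stage, mapping)); // {"title":"Printer jammed"}
```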

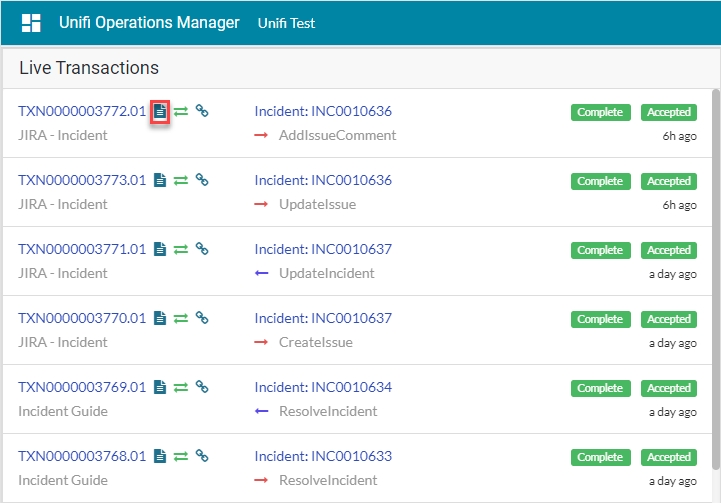

Each new attempt to replay either a Transaction or a Request will be incremented with a decimal suffix (.1, .2 etc.)*. This means you can easily identify which replay relates to which record and in which order they were replayed. For example, TX00123 will replay as TX00123.1 and then TX00123.2 etc.
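A minimal sketch of the numbering scheme, assuming each replay takes the highest existing `.n` suffix of the original number plus one. How Unifi handles the cases marked with the asterisk is not covered here, and `nextReplayNumber` is a hypothetical helper.

```javascript
// Hypothetical helper: given the original number and the numbers already
// used, return the next replay number with an incremented ".n" suffix.
function nextReplayNumber(original, existingNumbers) {
  const prefix = original + ".";
  let highest = 0;
  existingNumbers.forEach(function (n) {
    if (n.startsWith(prefix)) {
      const suffix = Number(n.slice(prefix.length));
      if (Number.isInteger(suffix) && suffix > highest) highest = suffix;
    }
  });
  return prefix + (highest + 1);
}

console.log(nextReplayNumber("TX00123", ["TX00123"]));              // TX00123.1
console.log(nextReplayNumber("TX00123", ["TX00123", "TX00123.1"])); // TX00123.2
```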

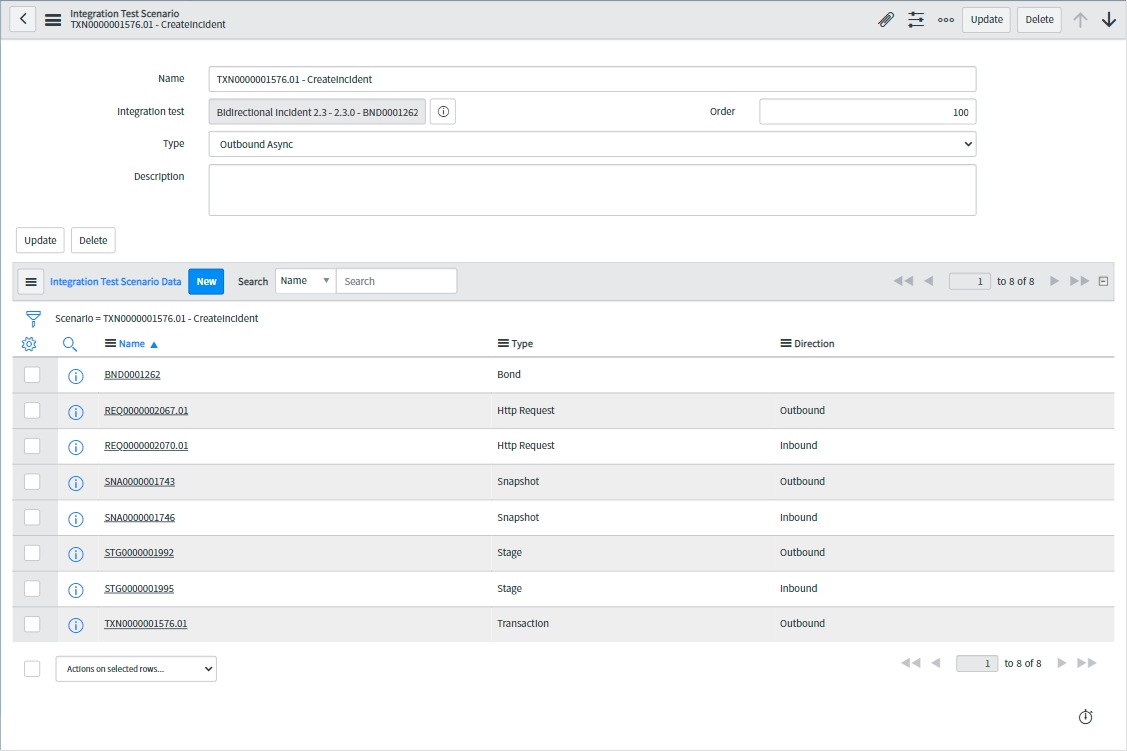

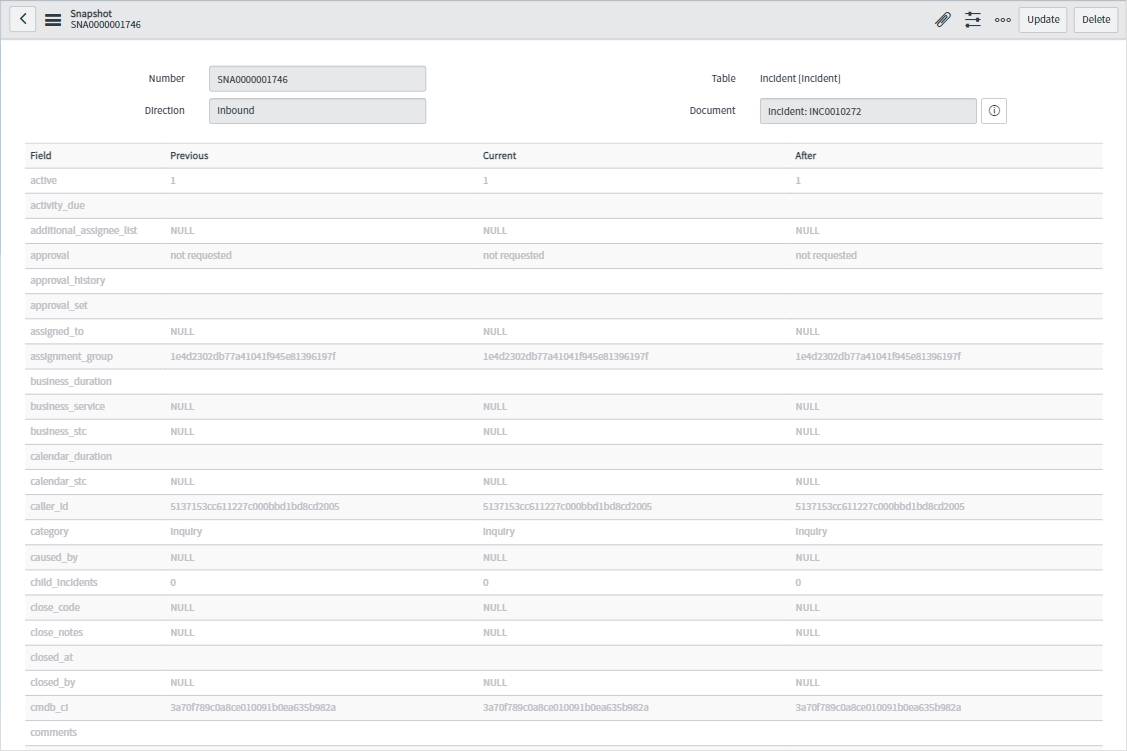

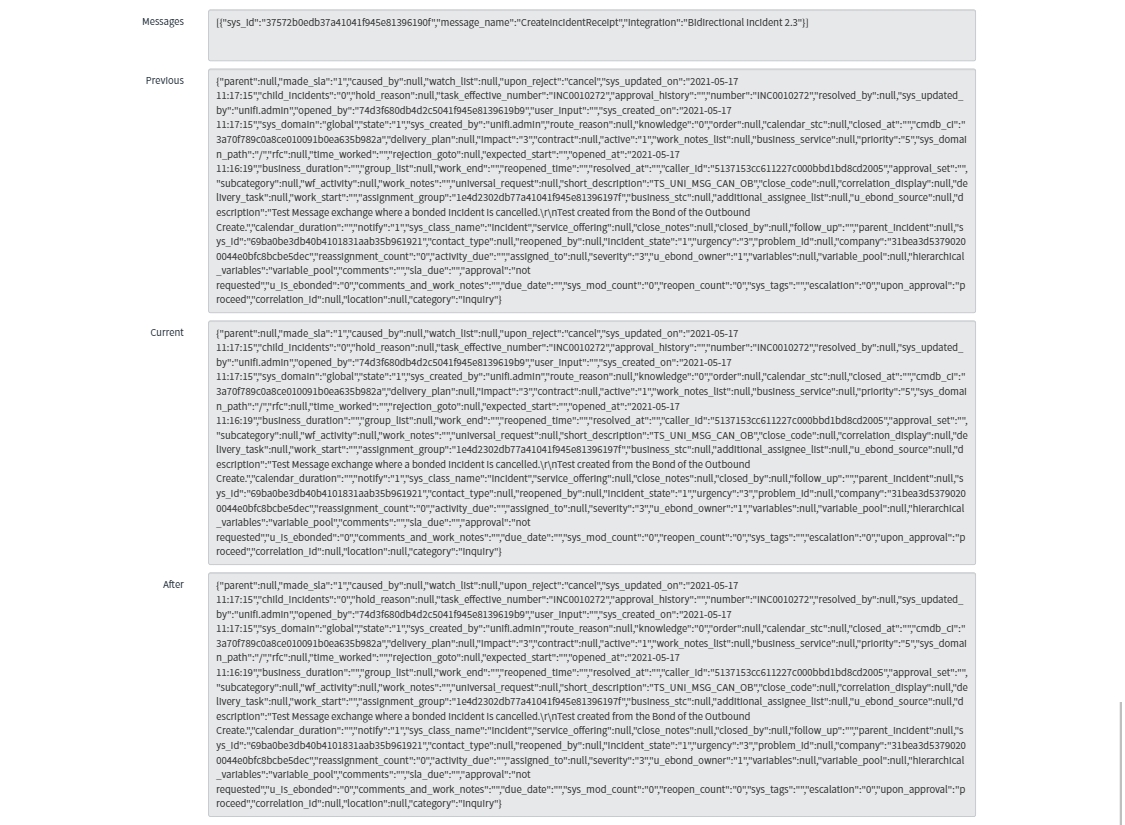

Test Scenario Data is a snapshot of all the relevant records created during the processing of a Transaction and is used to both generate the test and ascertain the results of each test run.

Integration Test Scenario data is used to initially create the new test record (the record created during the running of the test) and then to subsequently compare the test results to the expected results.

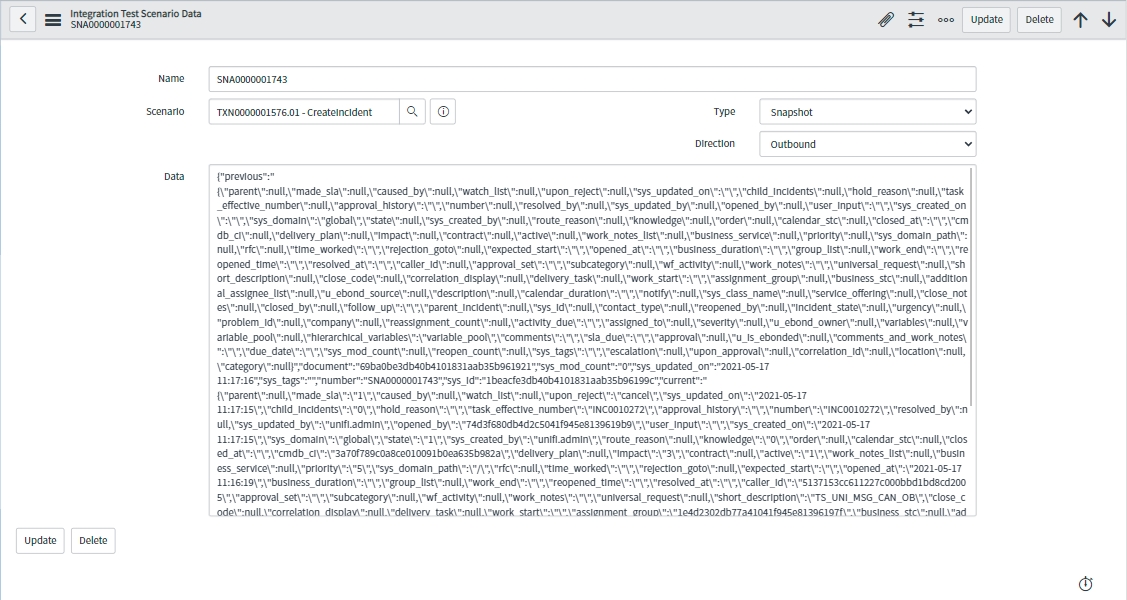

This is the final step in the generation and setup of Integration Tests. There are various 'Types' of Test Scenario Data which represent the different records used at different points in the processing of data through Unifi. Each 'Type' of data is a JSON representation of the corresponding record, as discussed below.

This is a JSON representation of the Snapshot record taken when processing the original Transaction. This data is used to create or update the new test record.

This is a JSON representation of the Stage created when processing the original Transaction (both inbound and outbound Stages). It is used to compare to the results of the test run.

This is a JSON representation of the Bond created when processing the original Transaction. It is used to compare to the results of the test run.

This is a JSON representation of the Bonded Attachment created when processing the original Transaction. It is used to compare to the results of the test run.

This is a JSON representation of the Transaction created when it was processed originally. It is used to compare to the results of the test run.

This is a JSON representation of the HTTP Request created when processing the original Transaction (both inbound and outbound Requests). It is used to compare to the results of the test run.
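To illustrate how stored JSON representations can be "used to compare to the results of the test run", the sketch below diffs an expected record against the record produced by a test run, skipping fields that naturally differ between runs. The ignored field list and the sample fields (`status`, `direction`) are assumptions for illustration; the exact fields Unifi compares are not specified here.

```javascript
// Hypothetical comparison of expected vs actual JSON snapshots. Fields that
// always differ between runs (identifiers, timestamps, numbers) are ignored.
const IGNORED_FIELDS = ["sys_id", "sys_created_on", "sys_updated_on", "number"];

function compareScenarioData(expectedJson, actualJson) {
  const expected = JSON.parse(expectedJson);
  const actual = JSON.parse(actualJson);
  const differences = [];
  Object.keys(expected).forEach(function (field) {
    if (IGNORED_FIELDS.indexOf(field) !== -1) return;
    if (JSON.stringify(expected[field]) !== JSON.stringify(actual[field])) {
      differences.push({ field: field, expected: expected[field], actual: actual[field] });
    }
  });
  return differences;
}

const diffs = compareScenarioData(
  '{"sys_id":"a1","status":"Complete","direction":"Outbound"}',
  '{"sys_id":"b2","status":"Error","direction":"Outbound"}'
);
console.log(diffs.length); // 1
```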

The screenshot below is a Snapshot 'Type' Integration Test Scenario Data record, but is representative of each of the various types.

The following table describes the fields which are visible on the Integration Test Scenario Data record.

Follow this guide to configure a simple outbound integration to the table API of your Personal Developer Instance. It is given as an aid for those new to Unifi, or to play as part of a trial.

Congratulations on your decision to use Unifi, the only integration platform you need for ServiceNow. We are sure you will be more than satisfied with this extremely powerful, versatile and technically capable solution.

We have created this Outbound Incident Guide as an aid to customers who are beginning their journey in deploying the Unifi integration platform. We would not want you to be overwhelmed by exploring all that Unifi has to offer here, so we have deliberately limited the scope of this document. It will guide you through an example of how to configure a basic Incident integration, sending outbound messages via the REST API to the table API of another ServiceNow instance (i.e. your Personal Developer Instance, ‘PDI’).

We do not recommend synchronous integrations for enterprise ticket exchange. This Guide is purely here for you to have a play as part of a trial. It is designed to connect to a PDI without Unifi being installed on the other side.

For more technical information on how to use Unifi, please see our .

Do not build integrations directly within the Unifi application scope. This can create issues with upgrades and application management.

The prerequisite to configuring Unifi is to have it installed on your instance. As with any other ServiceNow scoped application, Unifi must be purchased via the ServiceNow Store before installation.

We recommend you follow the Setup instructions prior to configuring your Integration.

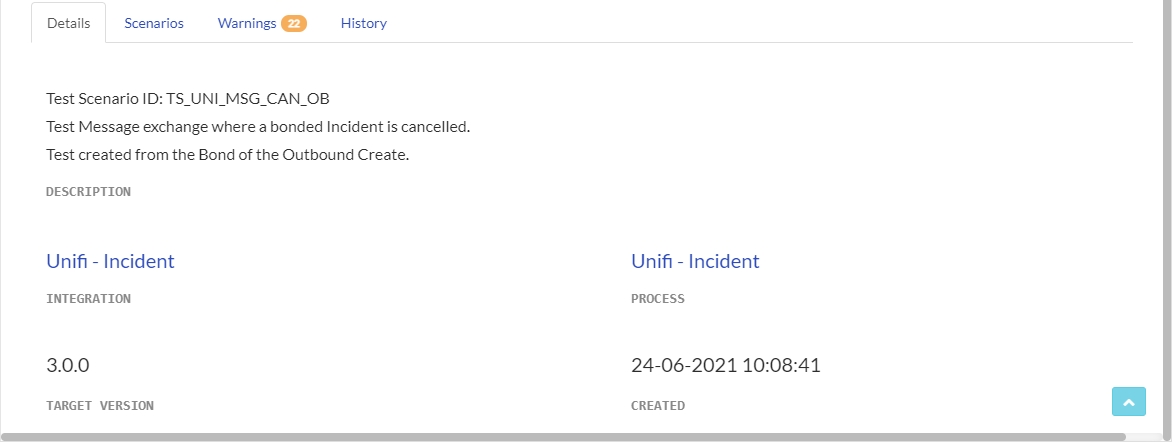

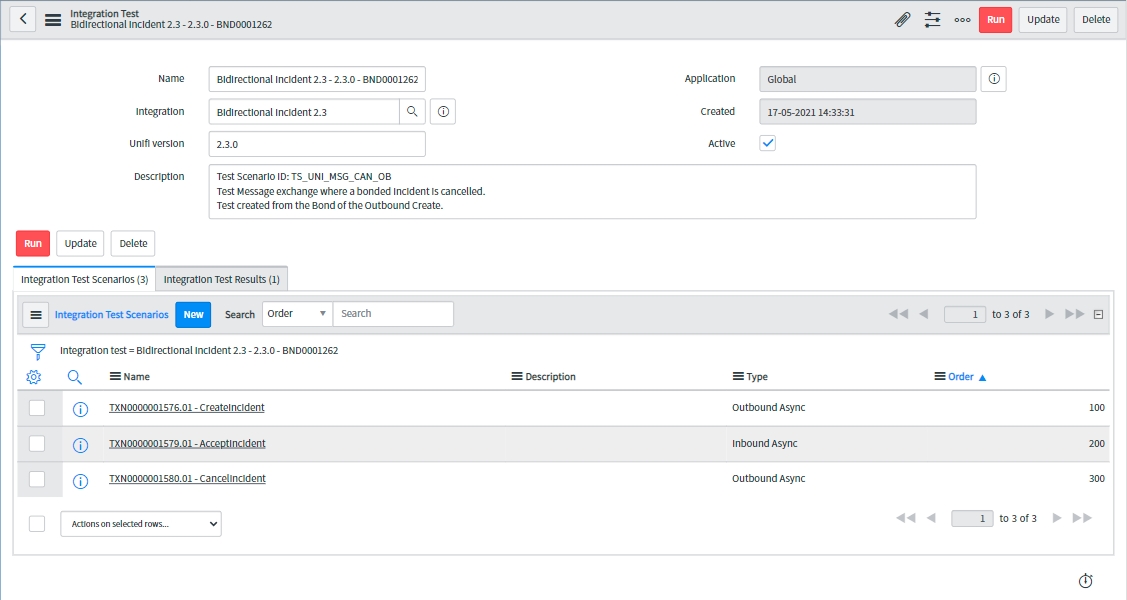

Integration Test Scenarios are the elements that make up an Integration Test. Each Scenario will correlate to the relevant Transaction on the Bond from which the test was created.

An Integration Test Scenario represents a Transaction on a Bond. Each contains the relevant Test Scenario Data objects for the particular Scenario.

The following table describes the fields which are visible on the Integration Test Scenario record.

The 'Integration Test Scenario Data' related list is also visible on the Integration Test Scenario record.

For each of our Scenarios we will need to configure the relevant Messages & Fields. This scenario will need to be tested before moving on to the next.

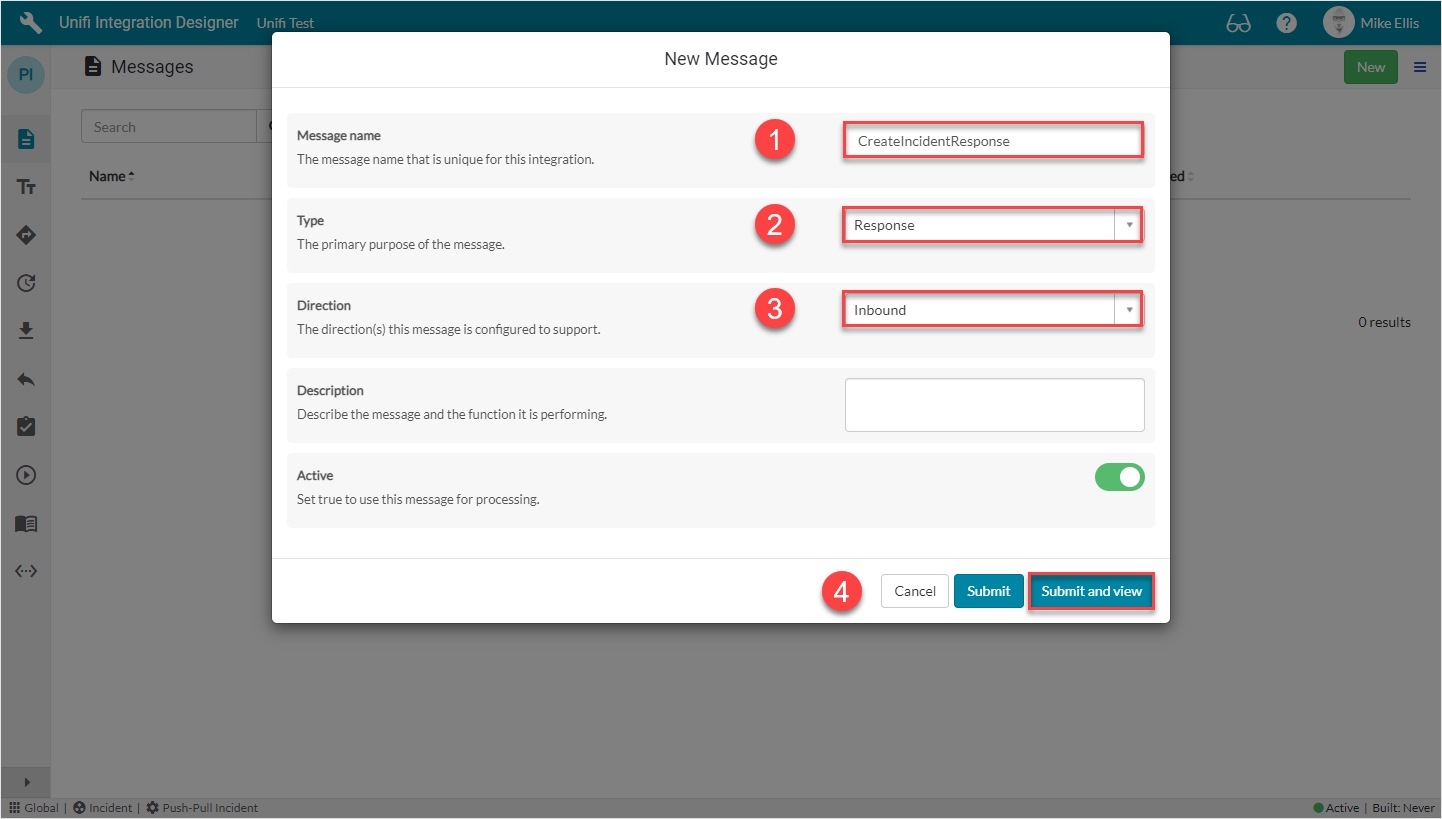

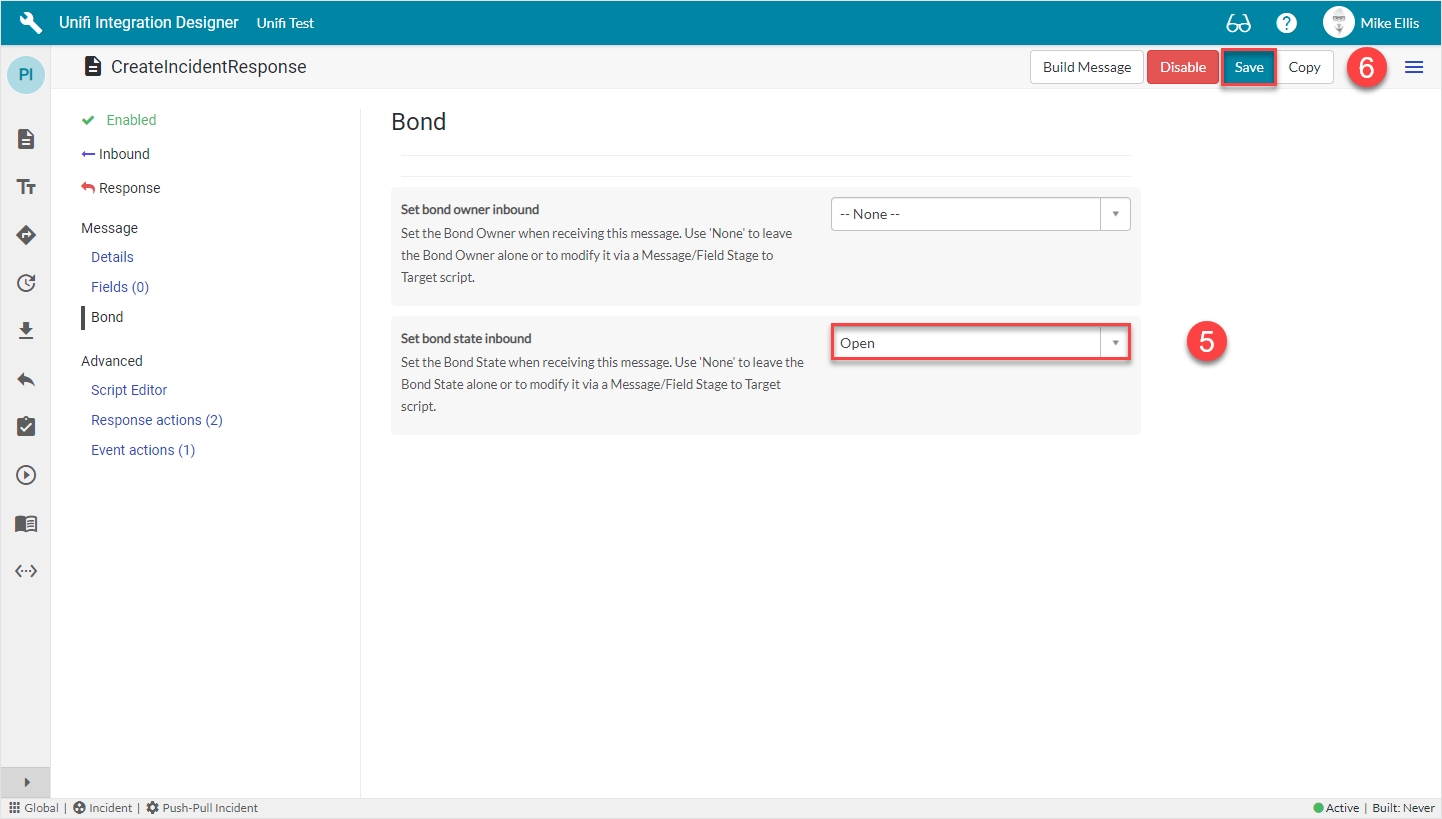

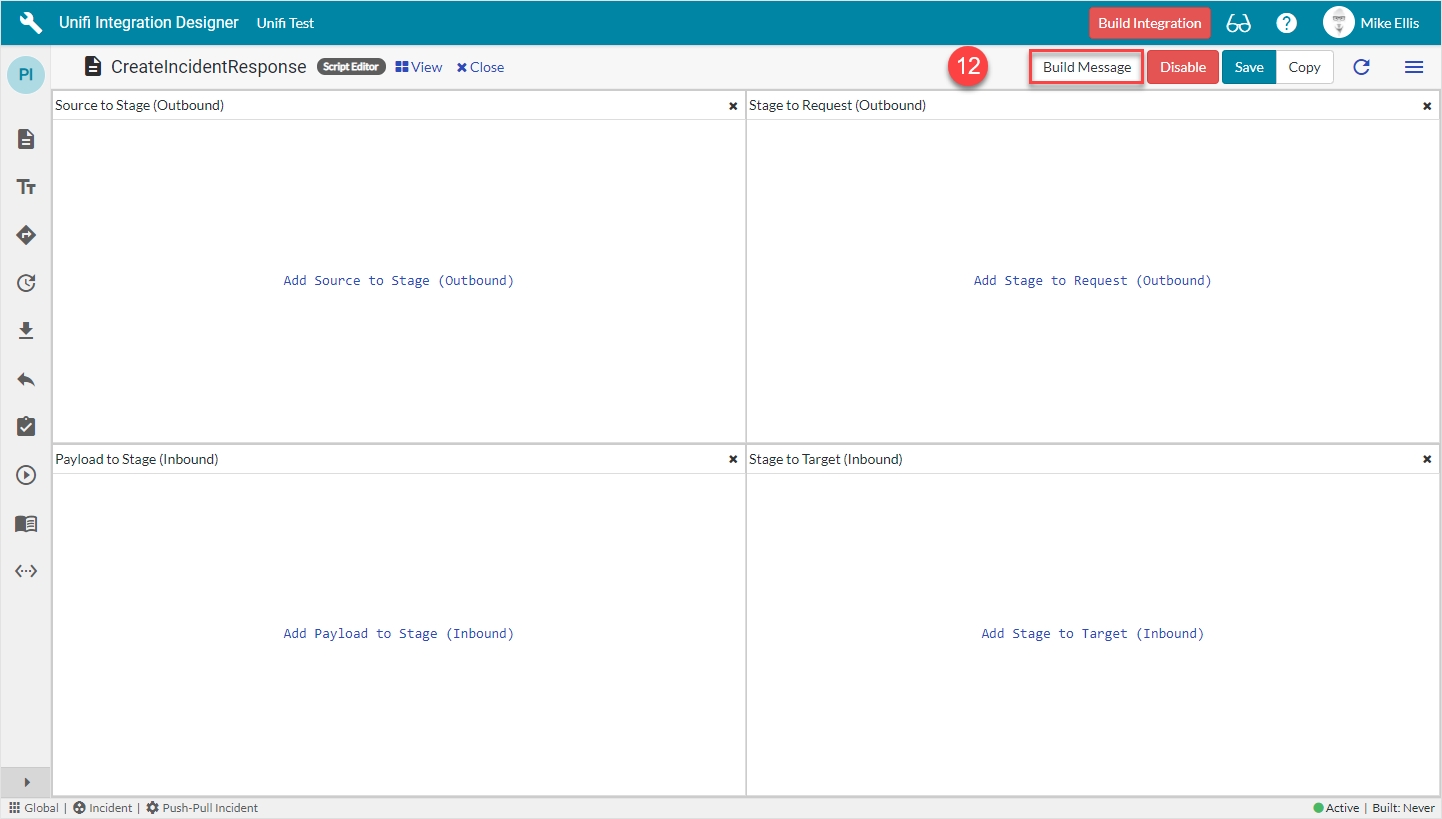

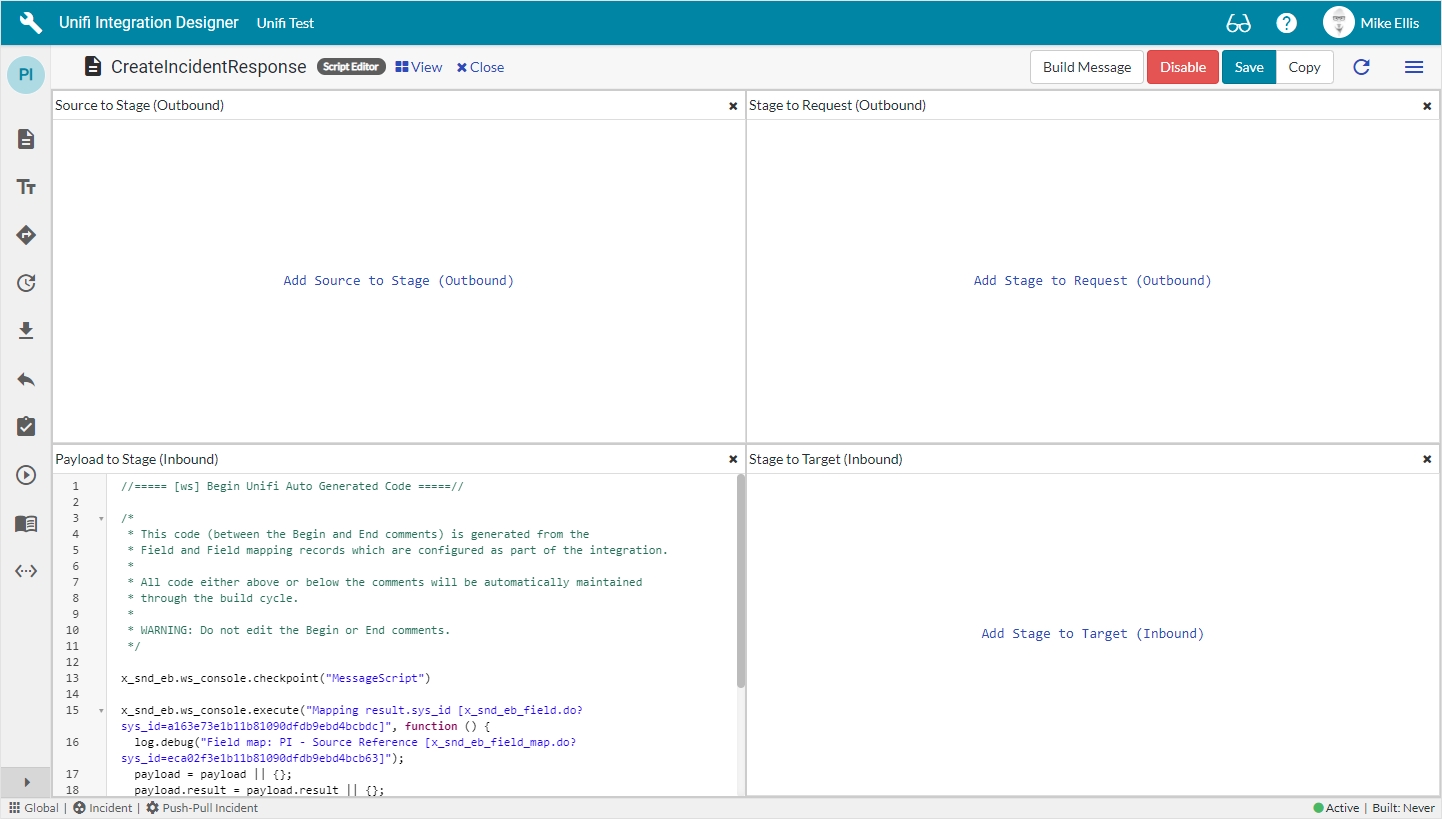

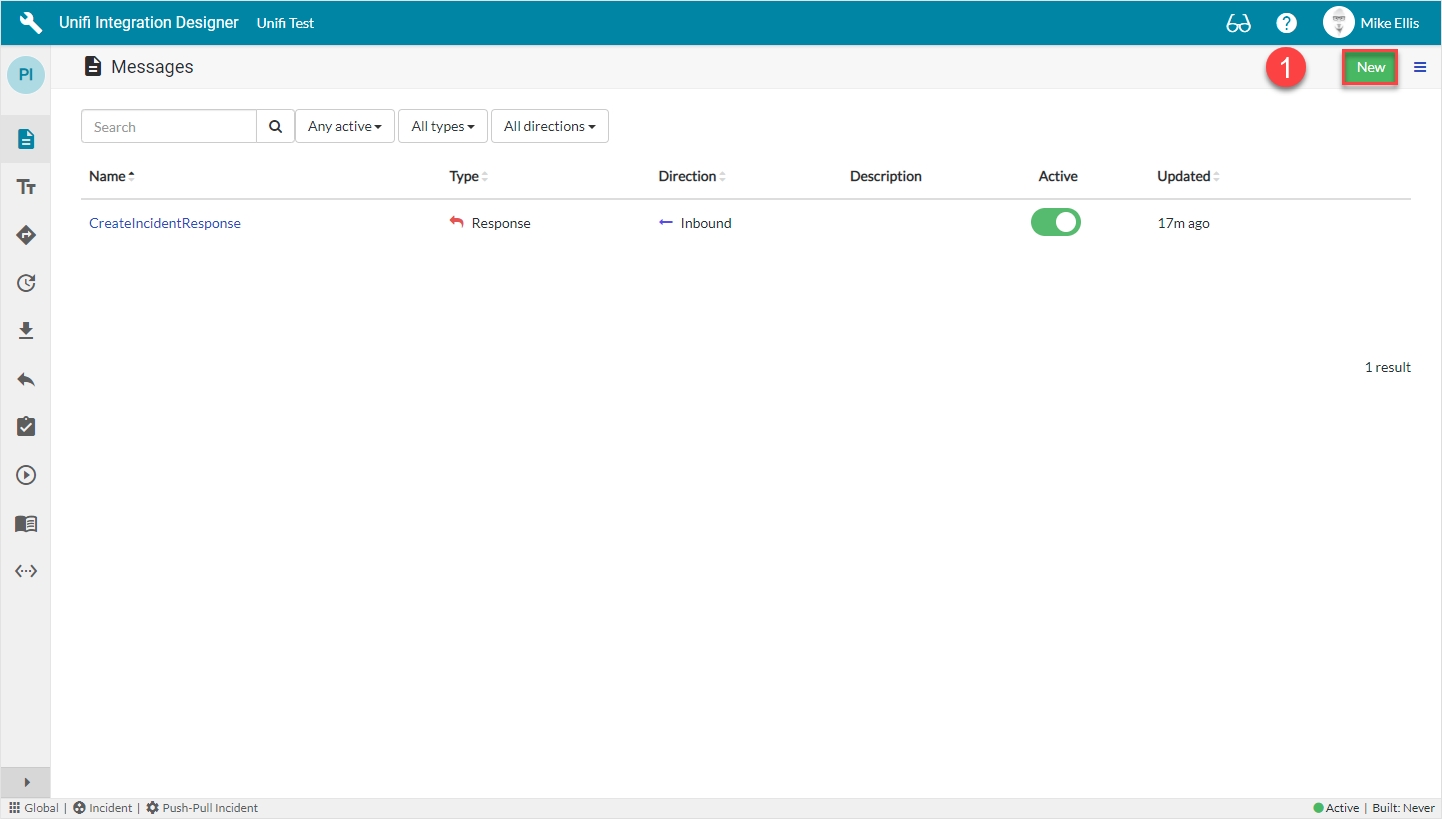

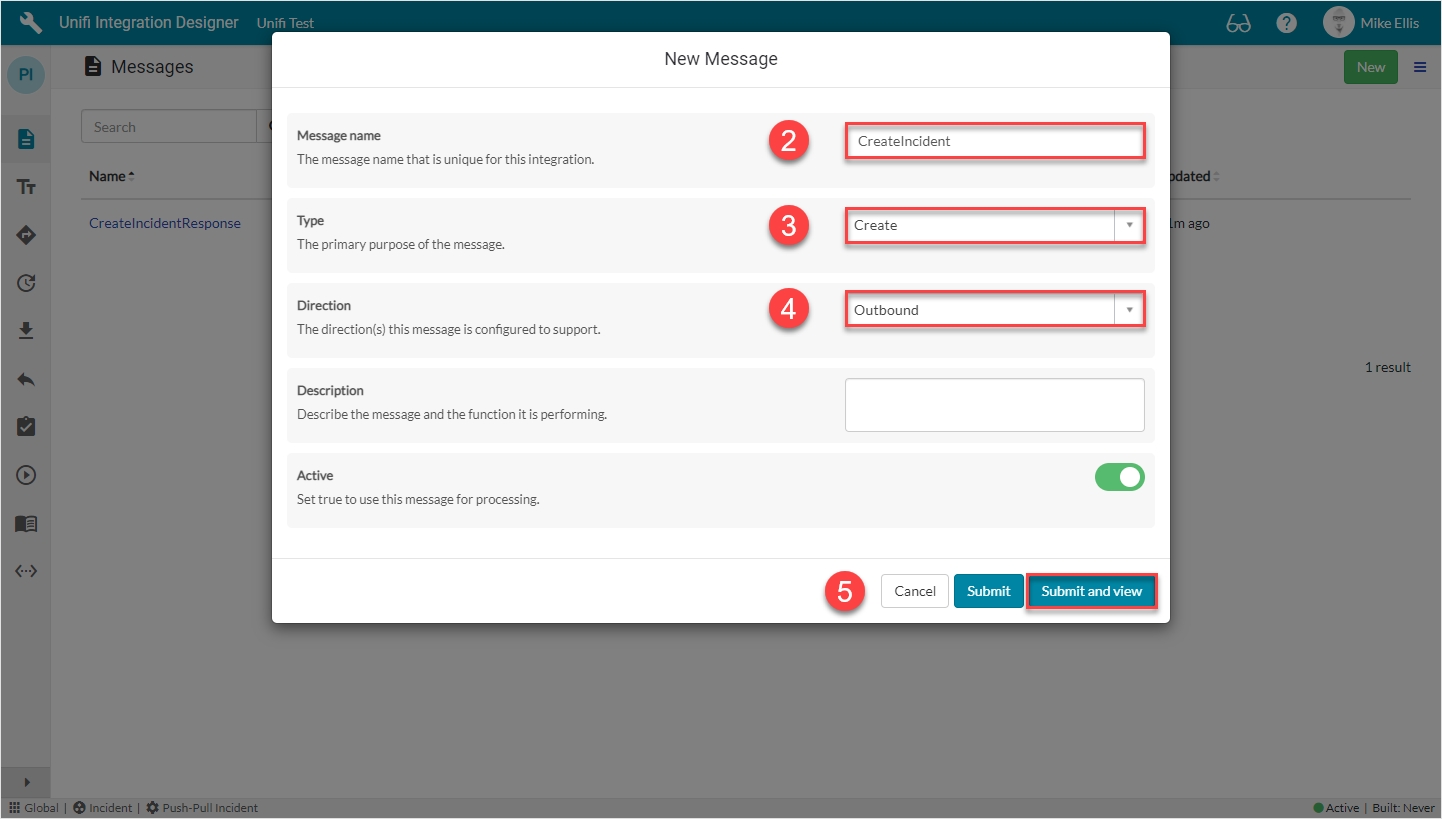

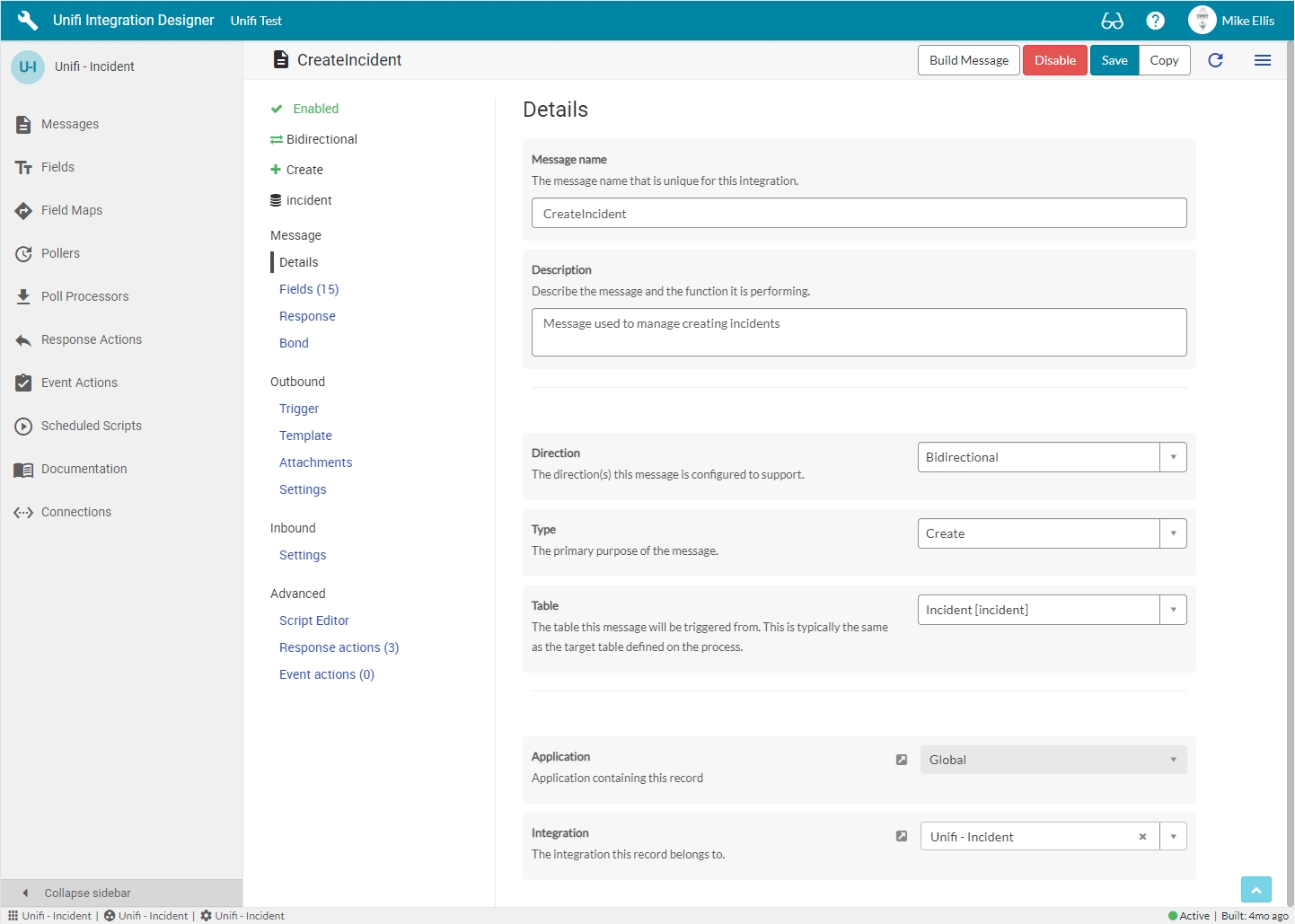

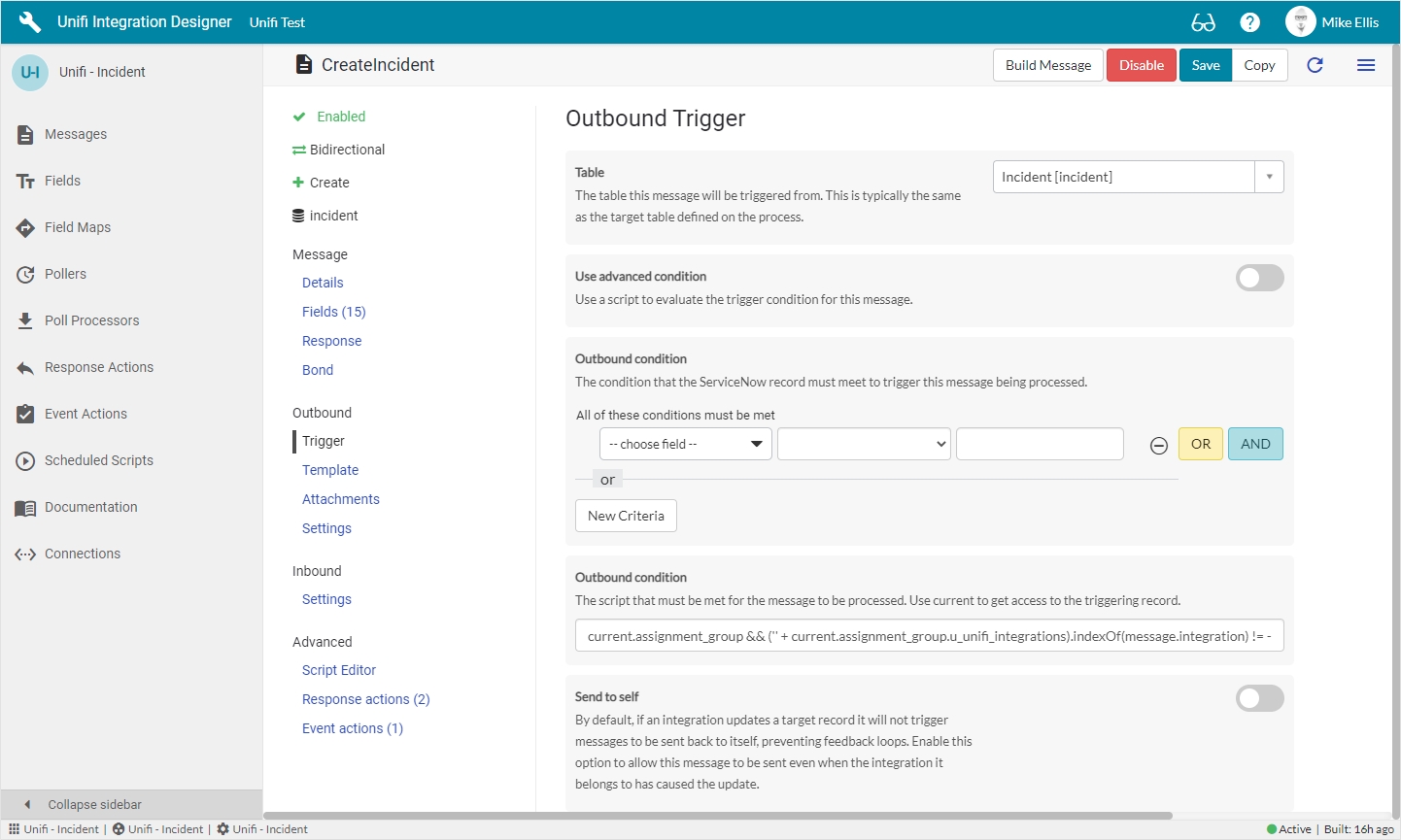

The Messages we shall be configuring for the Create Scenario are:

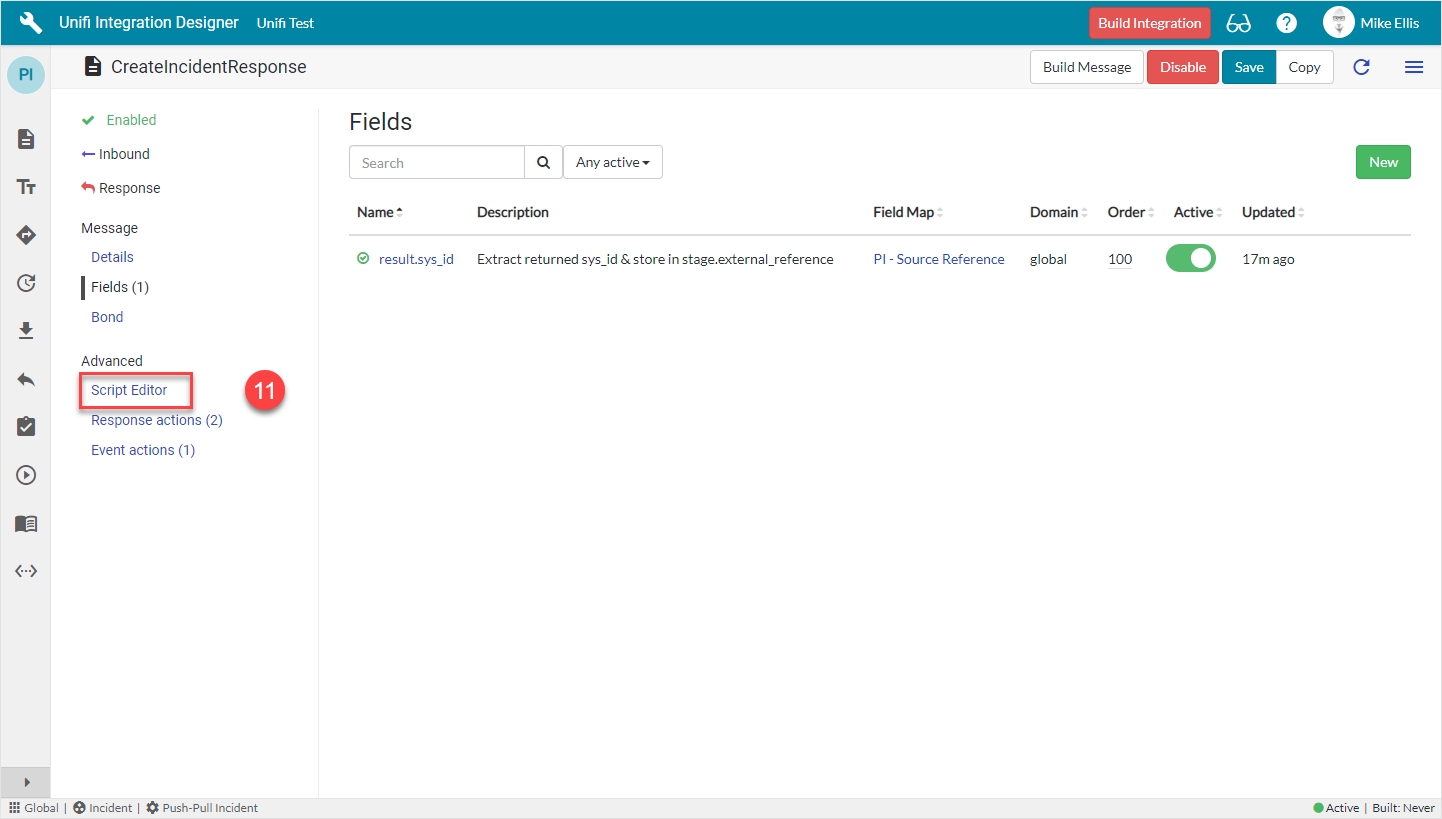

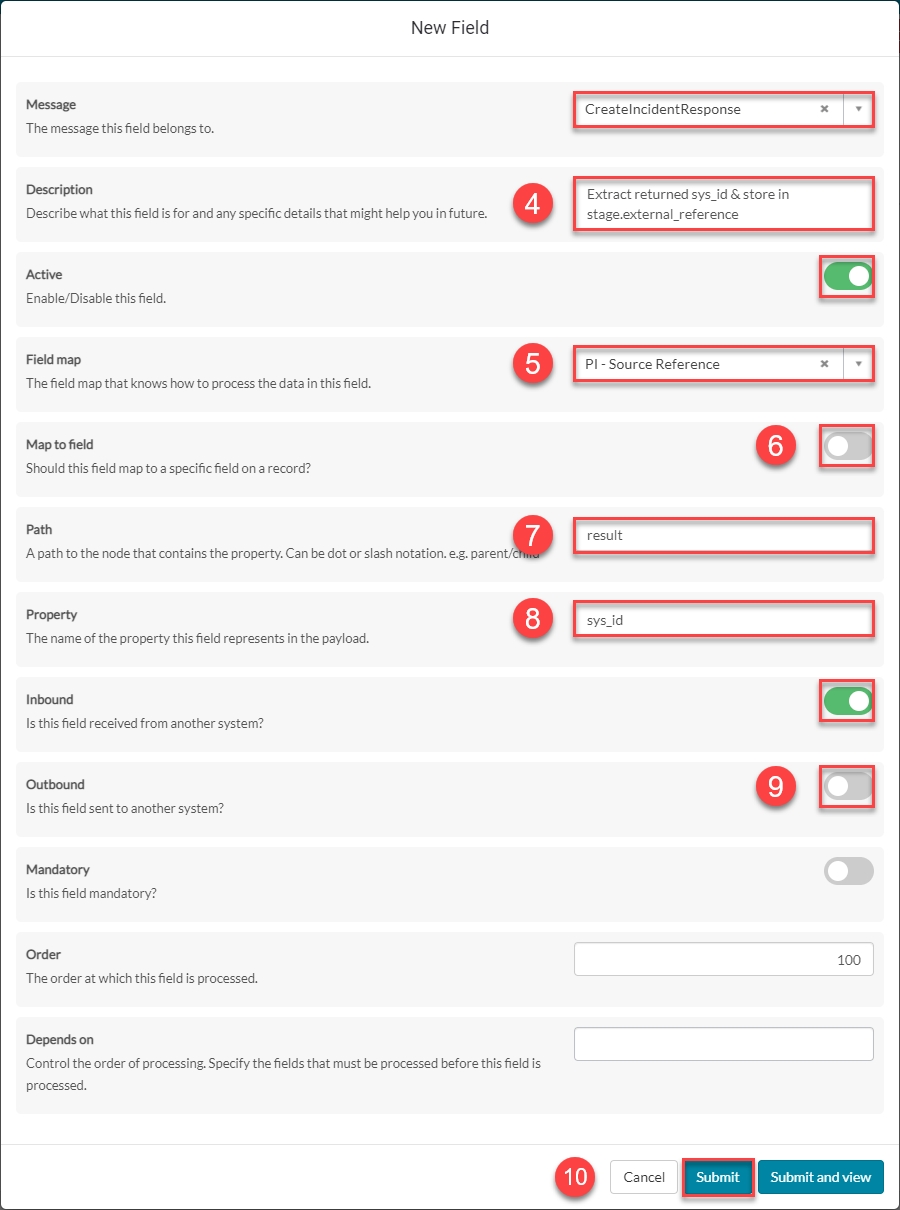

CreateIncidentResponse

CreateIncident

We will define which Field records require configuring for each of those Messages at the appropriate time.

The scenario will need to be successfully tested before we can say it is complete.

We shall look in detail at each of the Messages and their respective Fields in turn over the next few pages, before moving on to Test.

Unifi has some functionality that requires access to methods not available to scoped applications. We grant Unifi access to those methods through a single global utility Script Include which you can install via Update Set.

It is strongly advised that you install this utility to get the most out of Unifi.

Download Unifi Global Utility.

Creating a new Dataset for exporting and importing data is a simple 3-step process.

Create a new Dataset

Configure the Process Message

Configure the Send Message

Set up a scheduled job to ensure refresh tokens do not expire.

We recommend customers using outbound OAuth use this scheduled job script to ensure outbound OAuth connections remain alive, as explained in from ServiceNow.

Without this job, the refresh token will eventually expire which means ServiceNow will no longer be able to retrieve an access token. This in turn will cause outbound requests to fail.

If your OAuth service is including refresh tokens with each access token request, this job may not be required.

This script has been reformatted from the KB Article for ease of use.
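The decision the scheduled job makes for each REST Message can be isolated as a pure function. In this sketch the ServiceNow token object is replaced by a plain object with hypothetical property names, so the logic can be run and checked outside the platform; the 300-second window mirrors the check in the job script.

```javascript
// Decision logic of the refresh job, with the platform token object replaced
// by a plain object (property names are assumptions for illustration).
function isExpired(expiresIn, withinSeconds) {
  return expiresIn <= withinSeconds;
}

function refreshDecision(token) {
  if (!token) return "no-token";
  // Access token still valid for more than 5 minutes: nothing to do.
  if (token.accessToken && !isExpired(token.expiresIn, 300)) return "still-valid";
  // Without a usable refresh token, someone must reauthorize manually.
  if (!token.refreshToken) return "reauthorize";
  if (isExpired(token.refreshTokenExpiresIn, 0)) return "reauthorize";
  return "refresh";
}

console.log(refreshDecision({ accessToken: "a", expiresIn: 3600, refreshToken: "r", refreshTokenExpiresIn: 86400 })); // still-valid
console.log(refreshDecision({ accessToken: "a", expiresIn: 120, refreshToken: "r", refreshTokenExpiresIn: 86400 }));  // refresh
console.log(refreshDecision({ accessToken: "", expiresIn: 0, refreshToken: "r", refreshTokenExpiresIn: 0 }));         // reauthorize
```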

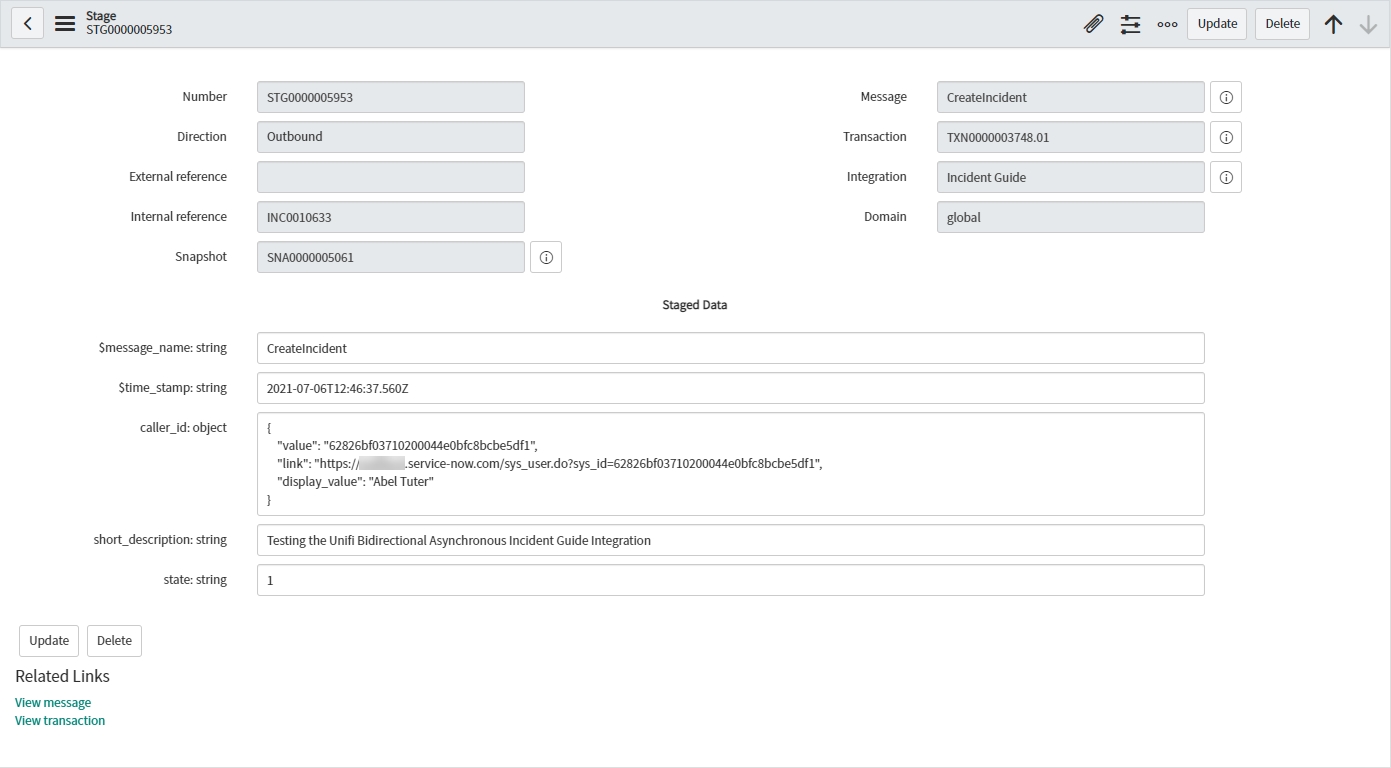

The Stage is the root staging table for all data mapping. Stages are created dynamically at the time of data being sent/received. The Staged Data fields will vary dependent on the data being sent.

Stages are a snapshot of the data either at the time of being sent or received. The Stage stores the snapshot of data, which is what facilitates the asynchronous exchange of Messages. Having that data called out into separate fields provides a clean & clear view of the data being sent/received, which in turn can provide a clearer picture of the cause of any potential discrepancies in the mapping of data that is being translated*/transformed**.

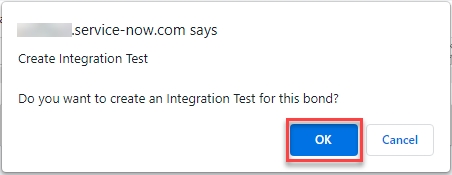

How to generate an automated Integration Test.

Before you can run an automated Integration Test you must first create one. Unifi makes that extremely easy for you.

Integration Tests are created directly from the Bond record. At the click of a button, Unifi will generate a test which comprises each of the Transaction scenarios on that Bond.

Navigate to the Bond you wish to create the automated Integration Test from and click 'Create Test'.

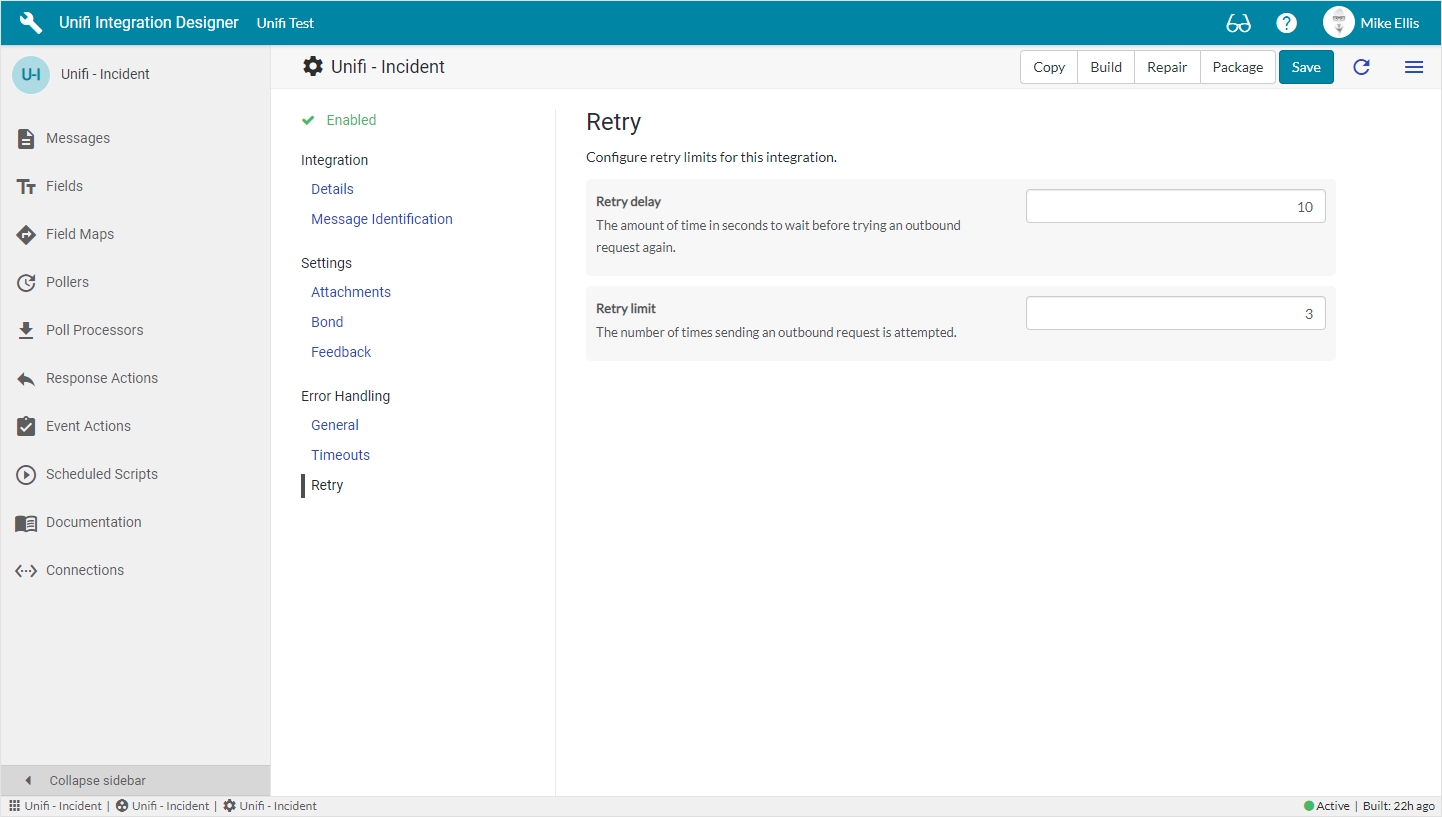

Retry logic is configurable per Integration and controls how Unifi will automatically retry errored HTTP Requests.

The retry functionality is like a first line of defence when it comes to error handling. It builds in time to allow the system to deal with potential issues in the first instance and saves the analyst or administrator having to step in at the first sign of any problems. This can be useful in scenarios where, for example, the external system is temporarily busy with other tasks and is unable to respond in time.

Rather than fail or error a Transaction at the first unsuccessful attempt, Unifi will automatically retry and process it again. The number of times it attempts to do so and how long it waits (both for a response and before attempting to retry again) are configurable parameters.

Although the retry logic itself is applied at the HTTP Request level, the settings to configure it can be found on the Integration. This means that they can be configured uniquely and specifically for each Integration. Unifi will automatically retry errored Requests according to those settings.
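A minimal model of the retry idea: attempt a send up to a configured number of times, with a configured wait between attempts, and only mark the Transaction as errored once every attempt has failed. The parameter names `maxAttempts` and `retryDelaySeconds` are hypothetical stand-ins for the settings on the Integration, and the sketch records the wait rather than actually sleeping.

```javascript
// Hypothetical retry model: try up to maxAttempts times and record the wait
// that would precede each retry (no real sleeping happens in this sketch).
function sendWithRetry(send, maxAttempts, retryDelaySeconds) {
  const log = [];
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const ok = send(attempt);
    log.push({ attempt: attempt, ok: ok, waitBefore: attempt === 1 ? 0 : retryDelaySeconds });
    if (ok) return { status: "Complete", log: log };
  }
  return { status: "Error", log: log };
}

// The other system is busy for the first two attempts, then responds.
const result = sendWithRetry(function (attempt) { return attempt >= 3; }, 5, 60);
console.log(result.status, result.log.length); // Complete 3
```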

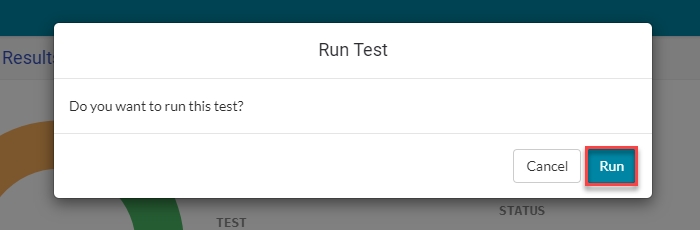

Follow this guide to learn how to generate and run automated Integration Tests in Unifi.

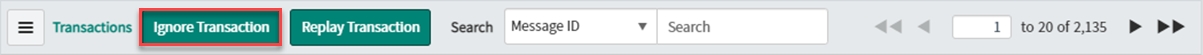

Setting Transactions to Ignored stops the queue from processing.

Ignore is a UI Action on the Transaction record which allows administrators to manually ignore Transactions. Clicking it prevents queued Transactions being processed.

You can ignore a Transaction either from the record itself, or the list view:

Click the Ignore UI Action in the header of the Transaction record.

Click the Ignore Transaction UI Action from the list view.

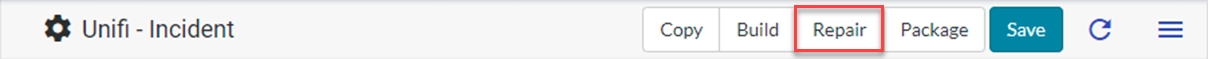

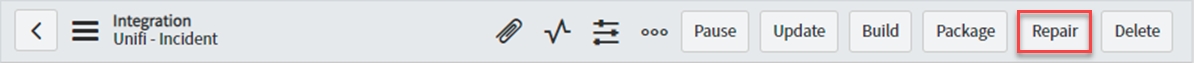

The Repair feature allows you to manually replay all Transactions on an Integration which are in either an Error or Timed Out state.

Repair is a UI Action on the Integration. Clicking it will cause a bulk replay of all its broken Transactions (those in an Error or Timed Out state).

You can repair an Integration either from Unifi Integration Designer, or native ServiceNow:

Click the Repair UI action on the Integration record in the Unifi Integration Designer portal.

Click the Repair UI Action in the header of the Integration record in native ServiceNow.
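Conceptually, Repair selects every Transaction on the Integration in an Error or Timed Out state and replays each one. The sketch below models that selection with plain objects; the `replay` callback and the record shapes are hypothetical.

```javascript
// Hypothetical model of Repair: replay every Transaction on the Integration
// that is in an Error or Timed Out state; all others are left untouched.
const REPAIRABLE_STATES = ["Error", "Timed Out"];

function repairIntegration(transactions, replay) {
  return transactions
    .filter(function (tx) { return REPAIRABLE_STATES.indexOf(tx.state) !== -1; })
    .map(function (tx) { return replay(tx); });
}

const transactions = [
  { number: "TX001", state: "Complete" },
  { number: "TX002", state: "Error" },
  { number: "TX003", state: "Timed Out" }
];
const replayed = repairIntegration(transactions, function (tx) { return tx.number + ".1"; });
console.log(replayed.join(",")); // TX002.1,TX003.1
```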

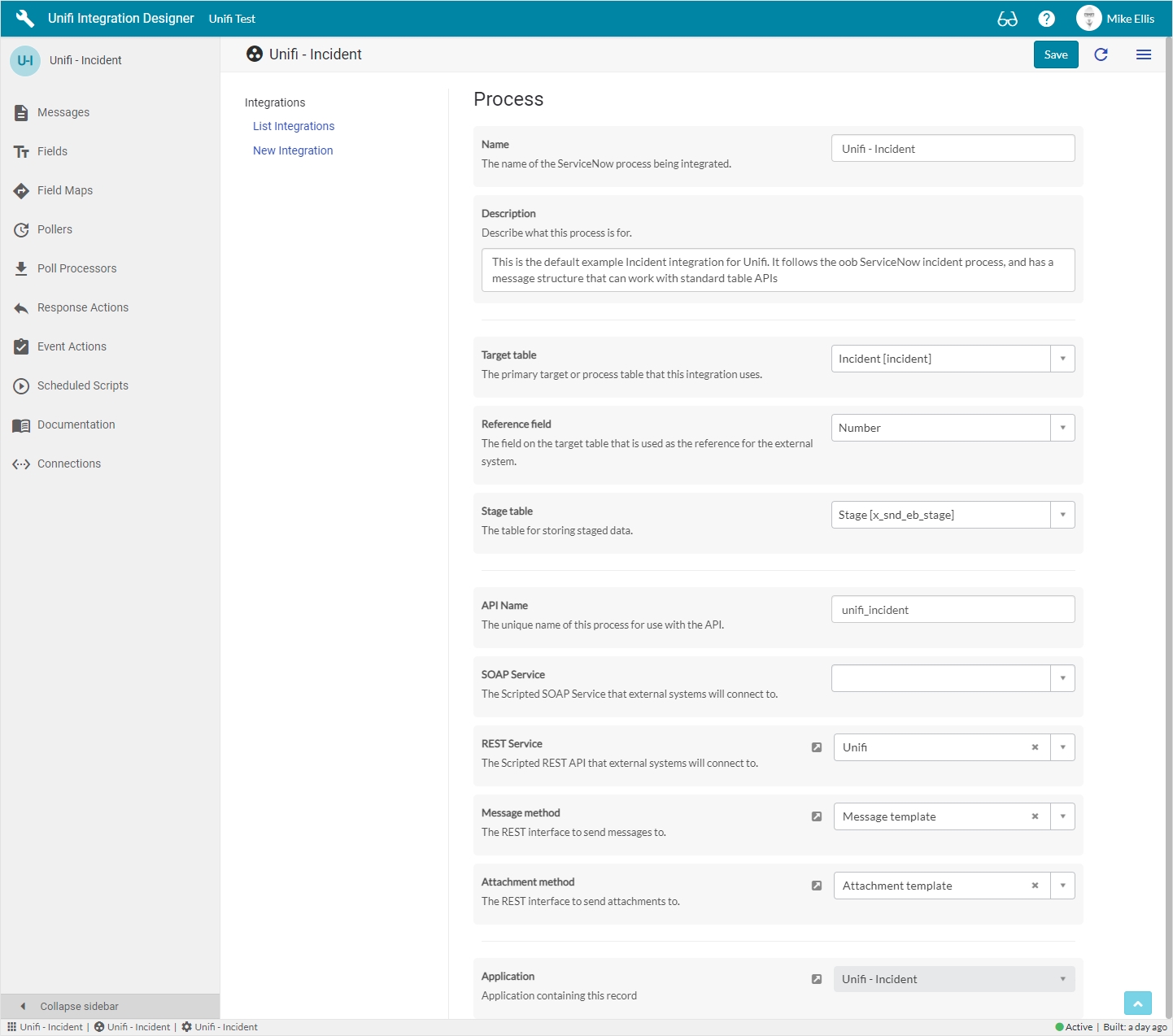

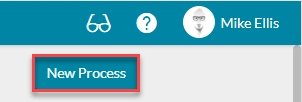

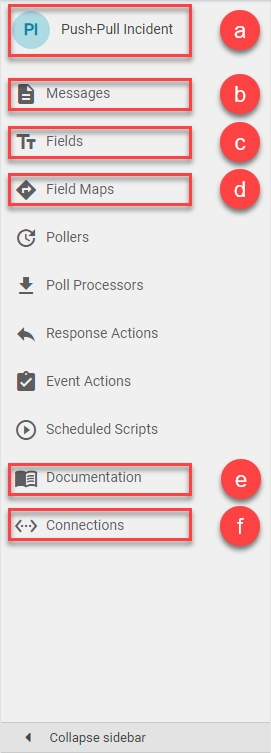

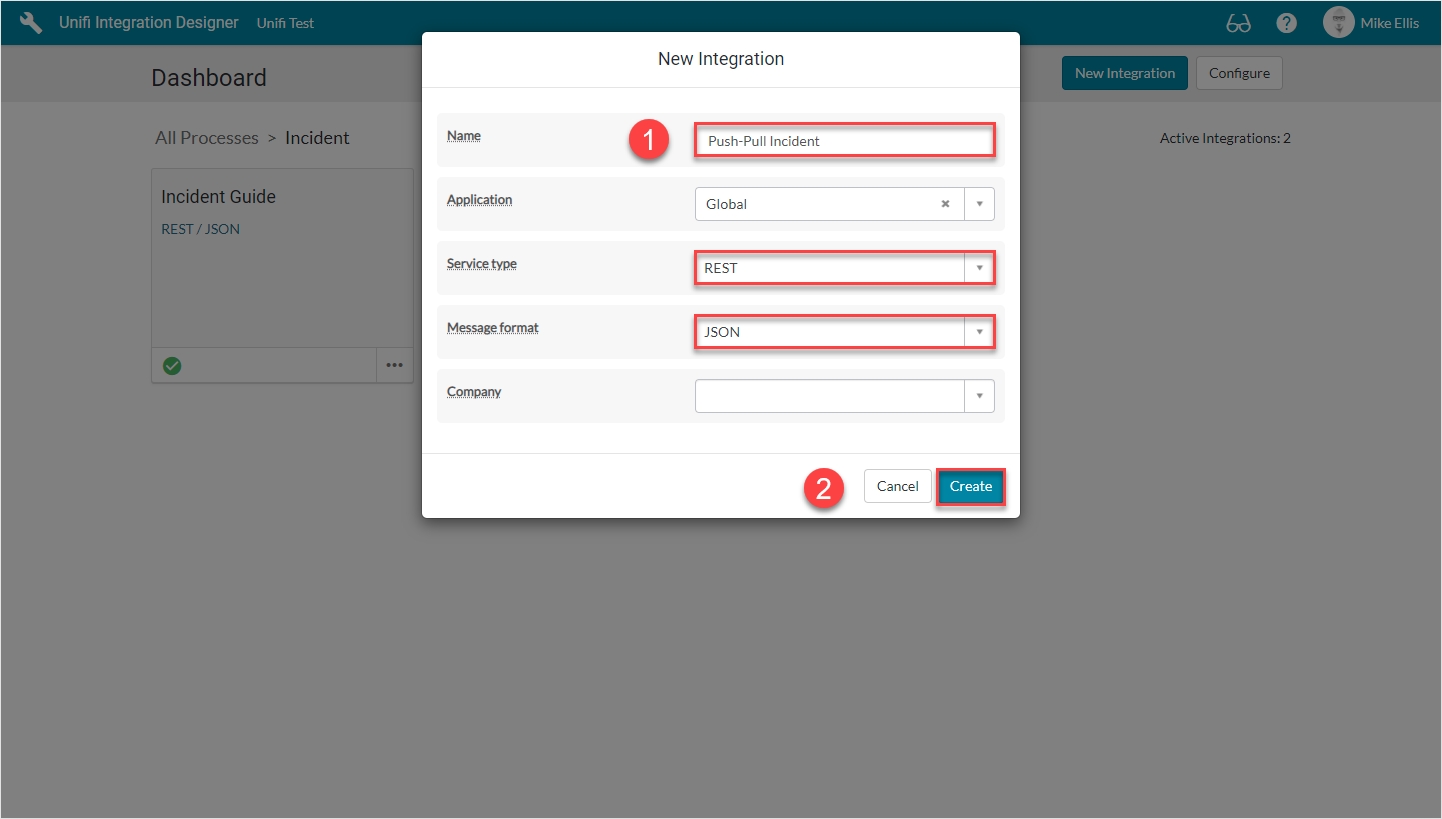

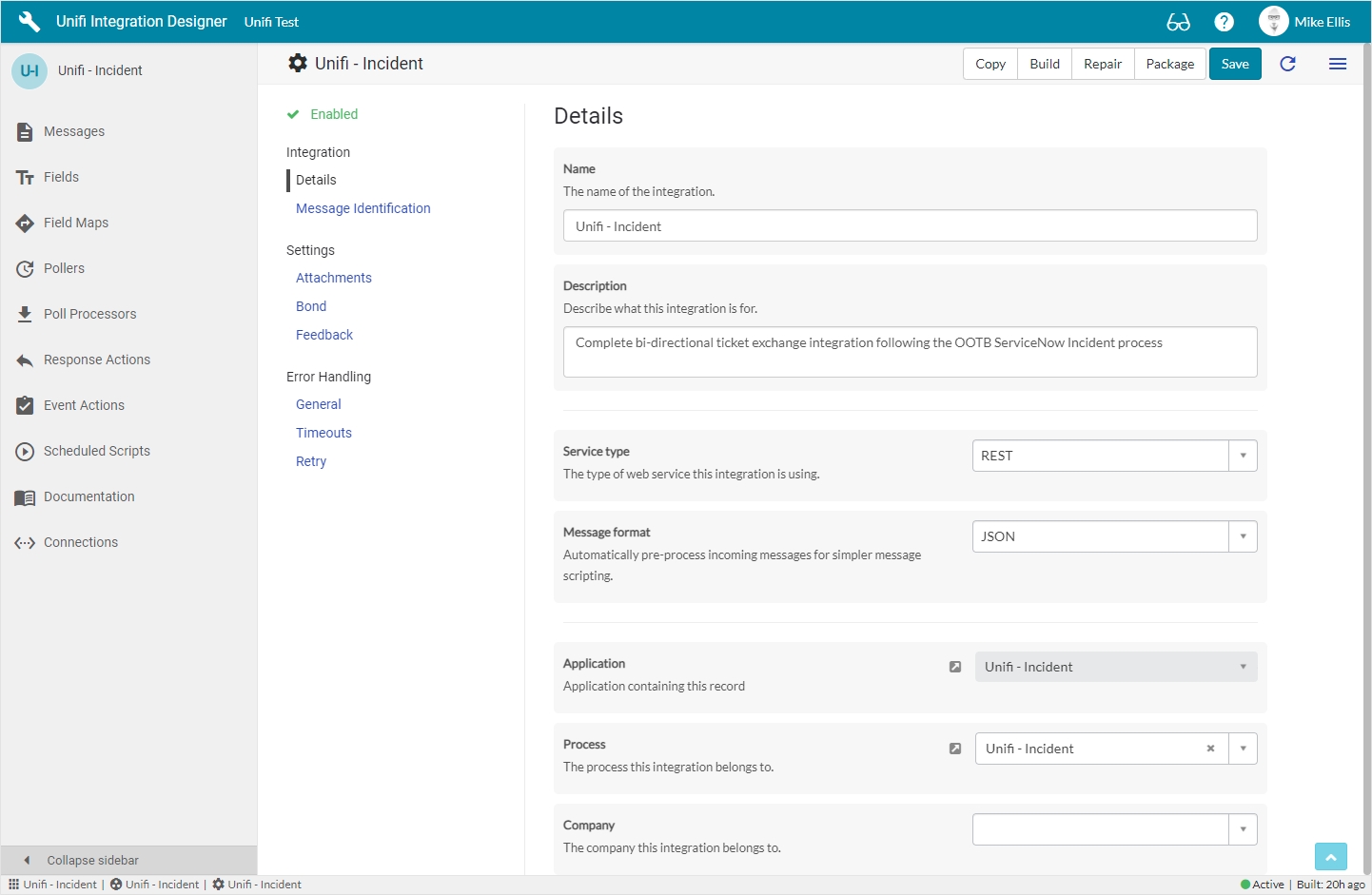

The first element to configure is the Process, which is the top level configuration element where all Integrations are contained.

The first thing to do when creating a new integration is to assign it to its Process. For instance, a new Incident integration would be created within an Incident Process. If you do not yet have a Process defined, then you will need to create a new Process.

From the Unifi Integration Designer Dashboard, click on New Process.

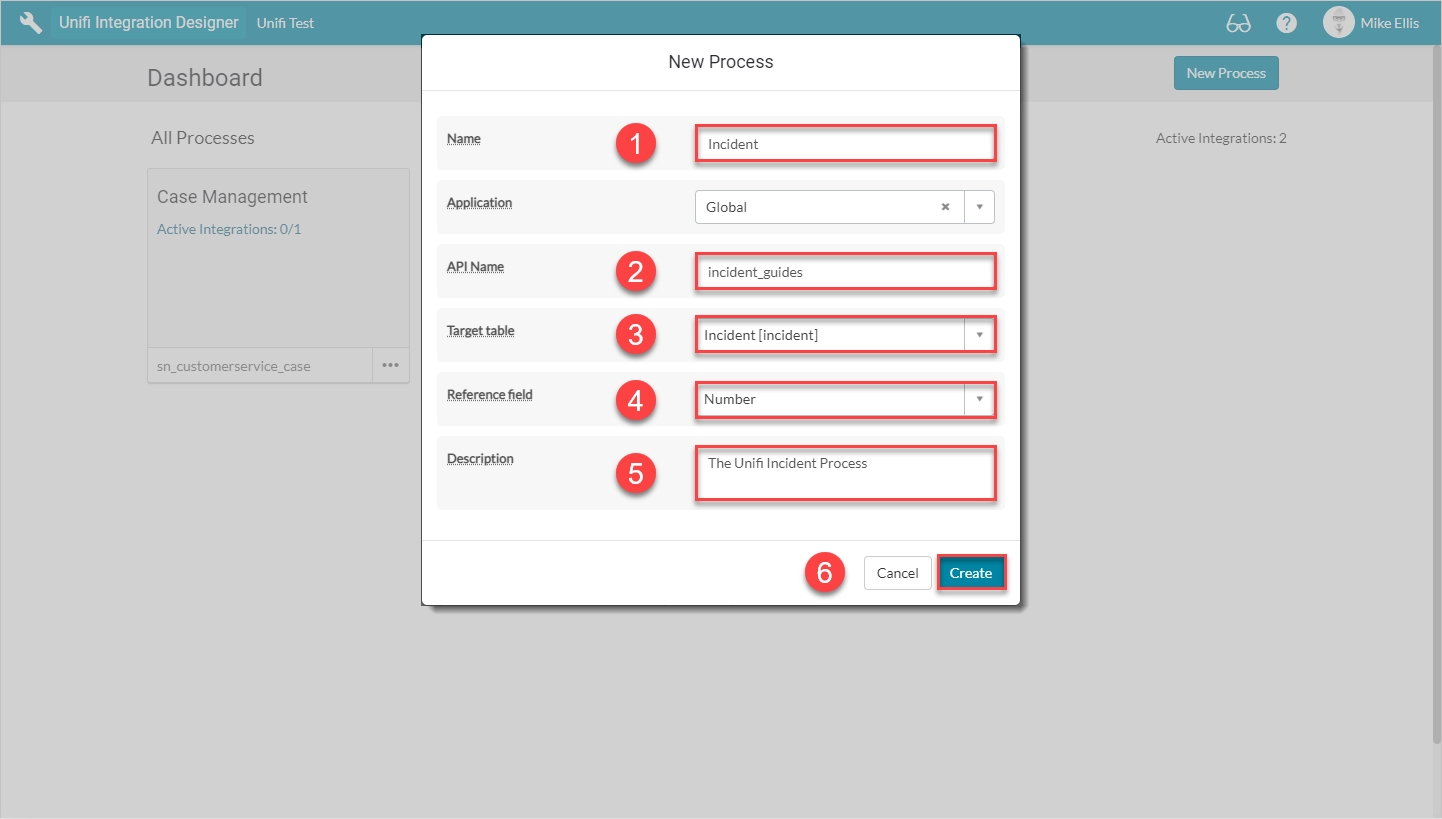

On the 'New Process' modal, the fields to be configured are as follows:

Follow these instructions to Package your Integration, ready for migration between ServiceNow instances.

Care needs to be taken when cloning an instance that uses Unifi, as the connections from that instance could potentially be active in the clone target. For example, if you clone your production instance over a development instance and do not manage the connections, your development instance could connect to the production target system.

Unifi comes with ways to deal with these scenarios:

Unifi has clone data preservers to prevent connection records in clone targets from being overwritten, and therefore to prevent production credentials and endpoints from making it to sub-production instances. However, care must be taken here, as you will need to add these preservers to any clone profiles you use.

This Guide utilises the Unifi Integration Designer portal interface which allows you to configure and manage integrations much more intuitively and with greater efficiency.

Set up an export schedule

Build

Open Integration Designer and select or create an Integration.

From the main integration menu, select Datasets.

Click New.

Fill in the required information and choose your configuration.

Click Submit and view.

Creating a new Dataset will automatically configure several dependencies in your instance. These are:

A Message called Process_<table>

A Message called Send_<table>

A Scheduled Import Set with the same name as the Dataset

A Transform Map

The Process Message handles the data mapping. Use the Fields list to configure which fields are being imported/exported.

From the Dataset details tab, navigate to the Process message and use the clickthrough button to open it.

From the Message, open the Fields list and add the fields that should be imported/exported. Each field will need to have a Dataset specific Field Map for transforming the data.

Ensure at least one field will Coalesce so the import can match to existing records.

Click Build Message.

The Send Message handles the data to be imported or exported as an attachment.

The Path will need to be configured depending on where the data is being sent. If you are connecting to another ServiceNow instance using a ShareLogic endpoint, you will likely need to configure the Path to use the dataset web service at path "/dataset".

From the Dataset details tab, navigate to the Send message and use the clickthrough button to open it.

Open the Outbound > Settings page.

Configure the Path as required, e.g. "/dataset".

Click Save.

Datasets have the same schedule logic as any other scheduled job and can be configured to export data whenever needed.

Open the Dataset.

Click Scheduling to open the scheduling tab.

Configure the desired schedule.

Click Save.

Several important features are built into the integration build process for Datasets. Ensure you build the integration whenever you have finished adding or making major changes to a Dataset.

Scheduled Import Sets are created and updated.

Transform Maps are created and updated.

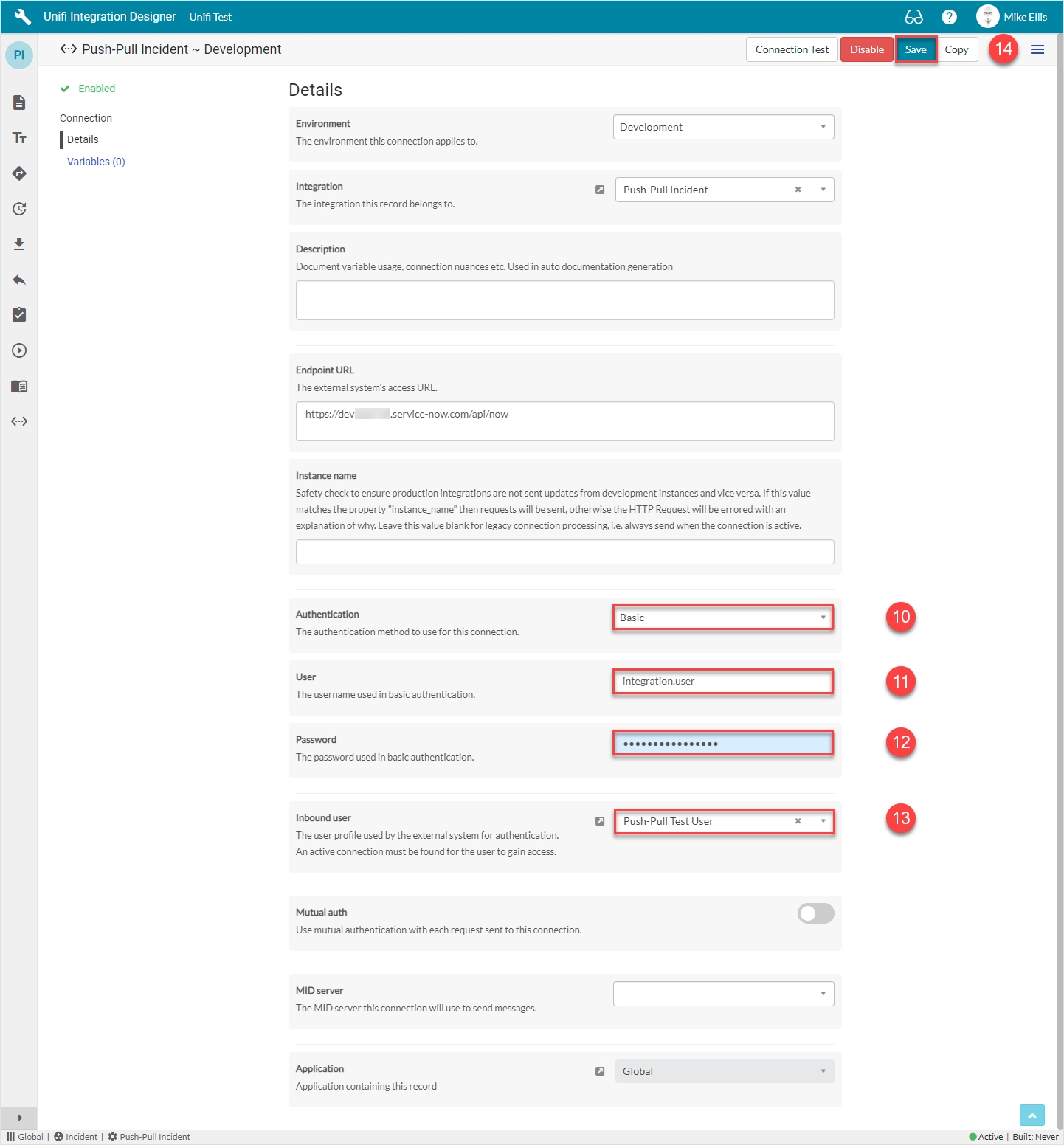

This is the best way to guarantee that a production connection cannot be triggered from a sub-production instance, or indeed that no connection can be triggered from an instance it is not specified for. The connection record contains a string field called instance name. In this field, add the name of the instance that this connection should run against. For example, if ACME's production instance is acme.service-now.com, the production connection's instance name field should be set to acme; if ACME's development instance is acmedev.service-now.com, the development connection's instance name field should be set to acmedev. A message will only be sent when the connection's instance name matches the instance it is being triggered from. If an attempt is made to trigger an integration from an instance that doesn't match, the corresponding transaction will be errored and the reason will be provided.
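The instance name guard can be sketched as a simple comparison. Here the current instance name is derived from the host name for illustration only (on the platform it would more likely come from the `instance_name` system property); `instanceNameFromHost` and `canSend` are hypothetical helpers, not Unifi APIs.

```javascript
// Sketch of the instance name guard: derive the instance name from the host
// (the part before ".service-now.com") and compare it with the Connection's
// instance name field. Helper names are hypothetical.
function instanceNameFromHost(host) {
  const suffix = ".service-now.com";
  return host.endsWith(suffix) ? host.slice(0, -suffix.length) : host;
}

function canSend(connectionInstanceName, currentHost) {
  const current = instanceNameFromHost(currentHost);
  if (connectionInstanceName !== current) {
    return {
      ok: false,
      reason: "Connection is for '" + connectionInstanceName + "' but this instance is '" + current + "'"
    };
  }
  return { ok: true };
}

console.log(canSend("acme", "acme.service-now.com").ok);    // true
console.log(canSend("acme", "acmedev.service-now.com").ok); // false
```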

There are clone clean-up scripts which will deactivate Unifi in the target clone instance, as well as turning off all active connections. The same caveat as for data preservers applies: make sure that any clone profiles you use reference them. Also make sure to enable these scripts if you want to use them, as they are disabled by default (we prefer the data preservers).

Make sure you add Unifi to your post-clone checklist. Even if you employ the methods highlighted above, it's prudent to double-check Unifi integrations after a clone.

Activity Log will show “No logs.” if no logs are generated. [UN-768]

Errors are no longer duplicated in Activity Log to make it easier to debug. [UN-769]

Improved documentation interface in UID. [UN-772]

Message Scripts now only throw their own errors (e.g. with Fields). [UN-749]

Improved spacing with UID Dashboard grid items. [UN-770]

Import the file as an update set, then preview and commit it. You can find more information on how to Load customizations from a single XML file in the ServiceNow Product Documentation.

Method: snd_eb_util.executeNow(job)

This is necessary for Datasets to execute their associated ServiceNow Import Job.

Method: snd_eb_util.getSoapResponseElement(xml)

When working with Scripted SOAP Services, it’s important to be able to set the soapResponseElement directly in order to preserve the exact payload to be sent back to the calling system. This can only be done with the Global Utility.

Method: snd_eb_util.moveAttachments(attachment_ids, record)

The ServiceNow scoped attachment API does not support moving attachments from one record to another. This is necessary for inbound attachments which initially reside on the HTTP Request and are then moved to the Target record.

Method: snd_eb_util.runAsUser(user, fn)

On occasion, Unifi needs to run some code as a given user. This is necessary for things like replaying requests.

Method: snd_eb_util.runJelly(jelly_code, vars)

Jelly processing is not supported in the ServiceNow scoped APIs; however, it is very useful for XML processing. If you are working with XML payloads, use of the Global Utility drastically improves Unifi's XML payload capabilities.

Method: snd_eb_util.validateScript(script, scope)

Used by the Unifi Integration Diagnostic to check scripts for errors.

Method: snd_eb_util.writeAttachment(record, filename, content_type, data)

The Scoped Attachment API does not support writing binary attachments, which can cause problems when receiving things like Word documents or PDFs. This method allows Unifi to use the global attachment API to write those files to the database, meaning they will work properly.

Method: snd_eb_util.packager.*

The packager methods included in the global utility allow Unifi to automatically export all the components of an integration in one easy step. The packager methods will allow Unifi to create an update set for the integration, add all the configuration records to that update set, and export it as a file download from the Integration page on the Unifi Integration Designer portal.

Method: snd_eb_util.web_service.*

The web service methods included in the global utility allow Unifi to automatically create and update REST Methods used by Unifi integrations.

Method: snd_eb_util.trigger_rule.*

The trigger methods included in the global utility allow Unifi to automatically create a Trigger Business Rule on a table if one doesn't already exist.

Method: snd_eb_util.datasetCreateDataSource(dataset, attachment)

Create a data source for datasets to use when importing data.

Method: snd_eb_util.datasetDeleteScheduledImport(scheduled_import_id)

Delete a scheduled import that belongs to and was created by a Dataset. Used when deleting a Dataset.

Method: snd_eb_util.datasetDeleteTransformMap(transform_map_id)

Delete a transform map that belongs to and was created by a Dataset. Used when deleting a Dataset.

Method: snd_eb_util.datasetEnsureTransformMap(dataset)

Update a transform map for a Dataset to use when importing data. Used when building a Dataset.

Method: snd_eb_util.datasetUpdateTransformMapFields(dataset)

Update the coalesce fields for a transform map. Used when building a Dataset.

```javascript
// Request a new access token using the stored refresh token
function refreshAccessToken(requestorId, oauthProfileId, token) {
    if (!(token && requestorId && oauthProfileId)) return;

    var tokenRequest = new sn_auth.GlideOAuthClientRequest();
    tokenRequest.setGrantType("refresh_token");
    tokenRequest.setRefreshToken(token.getRefreshToken());
    tokenRequest.setParameter('oauth_requestor_context', 'sys_rest_message');
    tokenRequest.setParameter('oauth_requestor', requestorId);
    tokenRequest.setParameter('oauth_provider_profile', oauthProfileId);

    var oAuthClient = new sn_auth.GlideOAuthClient();
    var tokenResponse = oAuthClient.requestTokenByRequest(null, tokenRequest);
    var error = tokenResponse.getErrorMessage();
    if (error) gs.warn("Error: " + error);
}

// True when the remaining lifetime (in seconds) is within the given window
function isExpired(expiresIn, withinSeconds) {
    return expiresIn <= withinSeconds;
}

function getToken(requestorId, oauthProfileId) {
    if (!requestorId || !oauthProfileId) return null;
    var client = new sn_auth.GlideOAuthClient();
    return client.getToken(requestorId, oauthProfileId);
}

function checkAndRefreshAccessToken(grRestMessage) {
    if (grRestMessage.getValue("authentication_type") != "oauth2") return;

    var accountMsg = grRestMessage.getValue("name") || grRestMessage.getUniqueValue();
    accountMsg = "Account=\"" + accountMsg + "\"";

    var token = getToken(grRestMessage.getUniqueValue(), grRestMessage.getValue('oauth2_profile'));
    if (!token) {
        gs.error("No OAuth token found for Rest Message. Manual authorization required. " + accountMsg);
        return;
    }

    // Nothing to do while the access token is valid for more than 5 minutes
    if (token.getAccessToken() && !isExpired(token.getExpiresIn(), 300)) return;

    if (!token.getRefreshToken()) {
        gs.error("No OAuth refresh token for Rest Message. Manual reauthorization required. " + accountMsg);
        return;
    }

    if (isExpired(token.getRefreshTokenExpiresIn(), 0)) {
        gs.error("OAuth refresh token for Rest Message is expired. Manual reauthorization required. " + accountMsg);
        return;
    }

    gs.info("Refreshing oauth access token for Rest Message account. " + accountMsg);
    refreshAccessToken(grRestMessage.getUniqueValue(), grRestMessage.getValue('oauth2_profile'), token);
}

// Check every REST Message that uses OAuth 2.0 with a profile configured
var grAccount = new GlideRecord("sys_rest_message");
grAccount.addQuery("authentication_type", "oauth2");
grAccount.addNotNullQuery("oauth2_profile");
grAccount.query();

while (grAccount.next()) {
    checkAndRefreshAccessToken(grAccount);
}
```

| Field | Type | Description |
| --- | --- | --- |
| Direction | Choice | The direction of the original record. Choices: None, Inbound, Outbound |
| Data | String | The JSON representation of the original record. |
| Name | String | The Number (unique identifier) of the original record. |
| Scenario | Reference | The Integration Test Scenario this data object belongs to. |
| Type | Choice | Choices correlate to the different transport stack records. |

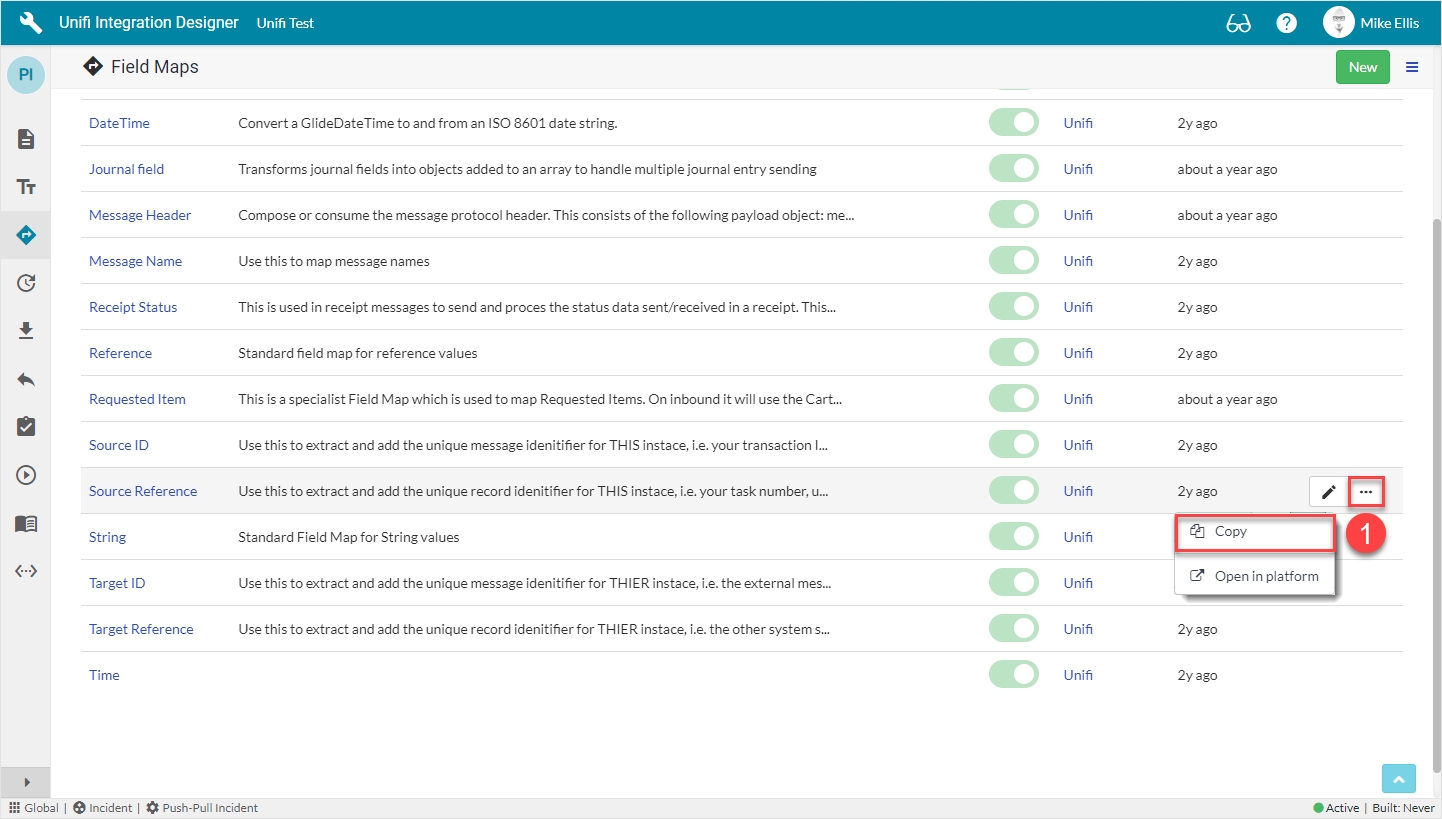

The data being sent for each Message is mapped using the Field records. For more information, see the 'Fields' & 'Field Maps' pages.

The following table gives a description of the fields that are visible on the Stage record:

| Field | Type | Description |
| --- | --- | --- |
| Number | String | The unique Stage identifier. |
| Internal reference | String | The ServiceNow ticket reference. |
| External reference | String | The external system’s ticket reference. |

The following is an example of a Stage record:

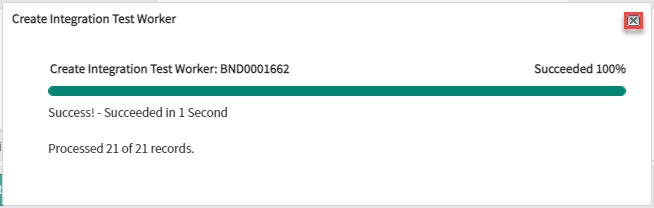

The Create Integration Test Worker modal is displayed, showing progress. Close the modal.

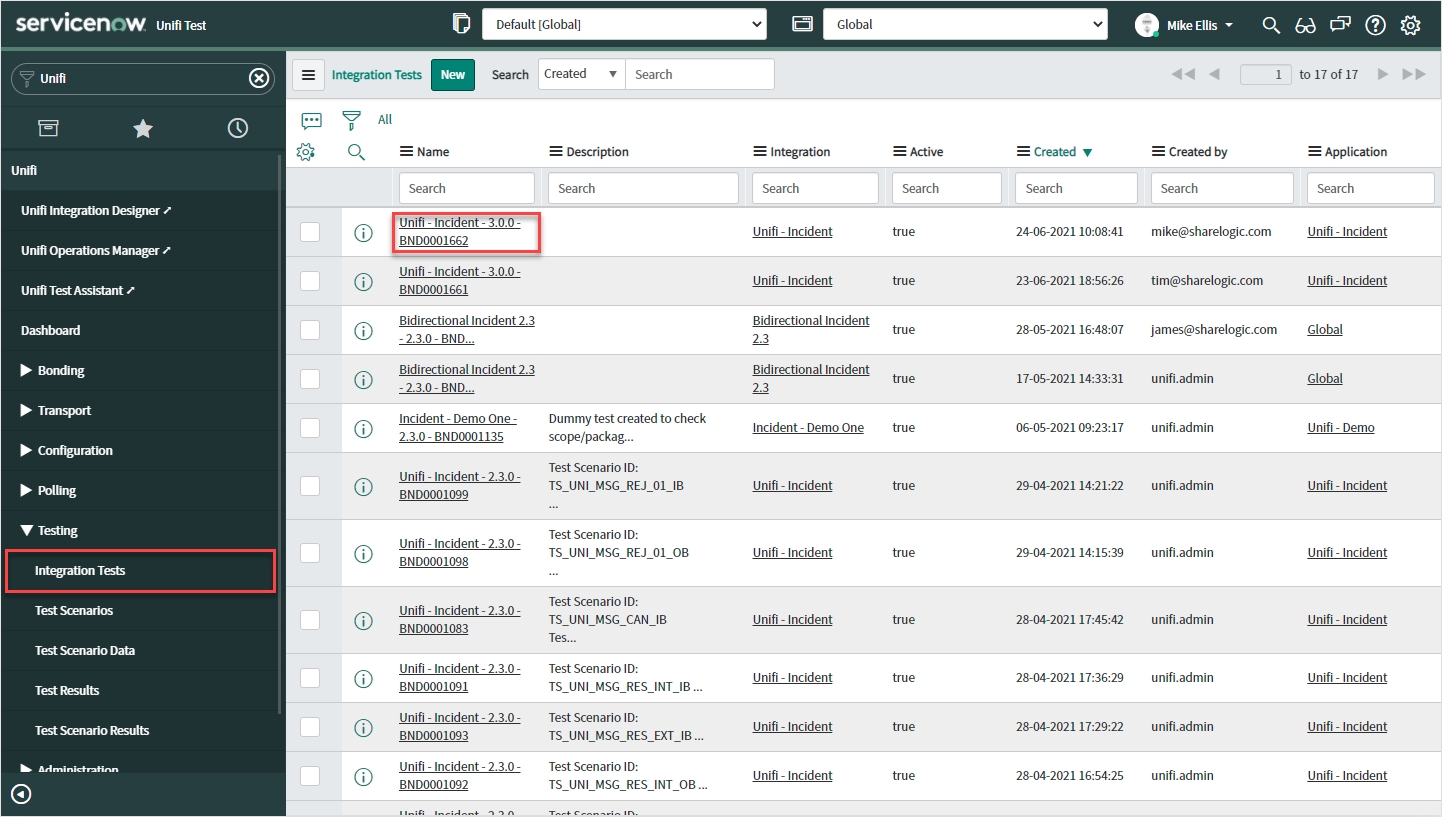

The automated Integration Tests have been created and can be viewed by navigating to Unifi > Testing > Integration Tests.

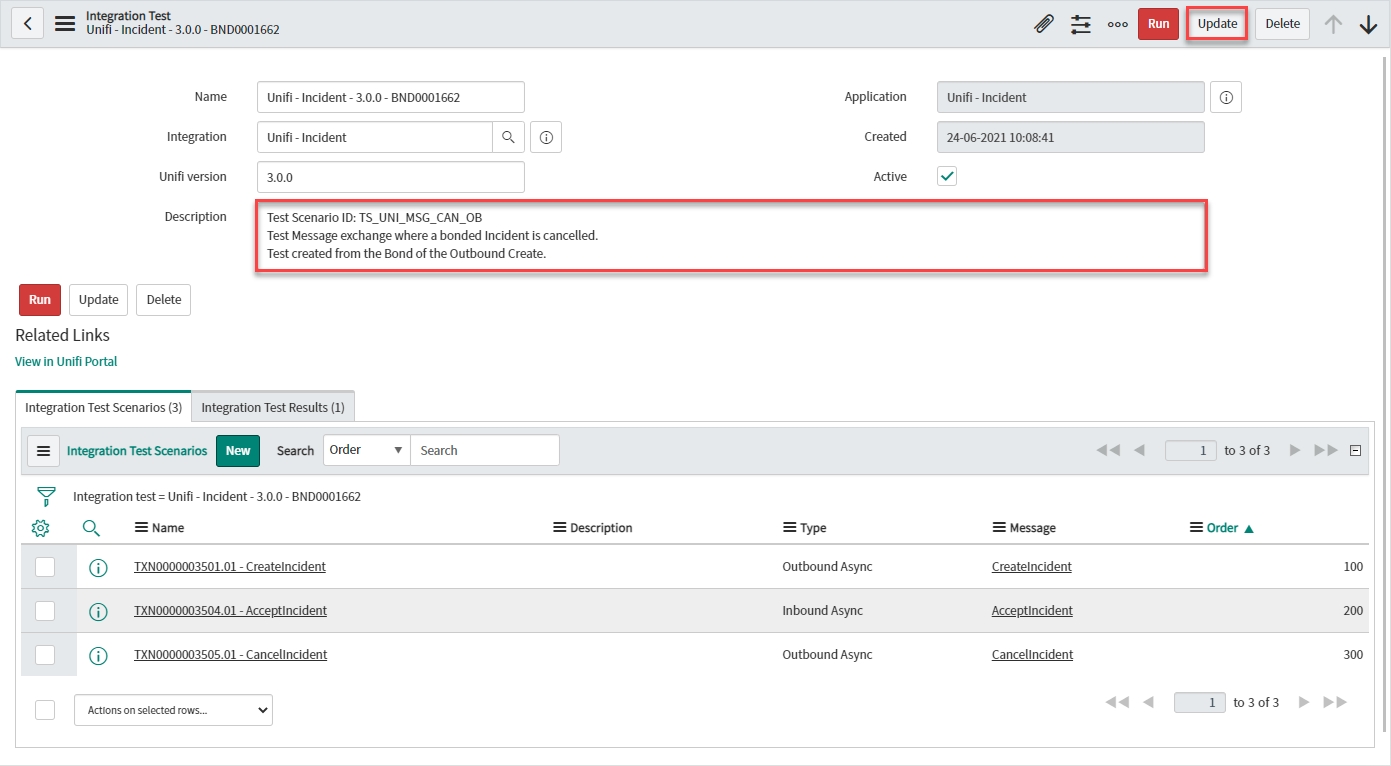

We recommend that you add your own meaningful Description to the Test and click Update.

Note the following about the Test that was created.

More information about each of the records created can be found in the Testing section of our Documentation.

If you're the kind of person that likes to know how things work, we've included this information just for you.

When you click 'Create Test', Unifi will create an Integration Test record for that Bond.

It will then take the first Transaction on the Bond and create an Integration Test Scenario record for that Transaction.

Once that is done, it will look for all the relevant transport stack records (Snapshot, Stage, Bond, HTTP Request, Transaction) that pertain to that specific Transaction, create the relevant Integration Test Scenario Data object for each record, and add them to that Integration Test Scenario.

It will then loop through each of the subsequent Transactions on the Bond, repeating the process for each (creating an Integration Test Scenario record and adding the relevant Integration Test Scenario Data objects).
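The generation loop described above can be sketched in plain JavaScript. This is a hypothetical illustration, not Unifi's implementation: plain objects stand in for the Bond, Transaction and transport stack records, and the property names (`transactions`, `stackRecords`, `transaction`, `fields`) and the function name `createIntegrationTest` are assumptions made for the example.

```javascript
// Hypothetical sketch of the 'Create Test' steps; not Unifi's code.
// Plain objects stand in for ServiceNow records, and every record in
// bond.stackRecords is assumed to be tagged with the Transaction it pertains to.
var STACK_TYPES = ['Snapshot', 'Stage', 'Bond', 'HTTP Request', 'Transaction'];

function createIntegrationTest(bond) {
    // The Integration Test correlates to the Bond it was created from
    var test = { bond: bond.number, scenarios: [] };

    // One Scenario per Transaction, in the order the Transactions appear on the Bond
    bond.transactions.forEach(function (txn, index) {
        var scenario = { name: txn.number, order: index + 1, data: [] };

        bond.stackRecords.forEach(function (rec) {
            if (rec.transaction !== txn.number) return;          // belongs to another Transaction
            if (STACK_TYPES.indexOf(rec.type) === -1) return;    // not a transport stack record
            // Each matching record becomes a Scenario Data object holding its JSON
            scenario.data.push({
                type: rec.type,
                name: rec.number,
                direction: txn.direction,
                data: JSON.stringify(rec.fields)
            });
        });

        test.scenarios.push(scenario);
    });

    return test;
}
```

Each Scenario's `order` simply follows the position of its Transaction on the Bond, mirroring the default described for the Order field of a Test Scenario.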

The fields that can be configured are as follows:

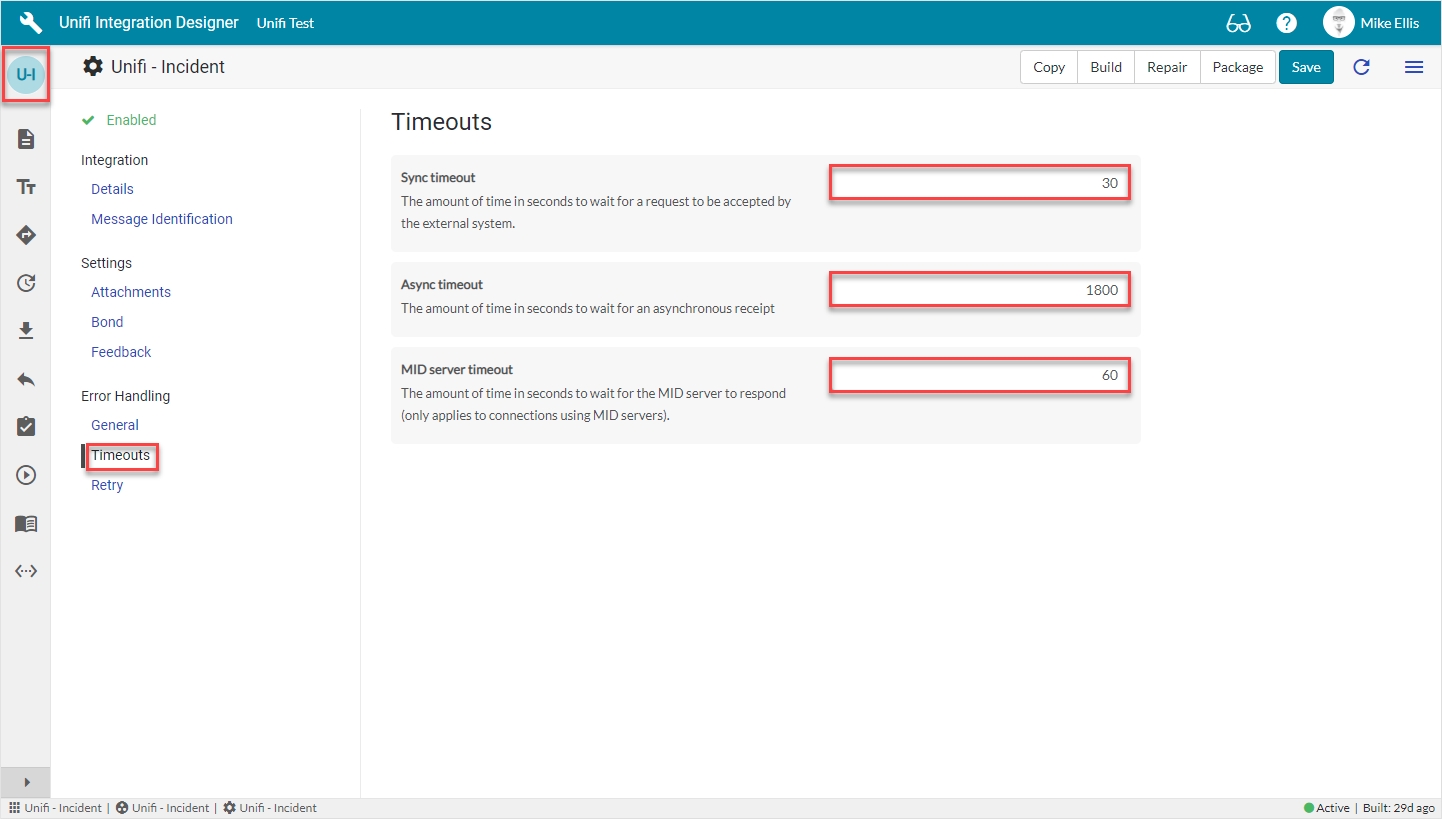

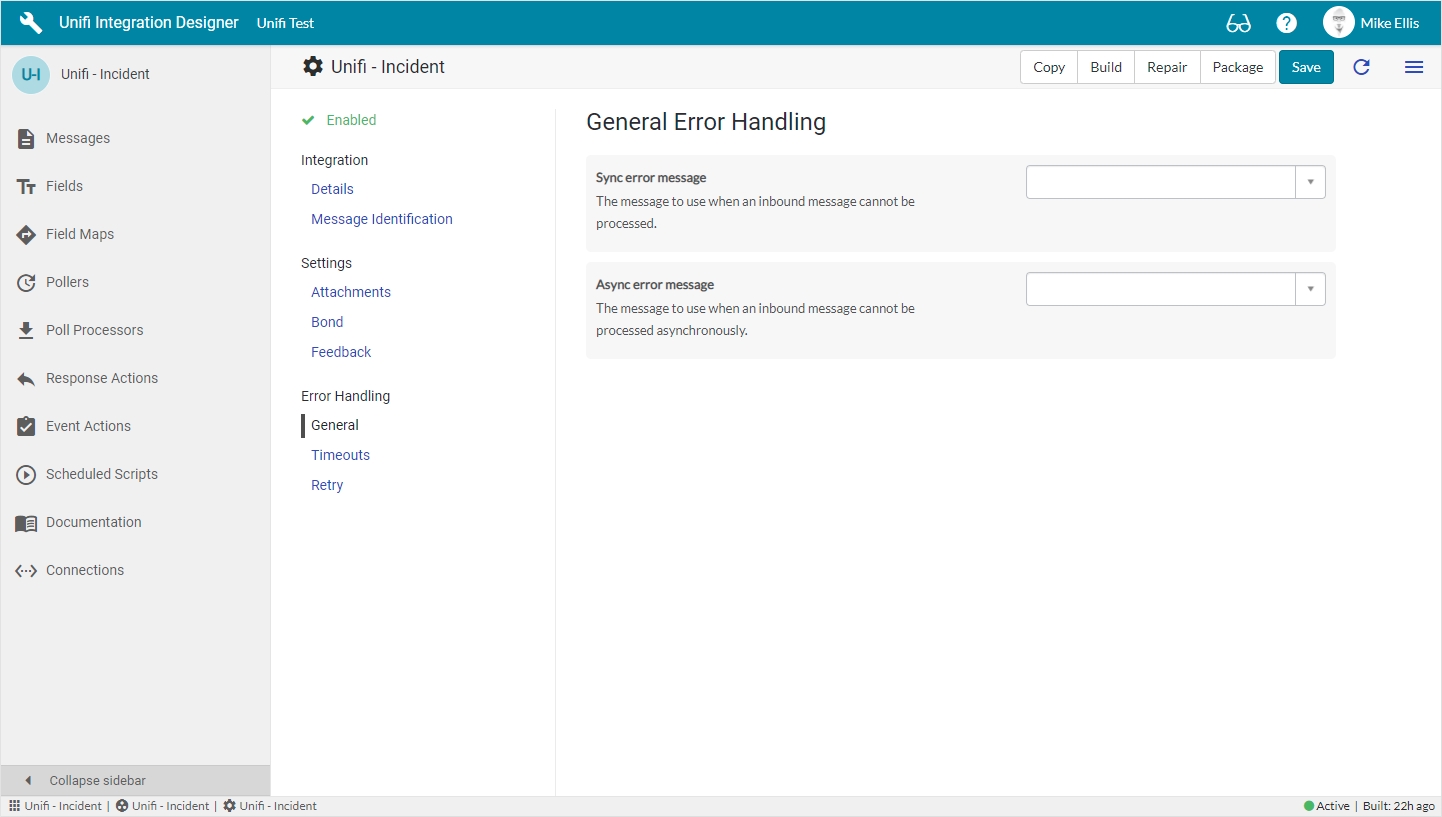

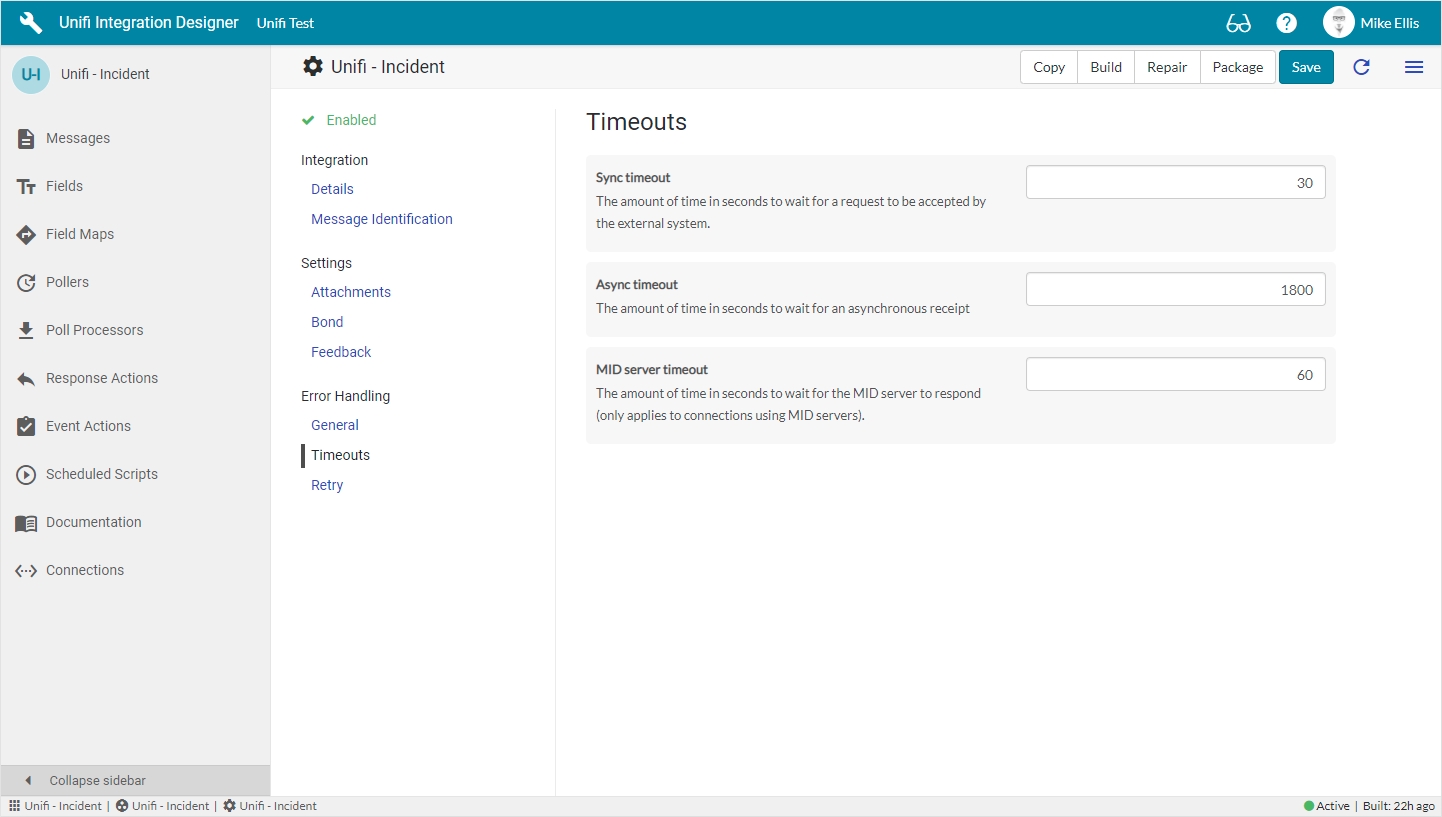

In Unifi Integration Designer, navigate to and open < The Integration you wish to configure >.

Click the ‘Integration’ icon (this will open the Details page).

Navigate to Error Handling > Timeouts.

The Timeout fields that can be configured for the Integration are as follows:

| Field | Description |
| --- | --- |
| Sync timeout | The amount of time, in seconds, to wait for a request to be accepted by the external system. |
| Async timeout | The amount of time, in seconds, to wait for an asynchronous receipt. |
| MID server timeout | The amount of time, in seconds, to wait for the MID server to respond (applies only to connections using MID servers). |

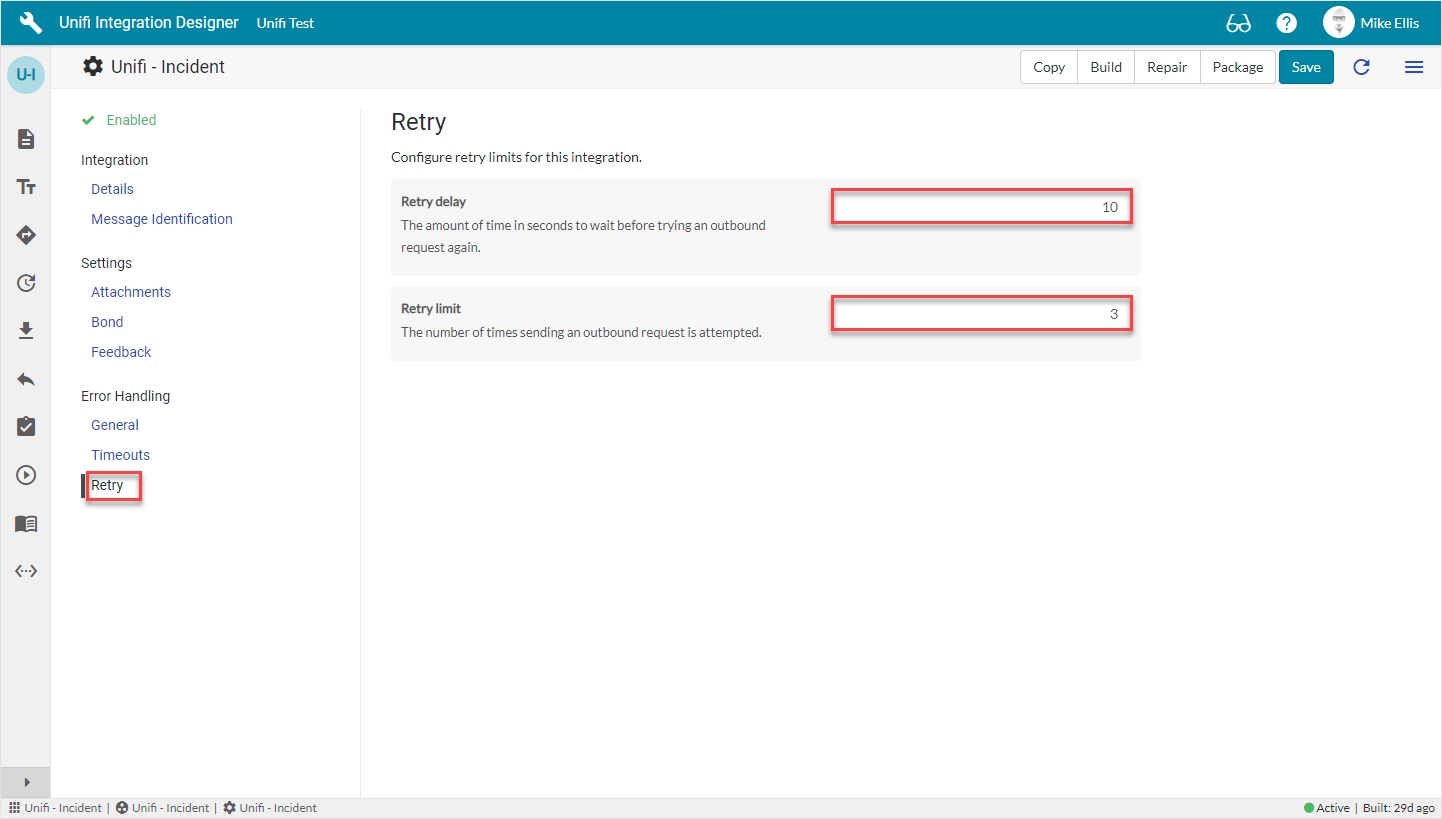

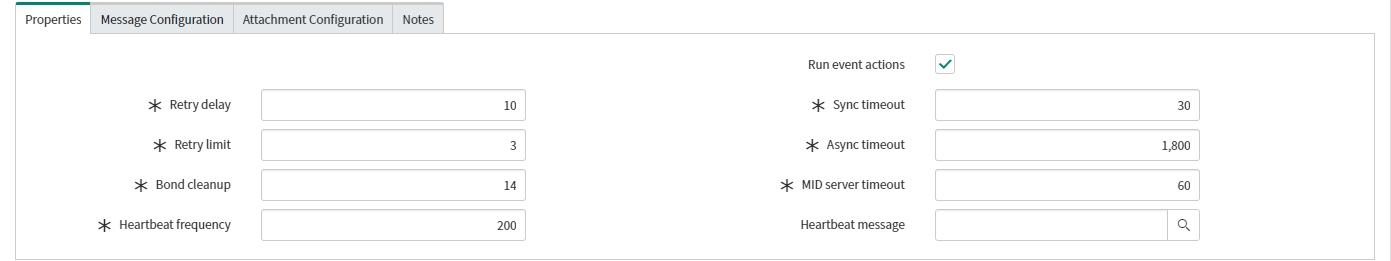

Navigate to Error Handling > Retry.

The Retry fields that can be configured for the Integration are as follows:

| Field | Description |
| --- | --- |
| Retry delay | The amount of time, in seconds, to wait before trying an outbound request again. |
| Retry limit | The number of times sending an outbound request is attempted. |

Retry is automated in Unifi. Should the number of retries be exhausted, the Transaction will be errored and any subsequent Transactions are queued. This prevents Transactions from being sent out of sync and updates being made to bonded records in the wrong sequence.
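To make the retry and queueing behaviour concrete, here is a minimal sketch in plain JavaScript. It is not Unifi's implementation; it assumes that `send(txn)` returns `true` on success, that Retry limit counts the total number of attempts, and that a `sleep(seconds)` function is injected so the example runs instantly. The function name `processOutbound` and the state strings are illustrative.

```javascript
// Hypothetical sketch of automated retry followed by queueing; not Unifi's code.
function processOutbound(transactions, send, settings, sleep) {
    var blocked = false;

    transactions.forEach(function (txn) {
        // Once a Transaction has errored, later ones wait so updates stay in sequence
        if (blocked) {
            txn.state = 'Queued';
            return;
        }
        for (var attempt = 1; attempt <= settings.retryLimit; attempt++) {
            if (send(txn)) {
                txn.state = 'Complete';
                return;
            }
            // Wait 'Retry delay' seconds before the next attempt (none after the last)
            if (attempt < settings.retryLimit) sleep(settings.retryDelay);
        }
        // Retries exhausted: error this Transaction and hold back the rest
        txn.state = 'Error';
        blocked = true;
    });

    return transactions;
}
```

With a Retry limit of 3, a Transaction that keeps failing is attempted three times with two delays in between; every Transaction after it stays Queued, which is what keeps the bonded records from being updated out of order.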

There are a number of UI Actions available to help and subsequent sections will look at each of those in turn.

In the next section, we'll look at the first of those UI Actions, the Replay feature.

Integration Tests are created directly from the Bond record. At the click of a button, Unifi will generate a test which comprises each of the Transaction scenarios on that Bond.

The generated tests are used to check Unifi's processing of the data, i.e. whether Unifi behaves in the same manner and produces the same results when processing the generated test as it did when processing the original records. They check not only the data itself, but also the Unifi processes that trigger, transport and respond to that data as it moves through Unifi.

When running a test, no connection is made to the other system. Instead, Unifi calls a mock web service which responds with results from the original scenario. Unifi then tests what happens with that response. Doing this helps to ensure the accuracy of the test (testing the functionality of the Unifi process in your instance), without relying on input from an external instance (potentially adding further variables to the test).
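The mock-service idea can be sketched as follows. This is a hypothetical illustration rather than Unifi's mock web service: the scenario shape (`recorded.request`, `recorded.response`, `recorded.outcome`) and the functions `mockWebService` and `runScenario` are assumptions. The point it shows is that the local processing runs for real while the "external system" only replays the response recorded in the original scenario.

```javascript
// Hypothetical sketch; not Unifi's implementation.
// The mock never contacts the external system: it returns the response
// recorded when the original Transaction was processed.
function mockWebService(scenario) {
    return function (request) {
        return {
            status: scenario.recorded.response.status,
            body: scenario.recorded.response.body
        };
    };
}

// Runs the local processing logic against the mock and compares the outcome
// with the outcome recorded in the original scenario.
function runScenario(scenario, processUnderTest) {
    var send = mockWebService(scenario);
    var actual = processUnderTest(scenario.recorded.request, send);
    var expected = scenario.recorded.outcome;
    // Simplification: JSON comparison is sensitive to property order
    var passed = JSON.stringify(actual) === JSON.stringify(expected);
    return { name: scenario.name, passed: passed, actual: actual, expected: expected };
}
```

Because the response is fixed, any difference between `actual` and `expected` points at a change in the instance's own processing rather than at the other system.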

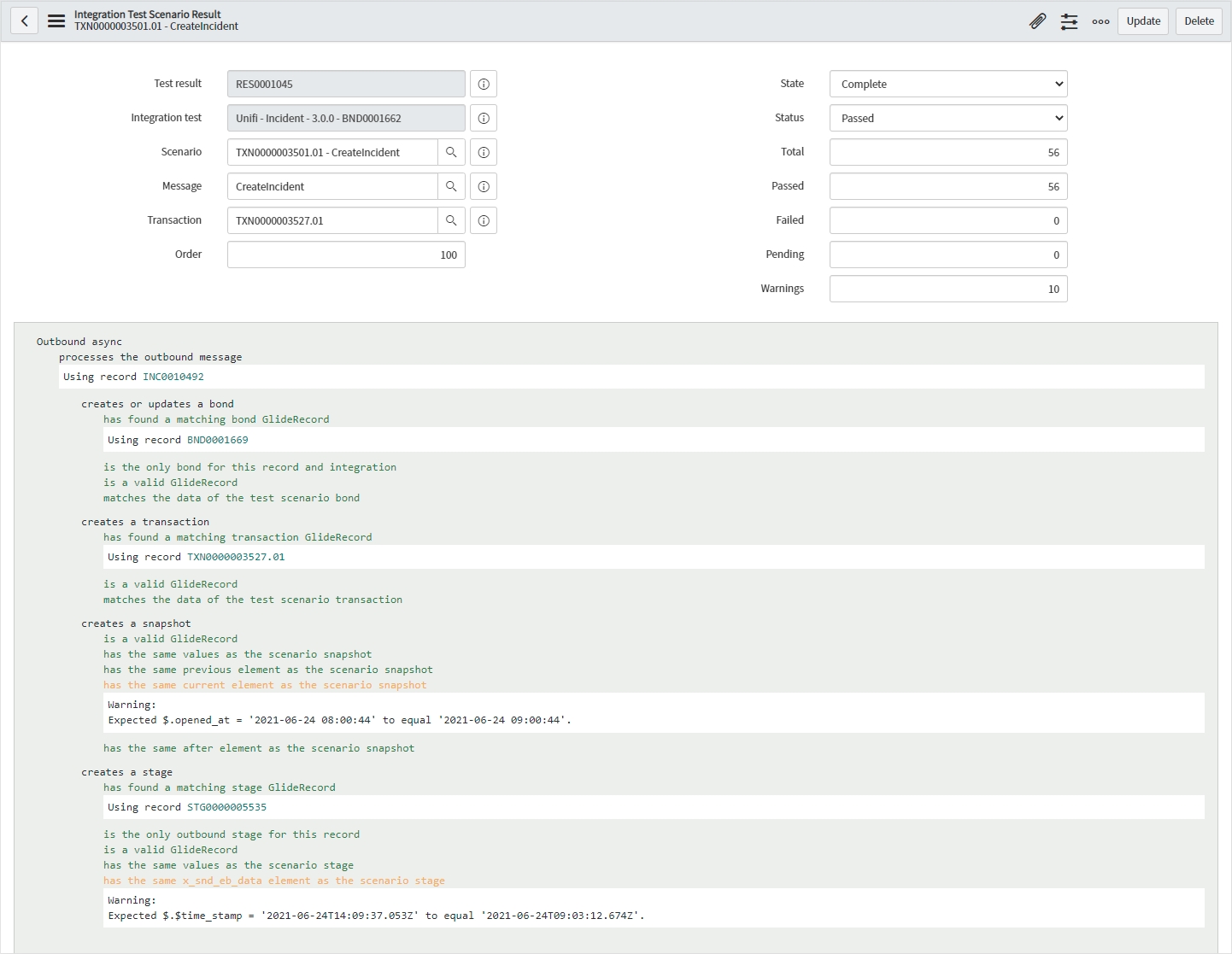

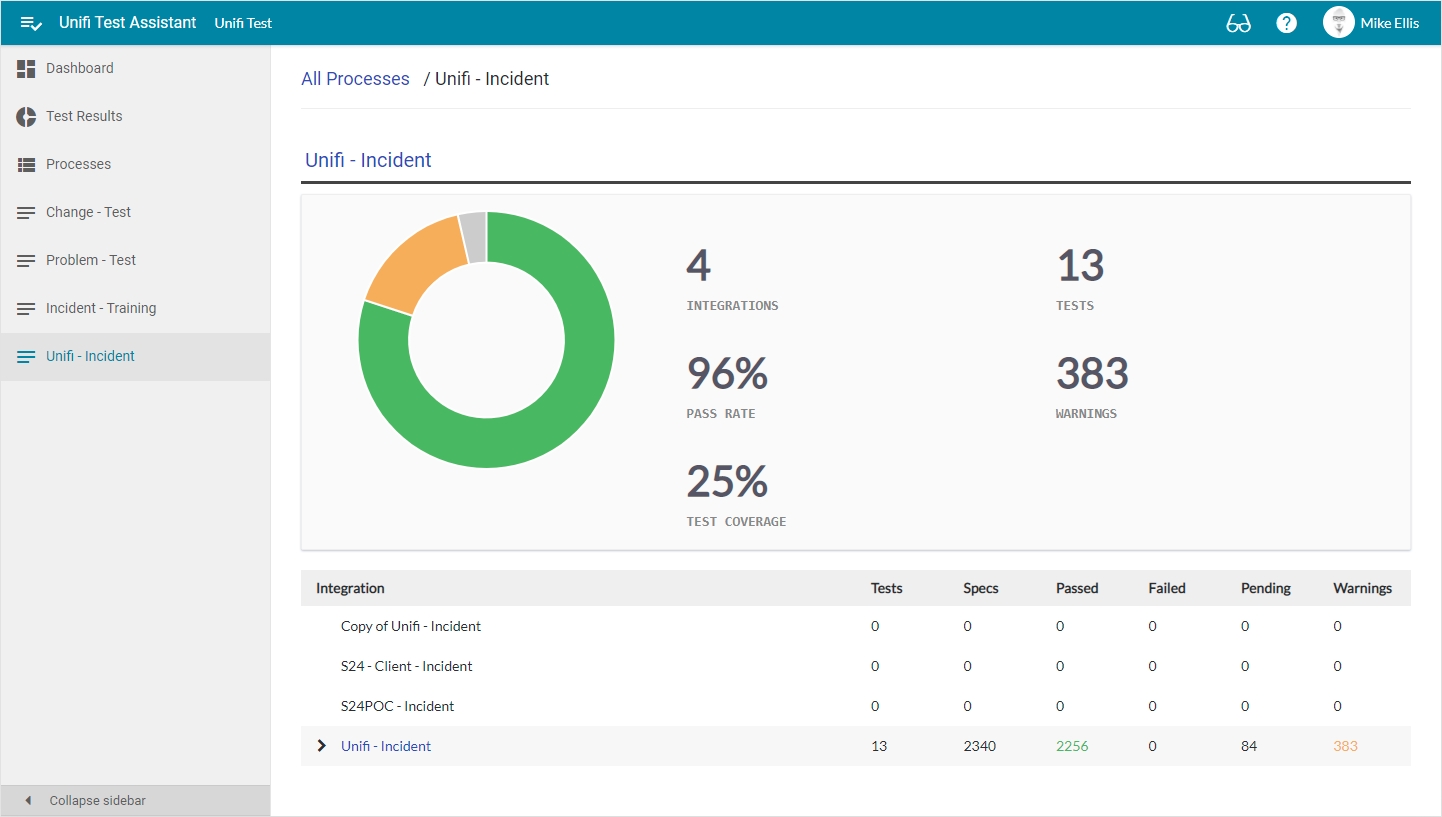

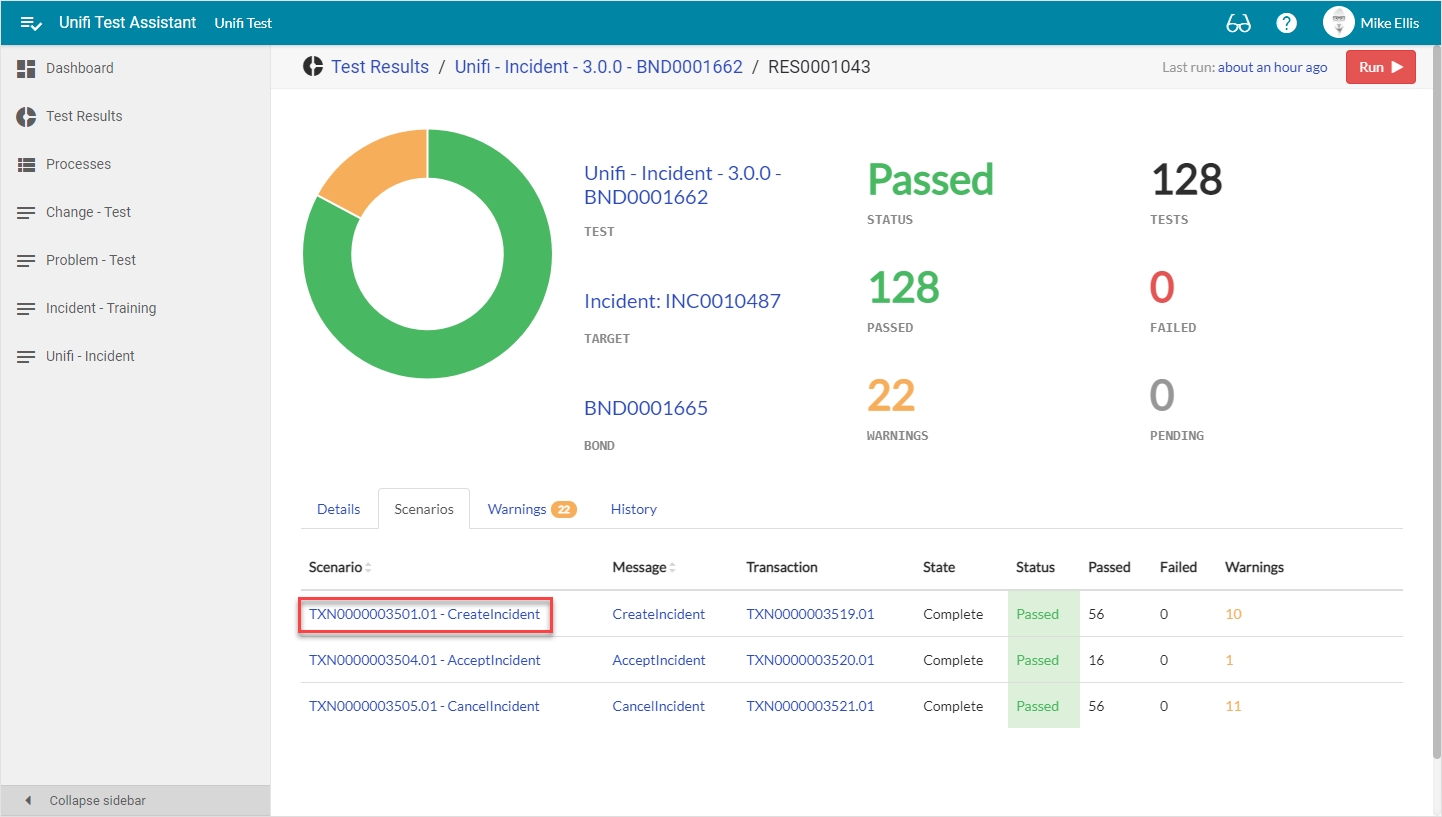

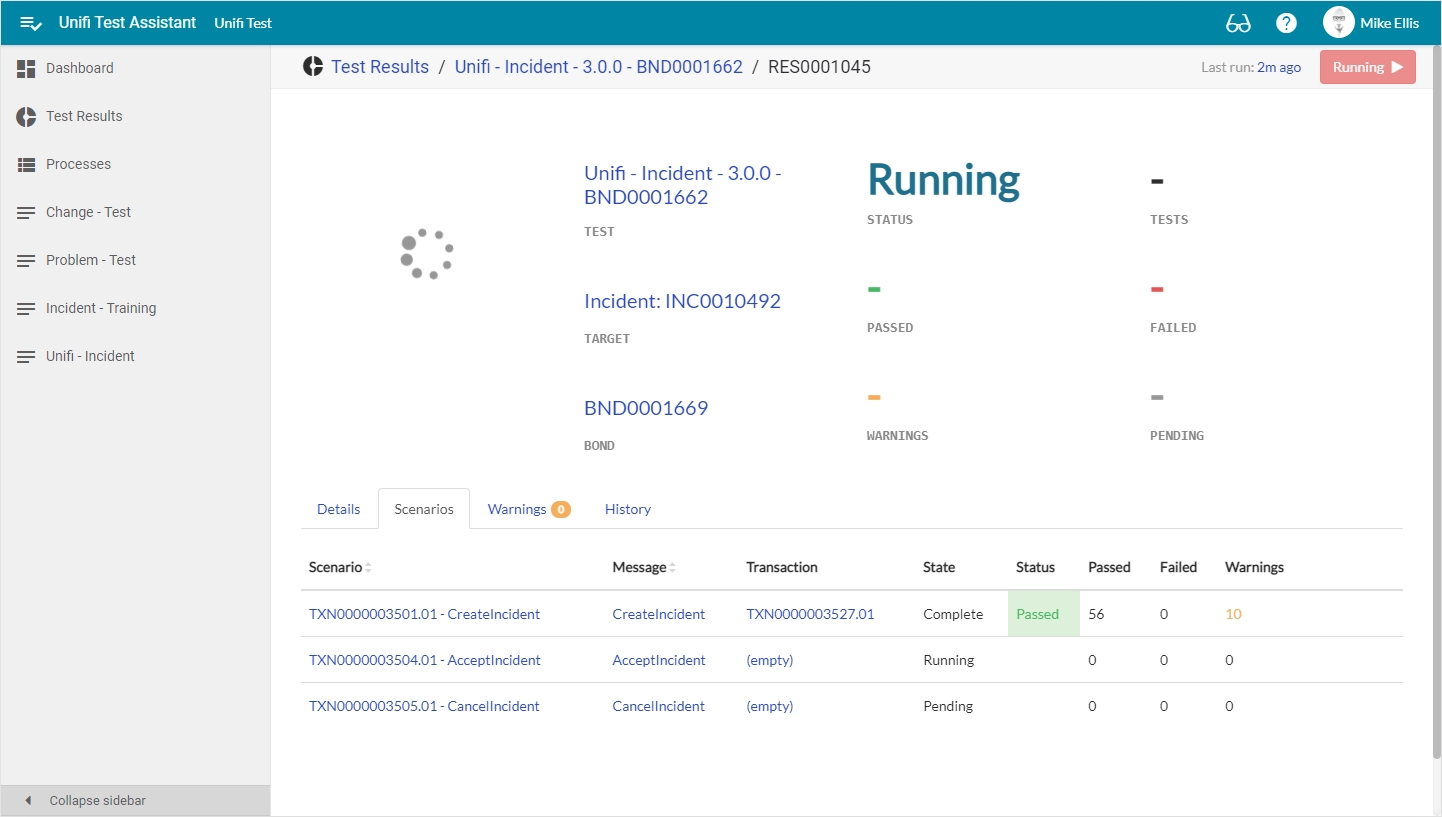

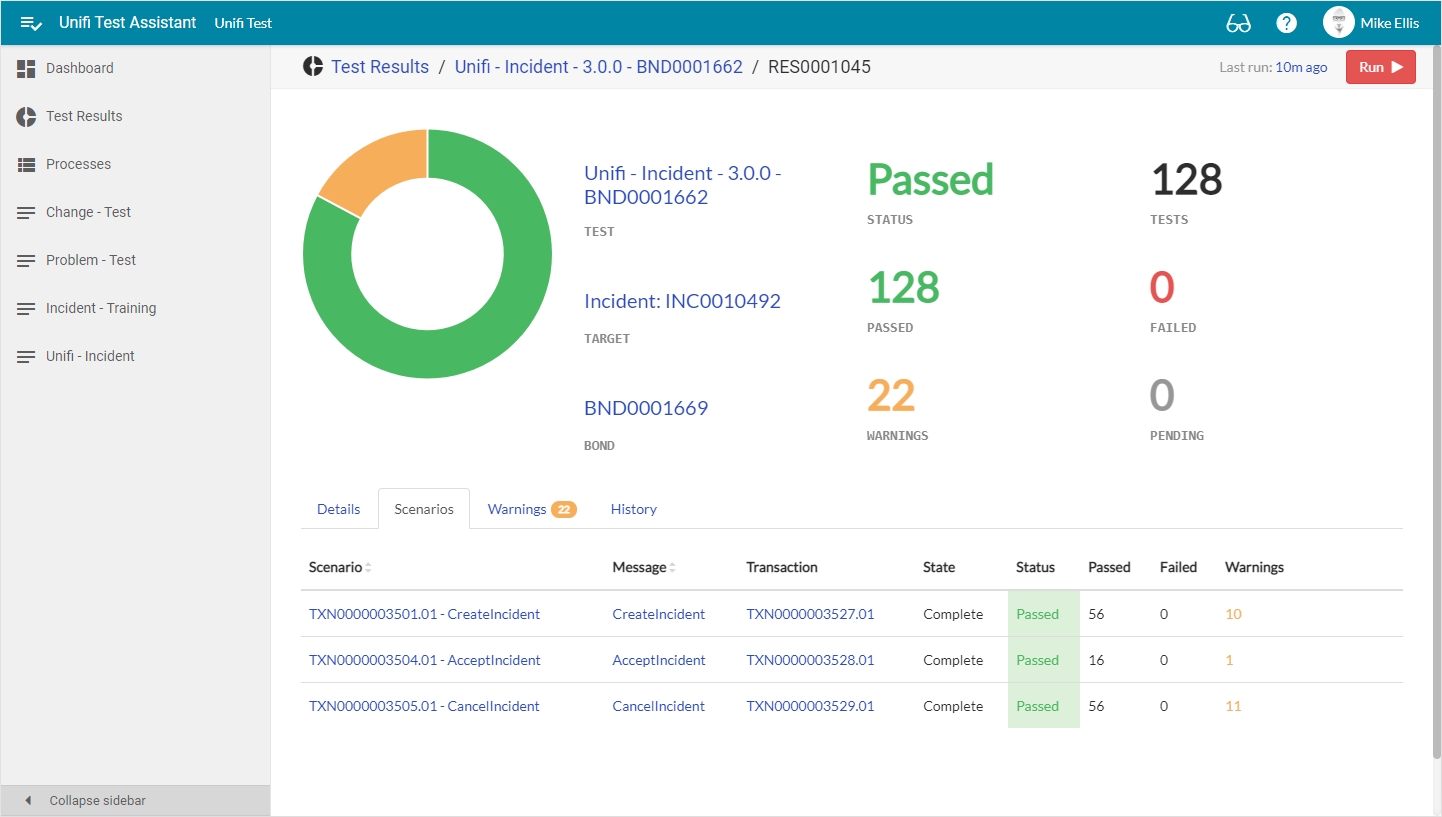

Exploring the results of the Integration Test is intuitive, efficient and informative using Unifi Test Assistant.

Whenever you package your Integration (for details, see the Packager Feature Guide), any Integration Tests you create will also be included along with the other elements of your packaged Integration.

Because tests are generated from real-world data in your instance, the data they use must also exist in any other instance in which you want them to work (i.e. the data contained on the bonded record, e.g. Caller, Assignment group, etc.).

If you change your process (e.g. change the structure of data objects being exchanged), you will need to generate new tests.

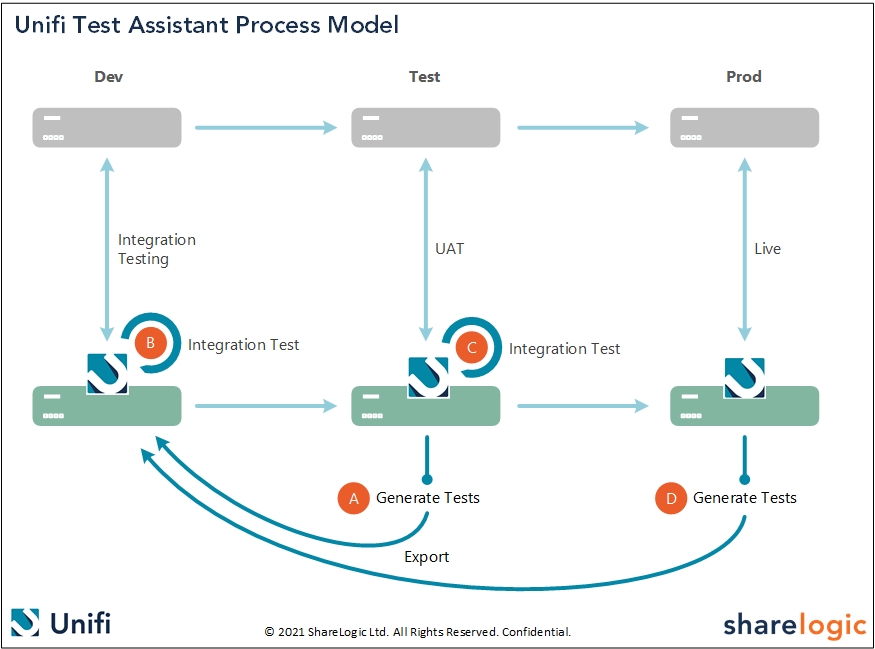

The Unifi Test Assistant Process Model shows how Unifi Test Assistant has been built to work with your integration and platform release process.

Unifi Test Assistant is designed to be used with integrations that are already working. It is not a replacement for unit testing.

A) Once UAT has been completed for the integration, Integration Tests can be generated from the resulting Bonds and imported back into Dev.

B) The Integration Tests can be executed as many times as required to perform regression testing for new platform upgrades, patch releases or for any other reason.

C) Integration Tests are packaged with the Integration and can be executed as part of UAT if required.

D) Integration Tests can be generated in Production to allow new or unforeseen scenarios to be captured and tested against in future release cycles.

The dedicated portal interface for running and exploring automated Integration Tests.

Integration Test is the overarching record containing all the elements of the automated Integration Test. It correlates to the Bond from which it was created and comprises each of the Transaction scenarios on that Bond.

Integration Test Scenarios are the elements that make up an Integration Test. Each Scenario will correlate to the relevant Transaction on the Bond from which the test was created. Each contains the relevant Test Scenario Data objects for the particular Scenario.

Test Scenario Data is a JSON representation of all the relevant records created during the processing of a Transaction (e.g. HTTP Request, Transaction, Bond, Snapshot) and is used to both generate the test and ascertain the results of each test run.

Whenever you run an Integration Test, the results are captured in an Integration Test Result record. The record links to and contains a summary of each of the individual Test Scenario Results.

Whenever you run an Integration Test Scenario, the results are captured in an Integration Test Scenario Result record. The results of each Test Scenario are tallied and rolled up to the parent Integration Test Result record.
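The roll-up from Scenario Results to the parent Integration Test Result can be pictured with a short sketch. The record shapes, the `Passed`/`Failed` labels and the function name `rollUpResults` are assumptions for illustration; the actual result records may hold more detail.

```javascript
// Hypothetical sketch of tallying Scenario Results into the parent Test Result.
function rollUpResults(testName, scenarioResults) {
    var summary = {
        test: testName,
        total: scenarioResults.length,
        passed: 0,
        failed: 0,
        scenarios: []
    };

    scenarioResults.forEach(function (r) {
        if (r.passed) summary.passed++;
        else summary.failed++;
        // The parent links to (and summarises) each individual Scenario Result
        summary.scenarios.push({ name: r.name, status: r.passed ? 'Passed' : 'Failed' });
    });

    // The overall test only passes when every Scenario passed
    summary.status = summary.failed === 0 ? 'Passed' : 'Failed';
    return summary;
}
```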

We will give step-by-step instructions on how to generate, run and explore automated Integration Tests.

Unifi Managers can ignore any Transaction that is not Complete or already Ignored.

You would normally use Ignore for Transactions that are Queued, Timed Out or in an Error state. You would perhaps ignore a Transaction that cannot be processed because it is broken and you know it won't work.

Another example: you might choose to ignore an update because the other system has processed it but not responded correctly (putting the Transaction in Error). Rather than replaying it and duplicating the update, it would be better to ignore it. You may even have a number of Transactions that you want to ignore (perhaps when the other system has been unavailable).

We've already said that setting Transactions to Ignored stops the queue from processing. Unifi doesn't automatically continue processing subsequent Transactions; they remain Queued. This is because a focused environment is more useful when debugging: Transactions firing off automatically could blur the issues.

It requires user intervention to manually restart the queue and process those subsequent Transactions. This is done via the Process Now UI Action.

In the case of an Outbound Transaction with an attachment being ignored, the associated Bonded Attachment record will be set to Rejected. This means that it will be available to be sent with the next Transaction.
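The Ignore and Process Now behaviour described above can be summarised in a small sketch. It is a hypothetical model, not Unifi's code: `ignoreTransaction`, `processNow`, the `attachment` property and the state strings are illustrative, and `send(txn)` is assumed to return `true` on success.

```javascript
// Hypothetical sketch of Ignore and Process Now on an integration queue.
function ignoreTransaction(txn) {
    if (txn.state === 'Complete' || txn.state === 'Ignored') {
        throw new Error('Cannot ignore a ' + txn.state + ' Transaction');
    }
    txn.state = 'Ignored';
    // An ignored outbound attachment is marked Rejected so it can go with the next Transaction
    if (txn.direction === 'Outbound' && txn.attachment) {
        txn.attachment.state = 'Rejected';
    }
    // Nothing here restarts the queue: later Transactions stay Queued
}

function processNow(queue, send) {
    // Manual restart: process Queued Transactions in order until one fails
    for (var i = 0; i < queue.length; i++) {
        var txn = queue[i];
        if (txn.state !== 'Queued') continue;
        if (send(txn)) {
            txn.state = 'Complete';
        } else {
            txn.state = 'Error';
            break; // stop so later updates are not sent out of sequence
        }
    }
}
```

Keeping the restart as a separate, explicit call is what gives the "focused environment" for debugging: ignoring a Transaction changes nothing else until someone chooses to run Process Now.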

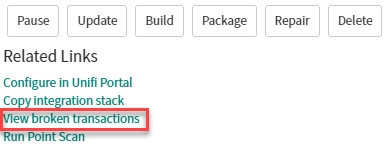

In the case of a major outage (where perhaps the other system is down, or the authentication user credentials have been updated), you might have a number of failed Transactions. Rather than stepping into each Transaction and replaying them individually, you can simply replay all the broken Transactions on the Integration. This could represent a significant time-saving.

As a further aid, there is also a 'View broken transactions' Related Link on the Integration record which will take you to a list of all Transactions in an Error or Timed Out state.

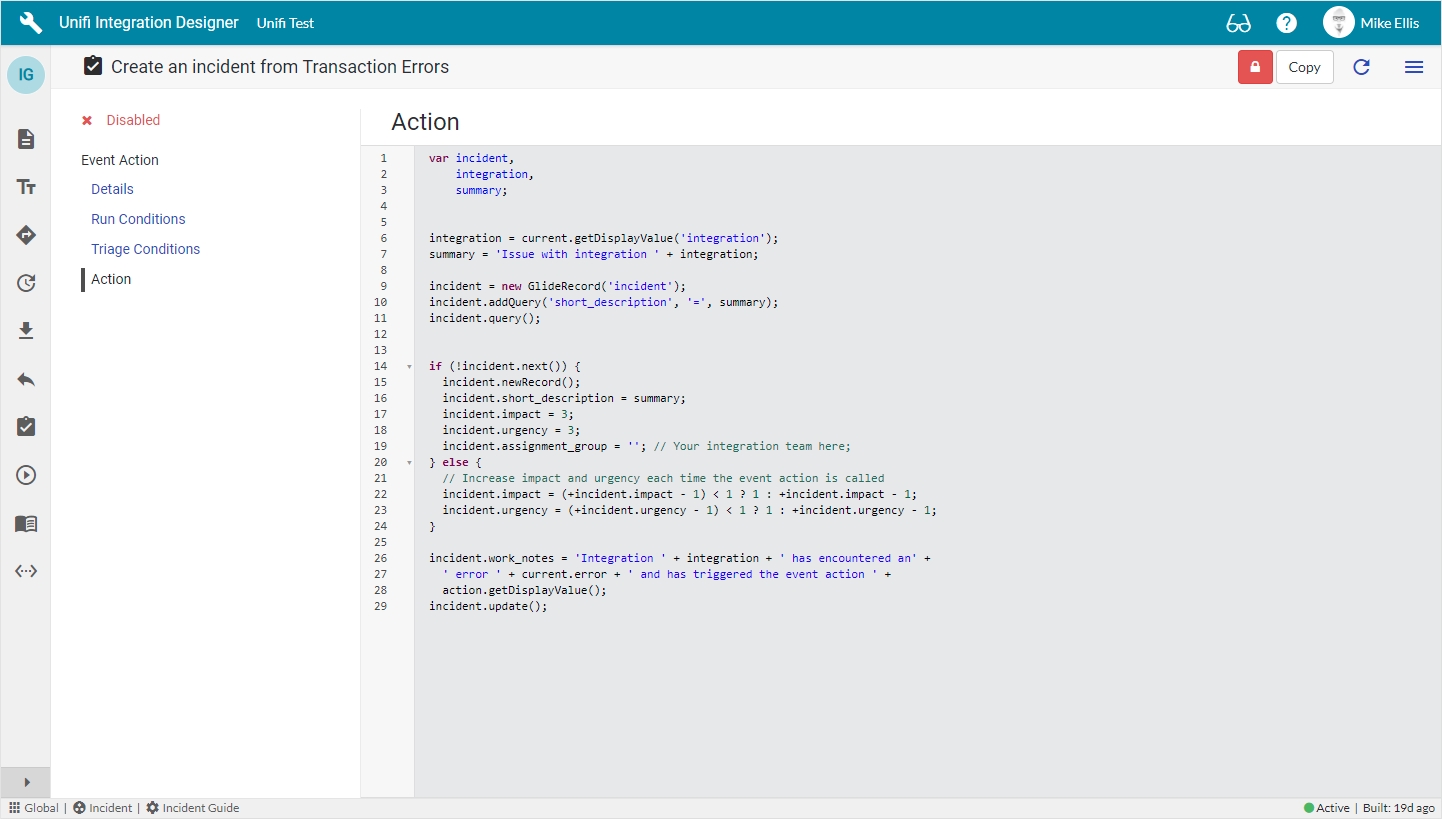

Hints & Tips: It is possible for Unifi to be proactive and raise Incidents about Integrations (see Event Actions). In such a case, the repair functionality could be tied to the resolution of the Incident, whereby Unifi automatically runs 'Repair' on the Integration the Incident was raised against.

When a Transaction is replayed (whether individually, or in bulk), the original record is set to 'Ignored' and a new Transaction (with a decimal suffix) is generated (taking the same Stage data & reprocessing it) and sent.
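A minimal sketch of Replay and the bulk Repair described above, assuming plain objects for Transactions and a hypothetical `<number>.<n>` format for the decimal suffix (the actual numbering format may differ). The functions `replayTransaction` and `repairIntegration` are illustrative names, not Unifi APIs.

```javascript
// Hypothetical sketch of Replay and bulk Repair; the suffix format is an assumption.
function replayTransaction(transactions, original) {
    original.state = 'Ignored';

    // Count earlier replays of the same base number to choose the next decimal suffix
    var base = original.number.split('.')[0];
    var suffix = transactions.filter(function (t) {
        return t.number.indexOf(base + '.') === 0;
    }).length + 1;

    var replay = {
        number: base + '.' + suffix,
        stage: original.stage, // the same Stage data is reprocessed
        state: 'Queued'        // ready to be sent
    };
    transactions.push(replay);
    return replay;
}

// Replays every broken Transaction (Error or Timed Out) on an Integration
function repairIntegration(transactions) {
    var broken = transactions.filter(function (t) {
        return t.state === 'Error' || t.state === 'Timed Out';
    });
    return broken.map(function (t) {
        return replayTransaction(transactions, t);
    });
}
```

The `broken` list is computed before any replay is added, so newly generated Transactions are not themselves picked up by the same Repair run.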

| Field | Description | Value |
| --- | --- | --- |
| Name | The name of the ServiceNow process being integrated. | <SN Process Name> (e.g. Incident) |
| API Name* | The unique name of this process for use with the API. | <your_unique_api> |
| Target table | The primary target or process table that this integration uses. | 'Incident' [incident] |
| Reference field | The field on the target table that is used as the reference for the external system. | 'Number' |
| Description | Describe what this Process is for. | <Your description> |

Your 'New Process' modal should look like this:

Click Create.

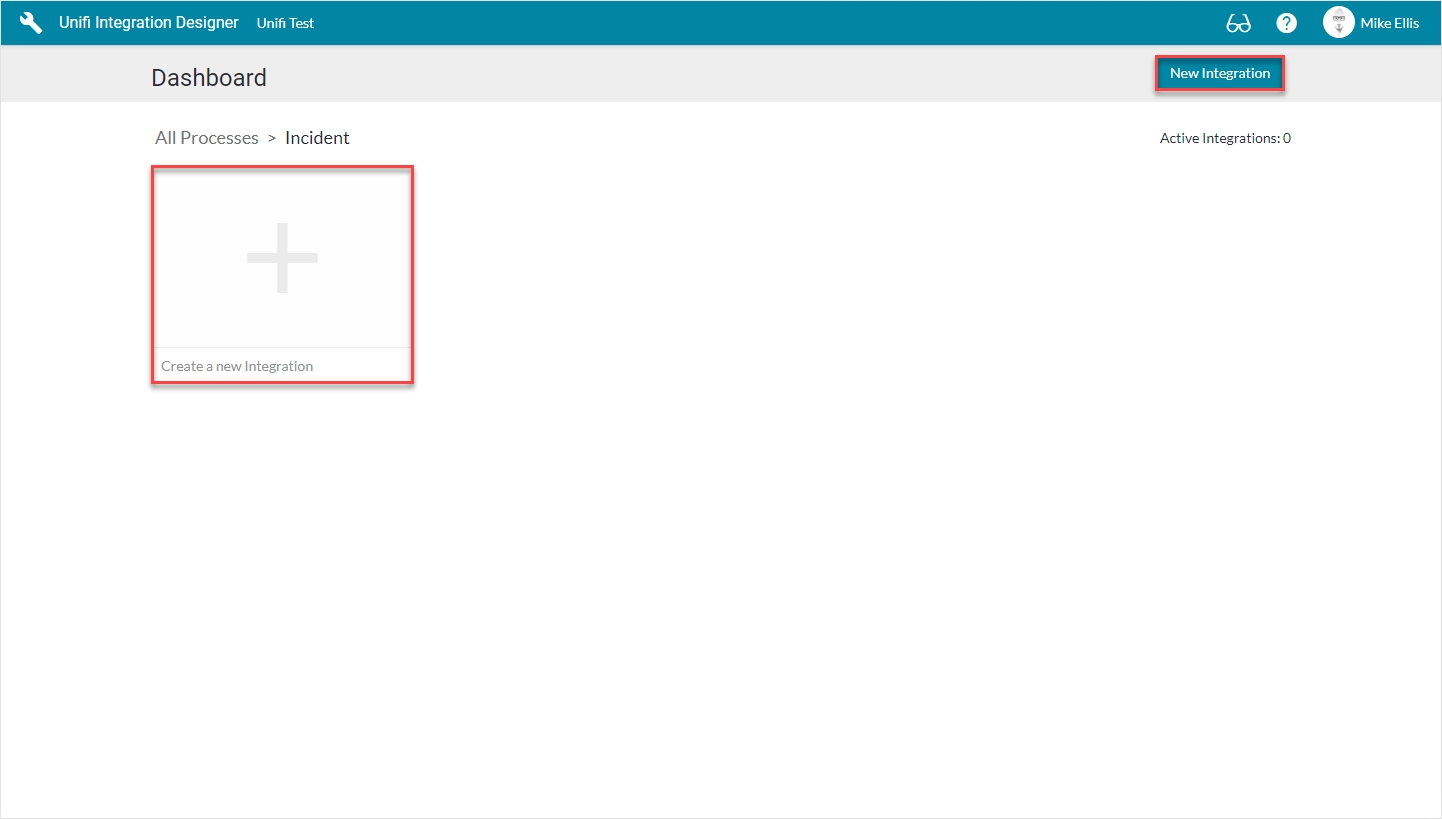

You will be redirected to your Process Dashboard:

Click either the '+' tile or 'New Integration' in preparation to configure the Integration.

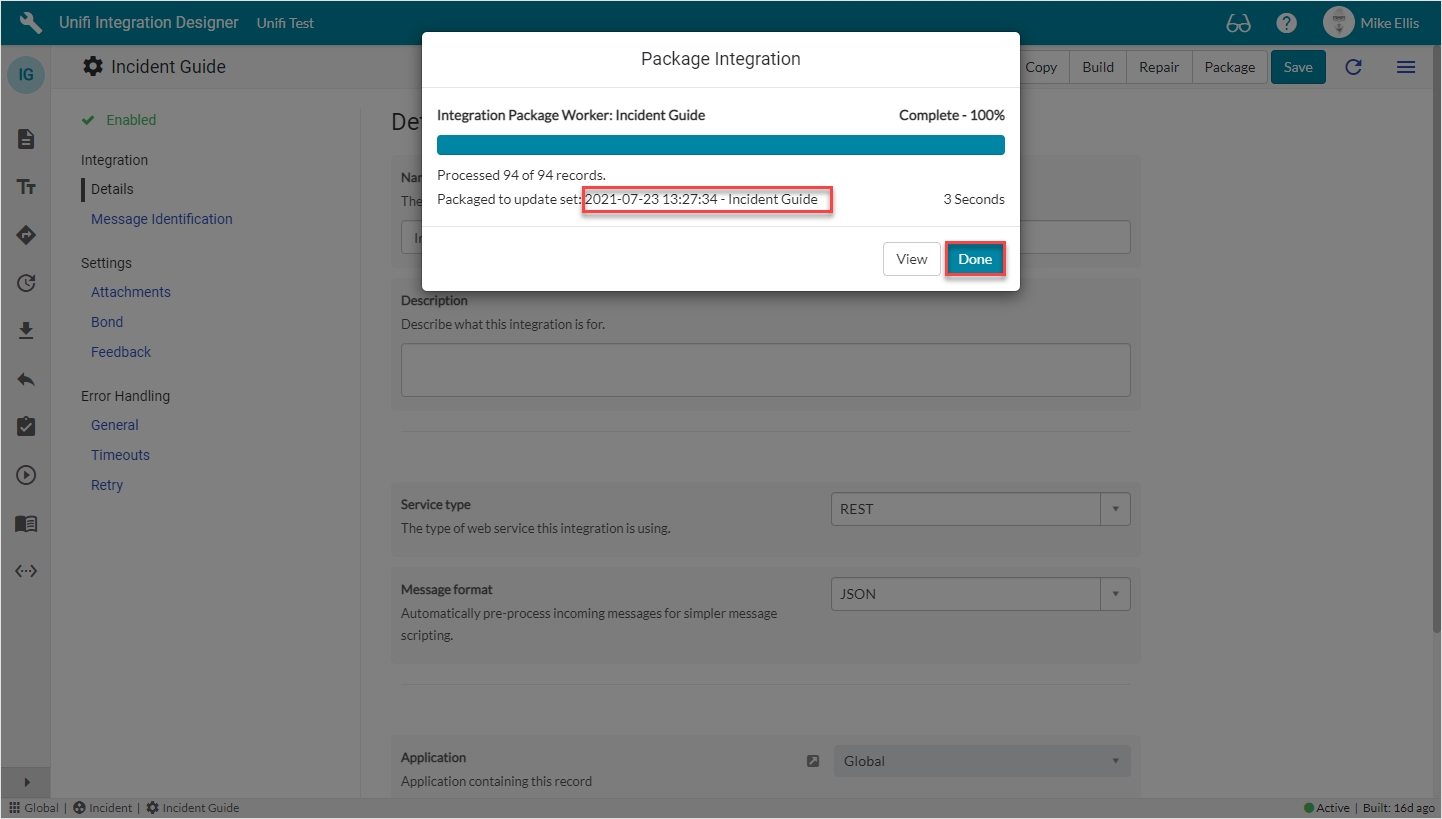

Name

The Package Integration popup is displayed.

Confirm by clicking Package.

Unifi packages the Integration into an Update Set which is automatically downloaded.

Users that do not have the admin role are not allowed to export Update Sets.

The Integration Package Worker modal is displayed.

Copy the name of the Update Set.

Click Done to close the modal or View to view the new local update set record.

Navigate to the downloaded Update Set file, then rename it using the copied Update Set name so that you can easily identify it when uploading it to the other instance.

Congratulations! You now have the Update Set containing the complete Integration available to be imported to your other instance.

You can find more information on how to Load customizations from a single XML file in the ServiceNow Product Documentation.

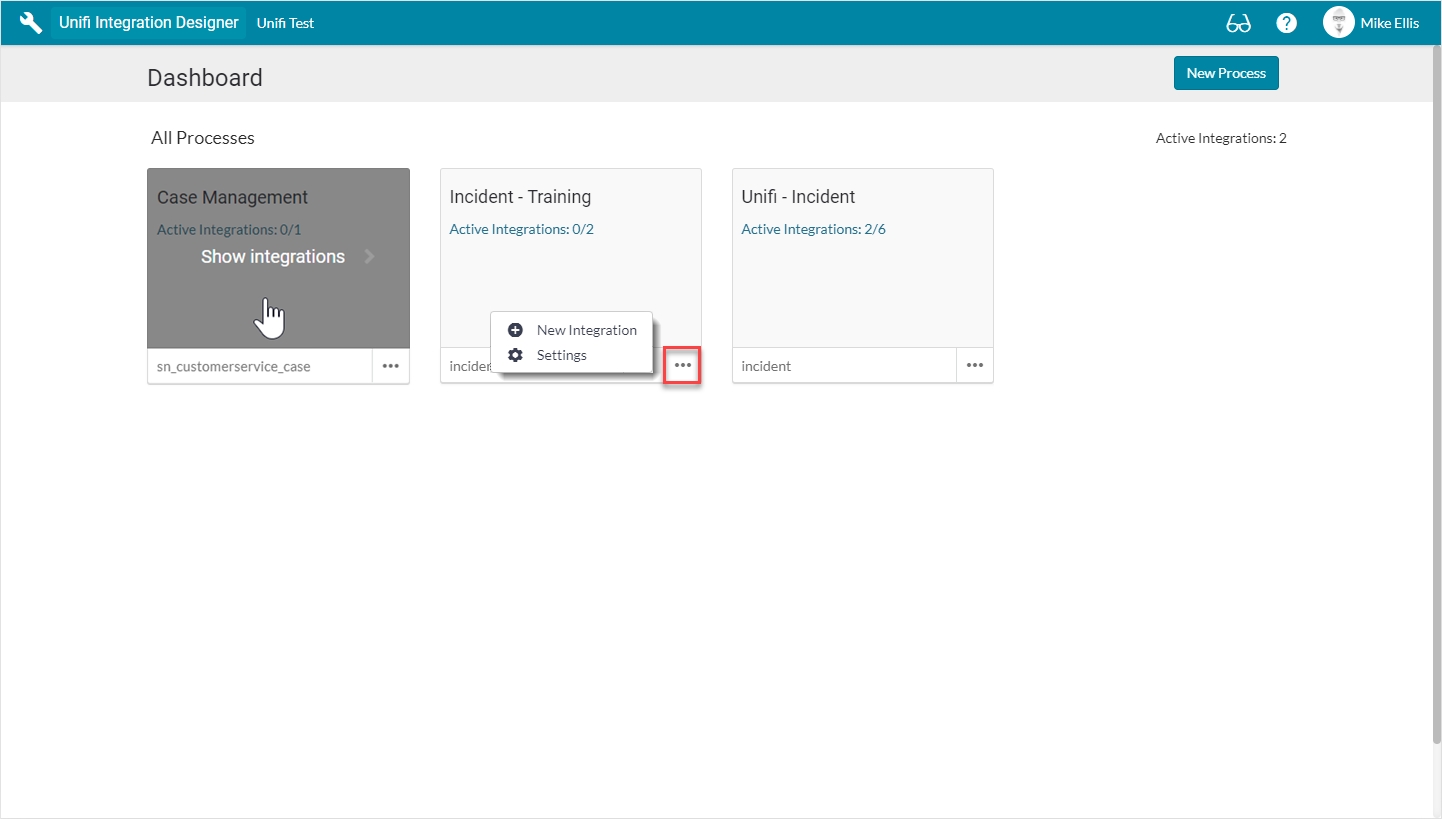

You will be greeted with the following Dashboard (which opens in a new window):

Any existing Processes will be listed as tiles, showing the number of Active Integrations for each. The total number of Active Integrations is also shown on the Dashboard.

Hovering over the tile of an existing Process will display 'Show integrations'. Clicking it will take you to the Integrations page (for that Process).

If appropriate, you can edit the Settings of an existing Process, or add a New Integration to it, by clicking the ellipsis (at the bottom right of the tile).

In the next section, we shall look at configuring the Process.

/**
 * Executes a child function corresponding to the object's type property.
 * The object is passed to the child function so methods and properties can be overridden.
 *
 * @param {Object} obj The full class object to be patched.
 */
function hotfix(obj) {
    var type = typeof obj === 'function' ? obj.prototype.type || obj.type : obj.type;
    if (type && typeof hotfix[type] === 'function') {
        hotfix[type](obj);
    }
}

hotfix.version = '4.4.0.0';
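The dispatcher only does something once a child function is registered under a type name. The following usage sketch reproduces `hotfix()` so it runs on its own and registers a patch for a class. `ExampleProcessor` and its `greet` method are hypothetical stand-ins for a real class; only the `hotfix` function itself comes from the code above.

```javascript
// The hotfix() dispatcher from above, reproduced so this example is self-contained
function hotfix(obj) {
    var type = typeof obj === 'function' ? obj.prototype.type || obj.type : obj.type;
    if (type && typeof hotfix[type] === 'function') {
        hotfix[type](obj);
    }
}
hotfix.version = '4.4.0.0';

// Hypothetical class whose prototype carries a 'type' property
function ExampleProcessor() {}
ExampleProcessor.prototype = {
    type: 'ExampleProcessor',
    greet: function () { return 'original'; }
};

// Hypothetical patch, registered under the class's type name
hotfix.ExampleProcessor = function (cls) {
    // Override a method on the prototype so every instance picks it up
    cls.prototype.greet = function () { return 'patched'; };
};

// Apply any registered patch for this class
hotfix(ExampleProcessor);
```

After `hotfix(ExampleProcessor)` runs, `new ExampleProcessor().greet()` returns `'patched'`. Calling `hotfix()` for a type with no registered child function does nothing, so classes can call it unconditionally.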

| Field | Type | Description |
| --- | --- | --- |
| Name | String | The name of the Test Scenario. Automatically populated from the Transaction and the Message used to generate the test. |
| Integration test | Reference | The Integration Test this Test Scenario belongs to. |
| Type | Choice | Automatically populated. Choices include: Inbound Async, Inbound Sync, Outbound Async, Outbound Sync |
| Order | Integer | The order in which each Test Scenario is run (defaulted to match the order of Transactions on the originating Bond). |
| Description | String | Use this to enter a description for your Test Scenario. |

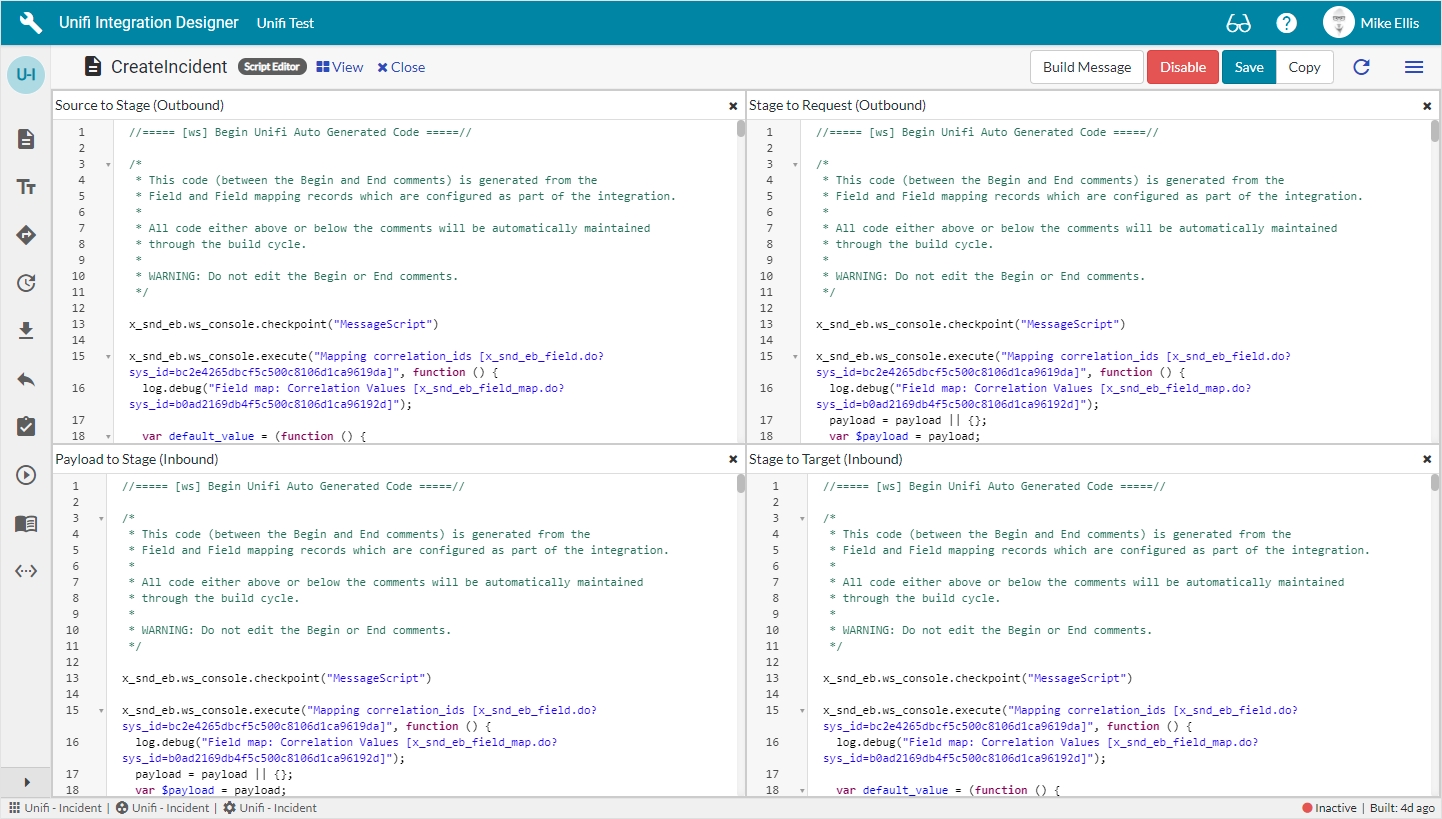

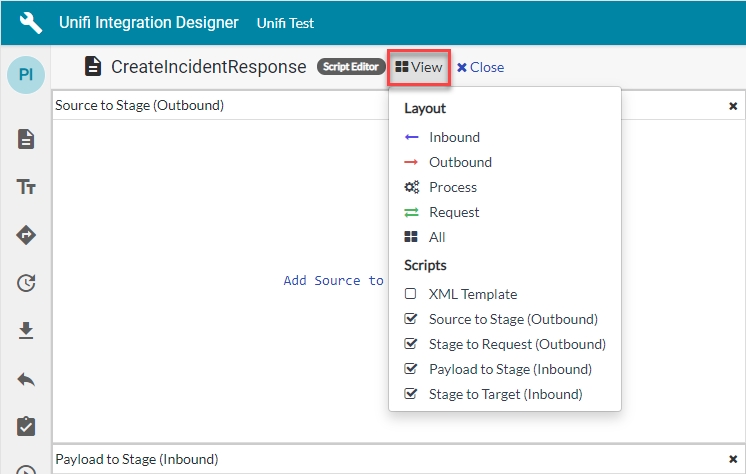

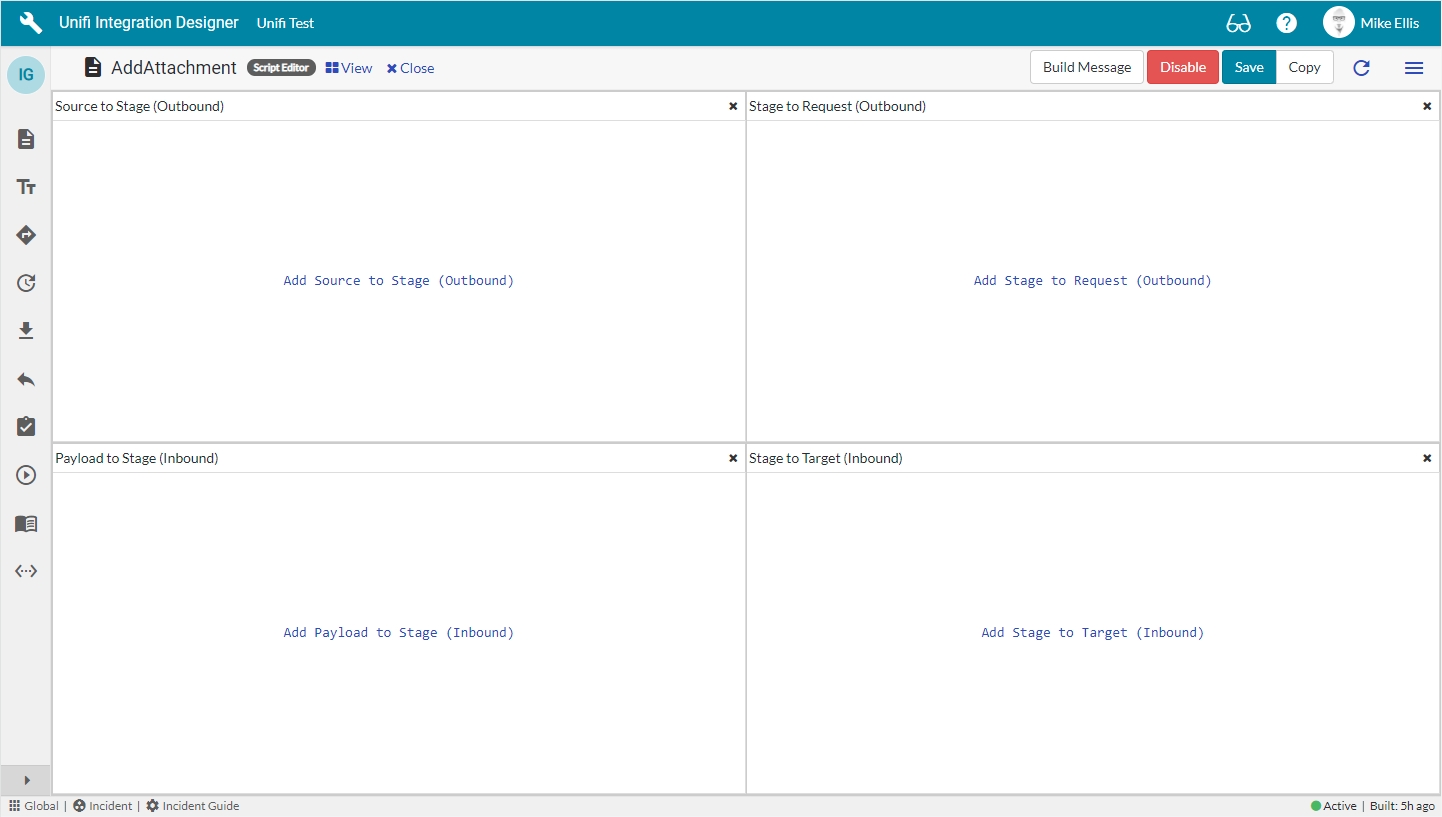

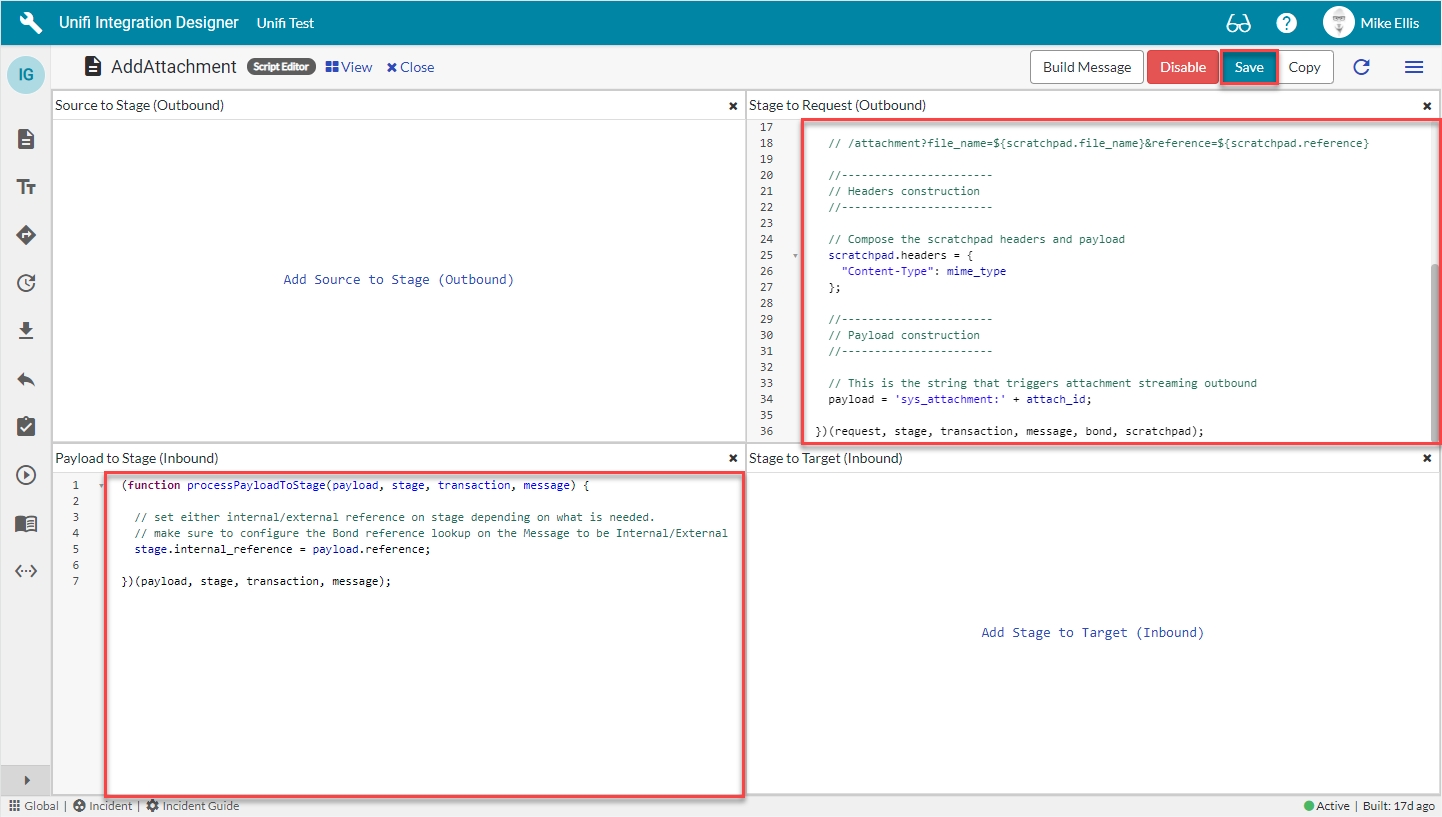

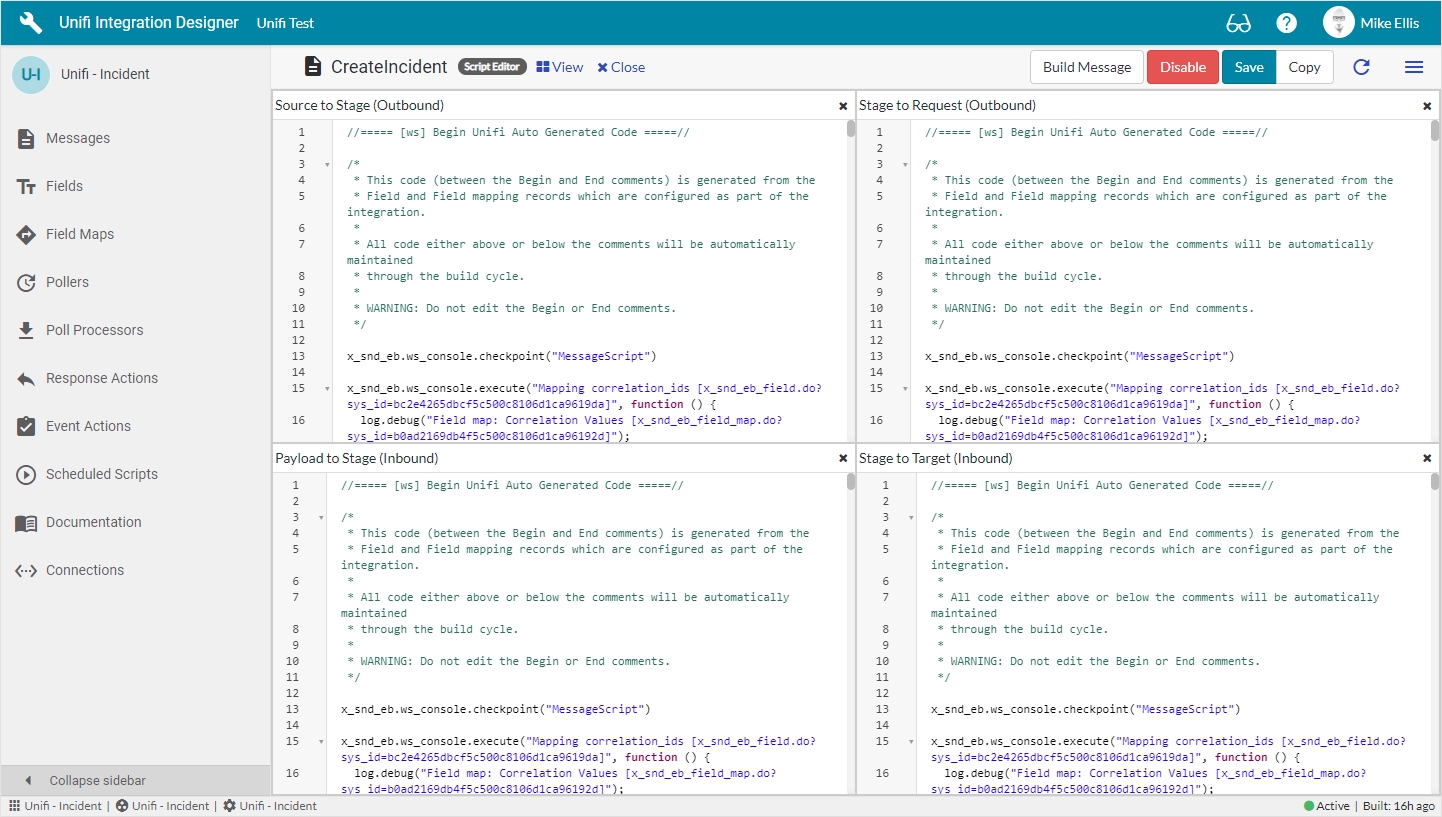

Message Scripts are where the request processing and data mapping occurs.

There are four different types of script which are used either before or after the Stage. Having these script types in relation to the Stage allows us to find any potential errors/discrepancies in data more easily.

Payload to Stage (Inbound)

Data coming in is going to be translated (moved ‘as is’) from the Request to the Stage.

Source to Stage (Outbound)

Data going out is going to be transformed (moved and changed) from the source to the Stage.

The following example extract from the Source to Stage Message Script shows location data being mapped:

Stage to Target (Inbound)

Data coming in is going to be transformed (moved and changed) from the Stage to the target.

Stage to Request (Outbound)*

Data going out is going to be translated (moved ‘as is’) from the Stage to the Request.
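Where each script type sits relative to the Stage can be sketched as two small pipelines. This is an illustrative model only: the functions `outbound` and `inbound`, the mapping callbacks and the field names are hypothetical, not Unifi's Message Script variables. Translation steps move data as is; transformation steps are where mapping logic runs.

```javascript
// Hypothetical sketch of the four Message Script positions around the Stage.
function outbound(source, mapSourceToStage) {
    var stage = {};
    mapSourceToStage(source, stage);                 // Source to Stage: transform
    var request = { body: JSON.stringify(stage) };   // Stage to Request: translate as is
    return { stage: stage, request: request };
}

function inbound(requestBody, mapStageToTarget) {
    var stage = JSON.parse(requestBody);             // Payload to Stage: translate as is
    var target = {};
    mapStageToTarget(stage, target);                 // Stage to Target: transform
    return { stage: stage, target: target };
}

// Usage: an outbound mapping that renames and reshapes two fields
var out = outbound(
    { short_description: 'Printer broken', location: 'London' },
    function (src, stage) {
        stage.summary = src.short_description;
        stage.site = src.location.toUpperCase();
    }
);
```

Because the Stage is captured between the two steps in each direction, a data discrepancy can be traced to the side of the Stage on which it was introduced.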

In Unifi Integration Designer, all your Message Scripts are visible in a single pane, which makes scripting much more efficient.

Although you are able to script directly in the Message Scripts, much of the scripting can now be done by configuring Fields & Field Maps (see ).

The Message Scripts can also be the place where Bond state & ownership are set manually (without using the control fields on Message).

The following Stage to Target Message Script from the AcknowledgeCreateIncident Message sets the Bond state to Open: